Active Directory Troubleshooting, Auditing, and Best Practices

A Non‐Introduction to Active Directory

The world has been using Active Directory (AD) for more than a decade now, so there's probably little point in doing a traditional introduction for this book. However, there's still a bit of context that we should cover before we get started, and we should definitely think about AD's history as it applies to our topics of troubleshooting, auditing, and best practices.

The real point of this chapter is to identify key elements of AD that you need to completely inventory in your environment before proceeding in this book. Much of the material in the following chapters will refer to specific infrastructure elements, and will make recommendations based on specifics in common AD environments and scenarios. To make the most of those recommendations, you'll need to know the specifics of your own environment so that you know exactly which recommendations apply to you—and a complete, up‐to‐date inventory is the best way to gain that familiarity. To conclude this chapter, I'll briefly outline what's coming up in the chapters ahead.

A Brief AD History and Background

AD was introduced with Windows 2000 Server, and replaced the "NT Domain Services" (NTDS) that had been used since Windows NT 3.1. AD is Microsoft's first real directory; NTDS was pretty much just a flat user account database. AD was designed to be more scalable, more efficient, more standards‐based, and more modern than its predecessor. However, AD was (and is) still built on the Windows operating system (OS), and as such shares some of the OS's particular patterns, technologies, eccentricities, and other characteristics.

AD also integrated a successor to Microsoft's then‐nascent registry‐based management tools. Known today as Group Policy, this new feature added significant roles to the directory beyond the normal one of authentication. With Group Policy, you can centrally define and assign literally thousands of configuration settings to Windows computers (and even non‐Windows computers, with the right add‐ins) belonging to the domain.

When AD was introduced, security auditing was something that relatively few companies worried about. Since 2000, numerous legislative and industry regulations throughout the world have made security and privacy auditing much more commonplace, although AD's native auditing capabilities have changed very little throughout that time. Because of its central role in authentication and configuration management, AD occupies a critical role for security operations, management, and review within organizations.

We also have to recognize that, aside from governing permissions on its own objects, AD doesn't play a central role in authorization. That is, permissions on things like files, folders, mailboxes, databases, and so forth aren't managed within AD. Instead, those permissions are managed at the point of the resource, meaning they're managed on your file servers, mail servers, database servers, and so forth. Those servers may assign permissions to identities that are authenticated by AD, but those servers control who actually has access to what. This division of labor between authentication and authorization makes for a highly‐scalable, robust environment, but it also creates significant challenges when it comes to security management and auditing because there's no central place to control or review all of those permissions.

Over the past decade, we've learned a lot about how AD should be built and managed. Gone are the days when consultants routinely started a new forest by creating an empty root domain; also gone are the days when we believed the domain was the ultimate security boundary and that organizations would only ever have a single forest. In addition to covering troubleshooting and auditing, this book will present some of the current industry best practices around managing and architecting AD.

We've also learned that, although difficult to change, your AD design isn't necessarily permanent. Tools and techniques originally created to help migrate to AD are now used to restructure AD, in effect "migrating" to a new version of a domain as our businesses change, merge, and evolve. This book doesn't specifically focus on mergers and restructures, but keep in mind that those techniques (and tools to support them) are available if you decide that a directory restructure is the best way to proceed for your organization.

Inventorying Your AD

Before we get started, it's important that you have an up‐to‐date, accurate picture of what your directory looks like. This doesn't mean turning to the giant directory diagram that you probably have taped to the wall in your data center or server room, unless you've double‐checked to make sure that thing is up‐to‐date and accurate! Throughout this book, I'll be referring to specific elements of your AD infrastructure, and in some cases, you might even want to consider implementing changes to that infrastructure. In order to best follow along, and make decisions, you'll want to have all of the following elements inventoried.

Forests and Trusts

Most organizations have realized that, given the power of the forest‐level Enterprise Admins group, the AD forest is in fact the top‐level security boundary. Many companies have multiple forests, simply because they have resources that can't all be under the direct control of a single group of administrators. However, so that users with the appropriate permissions can still access resources across forests, cross‐forest trusts are usually defined. Your first inventory should be to define the forests in your organization, determine who controls each forest, and document the trusts that exist between those forests.

Cross‐forest trusts can be one‐way, meaning that if Forest A trusts Forest B, the converse is not necessarily true unless a separate trust has been established so that Forest B explicitly trusts Forest A. Two‐way trusts are also possible, meaning that Forest A and Forest B can trust each other through a single trust connection. Forest trusts are also non‐transitive: If Forest A trusts Forest B, and Forest B trusts Forest C, then Forest A does not trust Forest C unless a separate, explicit trust is created directly between A and C.

When we talk about trust, we're saying that the trusting forest will accept user accounts from the trusted forest. That is, if Forest A trusts Forest B, then user accounts from Forest B can be assigned permissions on resources within Forest A. Forest trusts automatically include every domain within the forest so that if Forest A contains five domains, then every one of those domains would be able to assign permissions to user accounts from Forest B. Each forest consists of a root domain and may also include one or more child domains.
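One way to keep the direction and non‐transitivity rules straight while you document your trusts is to model them as simple directed pairs. The following is a minimal sketch, not a real AD API: the forest names and the `can_assign_permissions` helper are hypothetical, and it only considers direct, explicitly recorded forest trusts.

```python
# Each trust is recorded as (trusting_forest, trusted_forest).
# Forest names are hypothetical placeholders for your own inventory.
TRUSTS = {
    ("ForestA", "ForestB"),  # one-way: Forest A trusts Forest B
    ("ForestB", "ForestC"),  # one-way: Forest B trusts Forest C
}


def add_two_way(trusts, x, y):
    """Return a new set in which x and y trust each other."""
    return trusts | {(x, y), (y, x)}


def can_assign_permissions(trusts, resource_forest, account_forest):
    """True if accounts from account_forest can be granted permissions
    on resources in resource_forest. Only direct trusts count, because
    forest trusts are not transitive across forests."""
    if resource_forest == account_forest:
        return True
    return (resource_forest, account_forest) in trusts


print(can_assign_permissions(TRUSTS, "ForestA", "ForestB"))  # True
print(can_assign_permissions(TRUSTS, "ForestB", "ForestA"))  # False
print(can_assign_permissions(TRUSTS, "ForestA", "ForestC"))  # False
```

Recording a two‐way trust as two directed entries keeps the lookup trivial and makes a missing direction easy to spot when you compare the model against your diagram.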

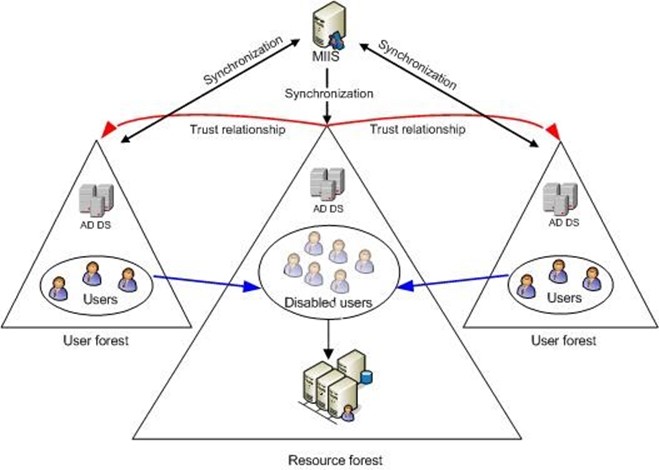

Figure 1.1 shows how you might document your forests. Key elements include meta directory synchronization links, forest trusts, and a general indication of what each forest is used for (such as for users or for resources).

Figure 1.1: Documenting forests.

For the various diagrams in this chapter, I'm going to draw from a variety of sources, including my past consulting engagements and Microsoft documentation. My purpose in doing so is to illustrate that these diagrams can take many different forms, at many different levels of complexity, and with many different levels of sophistication. Consider each of them, and produce your own diagrams using the best tools and skills you have.

Domains and Trusts

Domains act as a kind of security boundary. Although subject to the management of members of the Enterprise Admins group, and to a degree the Domain Admins of the forest root domain, domains are otherwise independently managed by their own Domain Admins group (or whatever group those permissions have been assigned or delegated to).

Account domains are those that have been configured to contain user accounts but which contain no resource servers such as file servers. Resource domains contain only resources such as file servers, and do not contain user accounts. Neither of these designations is strict, and neither exists within AD itself. For example, any resource domain will have at least a few administrator user accounts, user groups, and so forth. The type of domain designation is strictly a human convenience, used to organize domains in our minds. Many companies also use mixed domains, in which both user accounts and resources exist.

Domains are typically organized into a tree, beginning with the root domain and then through domains that are configured as children of the root. Domain names reflect this hierarchy: Company.com might be the name of a root domain, and West.Company.com, East.Company.com, and North.Company.com might be child domains. Within such a tree, all domains automatically establish a transitive parent‐child two‐way trust, effectively meaning that each domain trusts each other domain within the same tree.

Forests, as the name implies, can contain multiple domain trees. By default, the root of each tree has a two‐way, transitive trust with the forest root domain (which is the root of the first tree created within that forest), effectively meaning that all domains within a forest trust each other. That's the main reason companies have multiple forests, because the full trust model within a forest gives top‐level forest‐wide control to the forest's Enterprise Admins group.

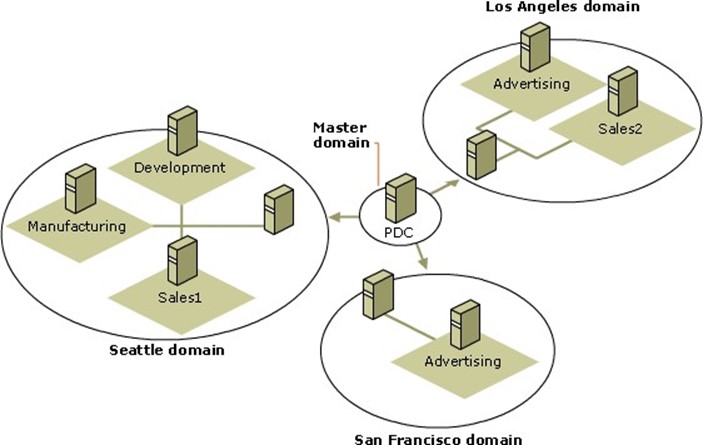

Even if you rely entirely on these default inter‐domain trusts, it's still important to document them, along with the domains' names. Figure 1.2 shows how you might build a domain diagram in a program like Microsoft Office Visio. The emphasis in this diagram is on the logical domain structure.

Figure 1.2: Documenting domains.

If you have any specialized domains—such as resource‐only domains, user‐only domains, and so forth—note those in your documentation. Also note the number of objects (especially computer and user accounts) in each domain. That is actually one of the most important metrics you can know about your domains, although many administrators can't immediately recall their numbers.

Domain Controllers

Domain controllers (DCs) are what make AD work. They're the servers that run AD's services, making the directory a reality. It's absolutely crucial, as you start reading this book, that you know how many DCs you have, where they're located, what domains they're in, and their individual IP addresses.

In many environments, DCs also provide other services, most frequently Domain Name System (DNS). Other roles held by DCs may include WINS and DHCP services.

A DC's main role is to provide authentication services for domain users and for resources within the domain. We typically think of this authentication stuff as happening mainly when users show up for work in the morning—and in most cases, that is when the bulk of the authentication traffic occurs. However, as users attempt to access resources throughout the day, their computer will automatically contact a DC to obtain a Kerberos ticket for those resources. In other words, authentication traffic continues throughout the day—albeit at a somewhat slower, more evenly‐distributed pace than the morning rush.

That morning rush can be significant: Each user's computer must contact a DC to log itself onto the domain, and then again when the user is ready to log on. Users almost always start the day with a few mapped drives, each of which may require a Kerberos ticket, and they usually fire up Outlook, requiring yet another ticket. Some of the organizations I've consulted with have each user interacting with a DC more than a dozen times each morning, and then several dozen more times throughout the day.

We tend to size our DCs for that morning rush, and that capacity generally sees us through the rest of the day, even if we take the odd DC offline mid‐day for patching or other maintenance.

Each DC maintains a complete, read/write copy of the entire directory (the only exception being the newer read‐only domain controllers, or RODCs, which as the name implies contain only a readable copy of the directory). Multi‐master replication ensures that any change made on any DC will eventually propagate to every other DC in the domain. Replication is often one of the trickiest bits of AD, and is one of the things we tend to spend the most time monitoring and troubleshooting. Not all domain data is created equal: Some high‐priority data, such as account lockouts, replicates almost immediately (or at least as quickly as possible), while less‐critical information can take much longer to make its way throughout the organization.

Figure 1.3 shows what a DC inventory might look like. Note the emphasis on physical details: IP addresses, DNS configuration, domain membership, and so forth.

Figure 1.3: DC inventory.

It's also important to note whether any of your DCs are performing any non‐AD‐related tasks, such as hosting a SQL Server instance (which isn't recommended), running IIS, and so forth.

Global Catalogs

A global catalog (GC) is a specific service that can be offered by a DC in addition to its usual DC duties. The GC contains a subset of information about every object in an entire forest, and enables users in each domain to discover information from other domains in the same forest. Each domain needs at least one GC; however, given the popularity of Exchange Server and its heavy dependence on GCs (Outlook, for example, relies on GCs to do email address resolution), it's not unusual to see a majority, or even all, DCs in a domain configured as GC servers.

Make sure you know exactly where your GCs are located. Numerous network operations can be hindered by a paucity of GCs, but having too many GCs can significantly increase the replication burden on your network.

In Figure 1.3, "GC" is used to indicate DCs that are also hosting the GC server role.

FSMOs

Certain operations within a domain, and within a forest, need a single DC to be in charge. It is absolutely essential for most troubleshooting processes that you know where these Flexible Single Master Operation (FSMO) role‐holders sit within your infrastructure:

- The RID Master is in charge of handing out Relative IDs (RIDs) within a single domain (and so you'll have one RID Master per domain). RIDs are used to uniquely identify new AD objects, and they are assigned in batches to DCs. If a DC runs out of RIDs and can't get more, that DC can't create new objects. It's common to put the RID Master role on a DC that's used by administrators to create new accounts so that that DC will always be able to request RIDs.

- The Infrastructure Master maintains security identifiers for objects referenced in other domains—typically, that means updating user and group links. You have one of these per domain.

- The PDC Emulator provides backward‐compatibility with the old NTDS, and is the only place where NTDS‐style changes can be made (any DC provides read access for NTDS clients). Given that NTDS clients are becoming extinct in most organizations, the PDC Emulator (you'll have one in each of your domains, by the way) doesn't get used a lot for that purpose. Fortunately, it has a few other things to keep it busy. For example, password changes processed by other DCs tend to replicate to the PDC Emulator first, and the PDC Emulator serves as the authoritative time source for time synchronization within a domain.

- Each forest will contain a single Schema Master, which is responsible for handling schema modifications for the forest.

- Each forest also has a Domain Naming Master, which keeps track of the domains in the forest, and which is required when adding or removing domains to or from the forest. The Domain Naming Master also plays a role in maintaining group membership across the forest.

Marking these role owners on your main diagram (such as Figure 1.3) is a great way to document the FSMO locations. Some organizations also like to indicate a "backup" DC for each FSMO role so that in the event a FSMO role must be moved, it's clear where it should be moved to.
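If you keep your FSMO inventory in a simple list, a few lines of code can confirm that it is internally consistent: one holder per domain for each of the three domain‐wide roles, and one holder per forest for each of the two forest‐wide roles. This is only a sketch under stated assumptions: the DC names, domain names, and the `check_fsmo` helper are hypothetical, the inventory is typed in by hand rather than read from AD, and a single forest is assumed.

```python
from collections import Counter

DOMAIN_ROLES = {"RID Master", "Infrastructure Master", "PDC Emulator"}
FOREST_ROLES = {"Schema Master", "Domain Naming Master"}

# Hypothetical hand-maintained inventory: (dc_name, domain, role)
inventory = [
    ("DC1", "company.com", "Schema Master"),
    ("DC1", "company.com", "Domain Naming Master"),
    ("DC1", "company.com", "PDC Emulator"),
    ("DC2", "company.com", "RID Master"),
    ("DC2", "company.com", "Infrastructure Master"),
    ("DC5", "west.company.com", "PDC Emulator"),
    ("DC5", "west.company.com", "RID Master"),
    # Infrastructure Master for west.company.com is deliberately missing
]


def check_fsmo(inventory, domains):
    """Return a list of problems found in a single-forest FSMO inventory."""
    problems = []
    forest_counts = Counter(r for _, _, r in inventory if r in FOREST_ROLES)
    for role in sorted(FOREST_ROLES):
        if forest_counts[role] != 1:
            problems.append(f"forest: expected 1 {role}, found {forest_counts[role]}")
    domain_counts = Counter((d, r) for _, d, r in inventory if r in DOMAIN_ROLES)
    for domain in domains:
        for role in sorted(DOMAIN_ROLES):
            found = domain_counts[(domain, role)]
            if found != 1:
                problems.append(f"{domain}: expected 1 {role}, found {found}")
    return problems


for problem in check_fsmo(inventory, ["company.com", "west.company.com"]):
    print(problem)
```

A check like this only validates your documentation against the rules described above; it says nothing about where the roles actually sit until the inventory itself is verified against the directory.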

Containers

The logical structure of AD is divided into a set of hierarchical containers. AD supports two main types: containers and organizational units (OUs). A couple of built‐in containers (such as the Users container) exist by default within a domain, and you can create all the OUs that you want to help organize your domain's objects and resources. Again, an inventory here is critical, as several operations—most especially Group Policy application—work primarily based on things like OU membership.

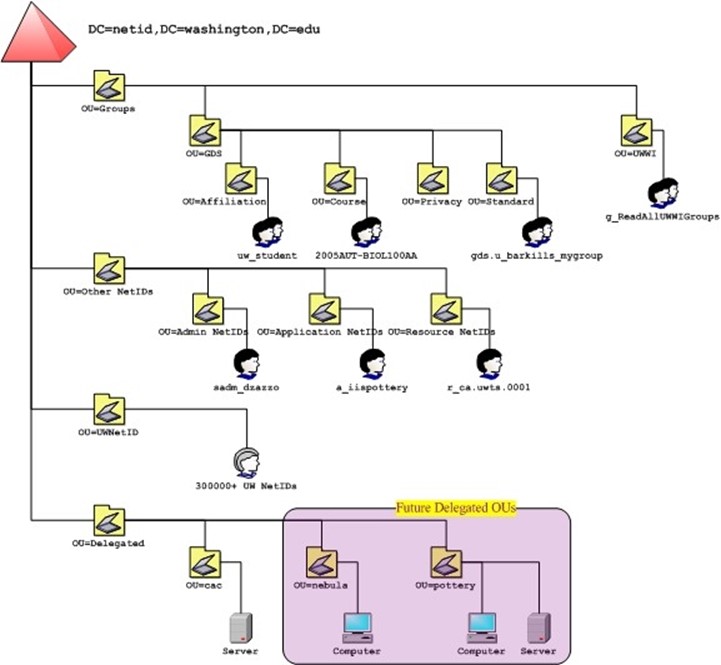

Figure 1.4 shows one way in which you might document your OU and container hierarchy. Depending on the size and depth of your hierarchy, you could also just grab a screenshot from a program like Active Directory Users and Computers.

Figure 1.4: Documenting OUs and containers.

Try to make some notation of how many objects are in each container, and if possible make a note of which containers have which Group Policy Objects (GPOs) linked to them. That information will be useful as we dive into troubleshooting and best practices discussions.

Subnets, Sites, and Links

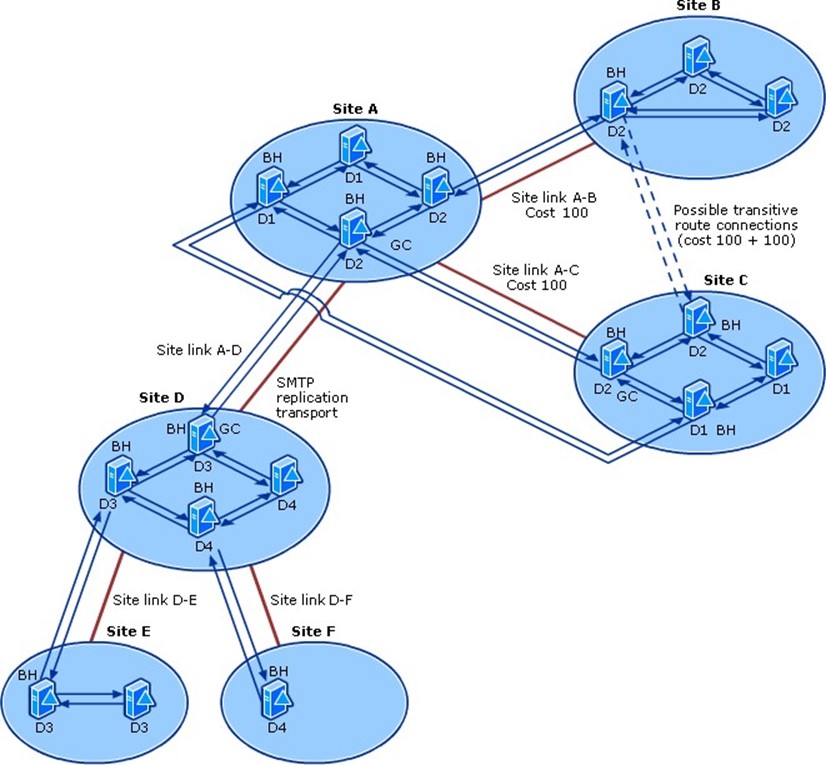

In AD terms, a subnet is an entry in the directory that defines a single network subnet, such as 192.168.1.0/24. A site is a collection of subnets that all share local area network (LAN)‐style connectivity, typically 100Mbps or faster. In other words, a site consists of all the subnets in a given geographic location.
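To make the subnet‐to‐site relationship concrete, here is a minimal sketch that looks up which site an IP address would fall into, given a documented list of subnets. The subnet values, site names, and the `site_for` helper are all made up for illustration; the sketch uses Python's standard `ipaddress` module and simply prefers the most specific matching subnet.

```python
import ipaddress

# Hypothetical subnet-to-site assignments taken from a written inventory
SITE_SUBNETS = {
    "192.168.1.0/24": "HQ",
    "192.168.2.0/24": "HQ",
    "10.20.0.0/16": "Branch",
}


def site_for(address, site_subnets=SITE_SUBNETS):
    """Return the site whose subnet contains the address, or None."""
    ip = ipaddress.ip_address(address)
    best = None
    for cidr, site in site_subnets.items():
        # ip_network() is strict by default: it raises ValueError when the
        # prefix length does not match the address (host bits are set),
        # which catches typos in the documented subnets.
        net = ipaddress.ip_network(cidr)
        if ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, site)
    return best[1] if best else None


print(site_for("192.168.2.17"))  # HQ
print(site_for("10.20.33.4"))    # Branch
print(site_for("172.16.0.1"))    # None: no documented subnet covers it
```

Running every DC and a sample of client addresses through a lookup like this is a quick way to find machines that sit on a subnet nobody has documented.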

Links, or site links, define the physical or logical connectivity between sites. These tell AD's replication algorithms which DCs are able to physically communicate across wide area network (WAN) links so that replicated data can make its way throughout the organization. Documenting your subnets, sites, and links is quite probably the most important inventory you can have for a geographically‐dispersed domain.

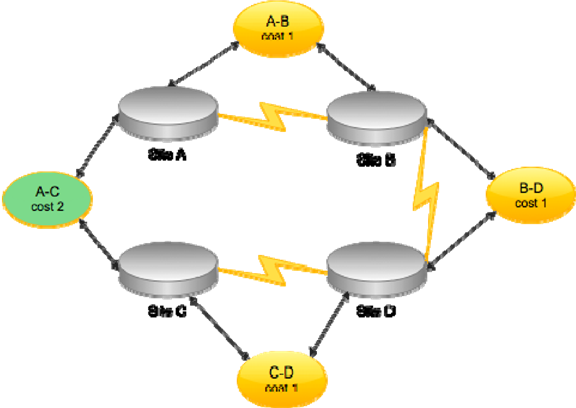

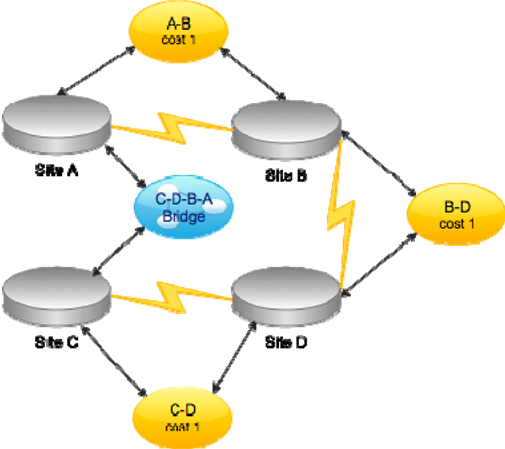

Typically, you'll have site links that represent the physical WAN connectivity between sites. A cost can be applied to each link, indicating its relative expense. For example, if two sites are connected by a high‐speed WAN link and a lower‐speed backup link, the backup link might be given a higher cost to discourage its use by AD under normal conditions. As Figure 1.5 shows, you can also create site links that represent a virtual connection. The A‐C link connects two sites that do not have direct WAN connectivity. This isn't necessarily a best practice, as it tells AD to expect WAN connectivity where none in fact exists.

Figure 1.5: Configuring site links.

Eliminating the A‐C site link will not hinder AD operations: The directory will correctly determine the best path for replication. For example, changes made in Site C would replicate to D, then to B, and eventually to A. If Site C were the source of many changes (perhaps a concentration of administrators work there), you could speed up replication from there to Site A by creating a site link bridge, effectively informing AD of the complete path from C to A by leveraging the existing A‐B, B‐D, and C‐D site links. Such a bridge accurately reflects the physical WAN topology but provides a higher‐priority route from C to A. Figure 1.6 shows how you might document that.

Figure 1.6: Configuring a site link bridge.
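The path reasoning above (C to D, then B, then A) can be sketched as a lowest‐total‐cost search over the documented site links. This is an illustration of the idea only, not AD's actual replication topology algorithm: the costs are invented, the `cheapest_route` helper is hypothetical, and the search is a plain Dijkstra shortest‐path over the A‐B, B‐D, and C‐D links named in the text.

```python
import heapq

# Site links from the example, without the A-C link; costs are made up
SITE_LINKS = {("A", "B"): 100, ("B", "D"): 100, ("C", "D"): 100}


def cheapest_route(links, source, target):
    """Return (total_cost, [sites...]) for the cheapest path, or None."""
    graph = {}
    for (x, y), cost in links.items():
        graph.setdefault(x, []).append((y, cost))
        graph.setdefault(y, []).append((x, cost))
    queue = [(0, source, [source])]
    seen = set()
    while queue:
        total, site, path = heapq.heappop(queue)
        if site == target:
            return total, path
        if site in seen:
            continue
        seen.add(site)
        for neighbor, cost in graph.get(site, []):
            if neighbor not in seen:
                heapq.heappush(queue, (total + cost, neighbor, path + [neighbor]))
    return None


print(cheapest_route(SITE_LINKS, "C", "A"))  # (300, ['C', 'D', 'B', 'A'])
```

Adding a lower‐cost entry for a backup link, or removing a link entirely, and rerunning the search is a cheap way to sanity‐check the costs you've written down before you change anything in the directory.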

As you document your sites, think again about numbers: How many computers are in each site? How many users? Make a notation of these numbers, along with a notation of how many DCs exist at each site.

Sites should, as much as possible, reflect the physical reality of your network; they don't correspond to the logical structure of the domain in any way. One site may contain DCs from several domains or forests, and any given domain may easily span multiple sites. However, site links are kind of a part of the domain's logical structure because those links are defined within the directory itself. If you have multiple domains, it's worth building a diagram (like Figure 1.5 or 1.6) for each domain, even if they look substantially the same. In fact, any group of domains that spans the same physical sites should have identical‐looking site diagrams because the physical reality of your network isn't changing. Going through the exercise of creating the diagrams will help ensure that each domain has its links and bridges configured properly.

DNS

The last critical piece of your inventory consists of your DNS servers. You should clearly document where each server physically sits and think about which clients it serves. Most companies have at least two DNS servers, although having more (and distributing them throughout your network) can provide better DNS performance to distant clients. AD absolutely cannot function without DNS, so it's important that both servers and clients have ready access to a high‐performance DNS server. Most AD problems are rooted in DNS issues, meaning much of our troubleshooting discussion will be about DNS, and that discussion will be more meaningful if you can quickly locate your DNS servers on your network.

Also try to make some notation of which users, and how many users, utilize each DNS server either as a primary, secondary, or other server. That will help give you an at‐a‐glance view of each DNS server's workload, and give you an idea of which users are relying on a particular server.

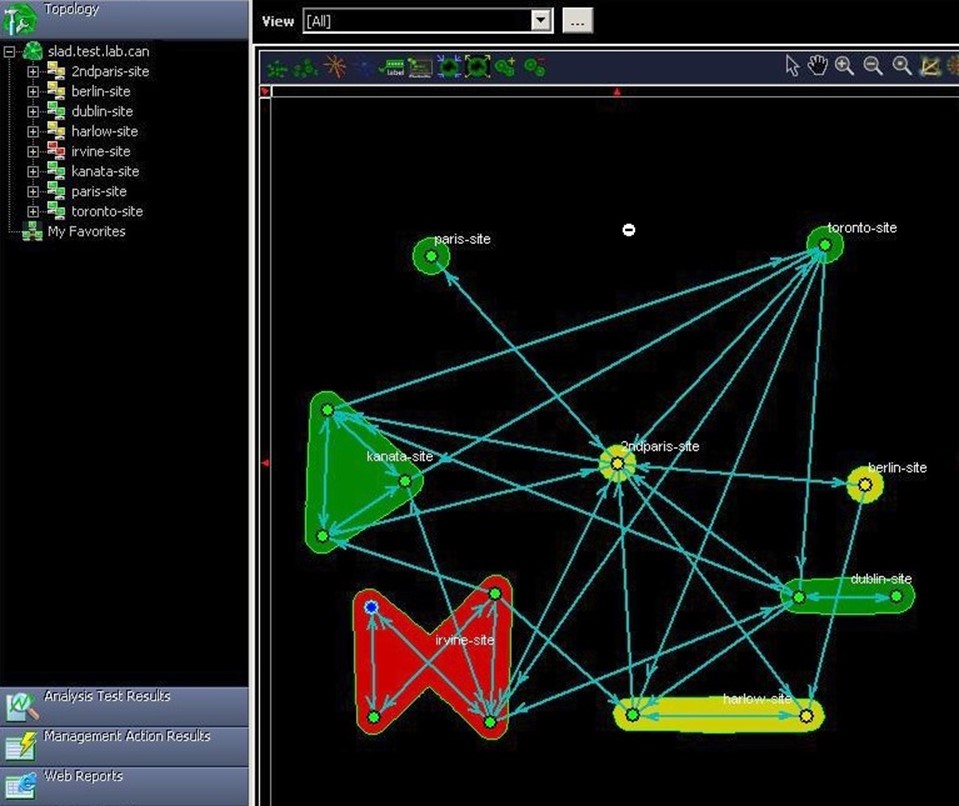

Putting Your Inventory into Visual Form

A tool like Microsoft Office Visio is often utilized to create AD infrastructure diagrams, often showing both the logical structure (domains, forests, and trusts) and the physical topology (subnets, sites, links, and so forth). There are also third‐party tools that can automatically discover your infrastructure elements and create the appropriate charts and diagrams for you. The benefit of such tools is that they're always right because they're reflecting reality, not someone's memory of reality. They can usually catch changes and create updated diagrams much faster and more accurately than you can.

I love to use those kinds of tools in combination with my own hand‐drawn diagrams. If the tool‐generated picture of my topology doesn't match my own picture, I know I've got a problem, and that can trigger an investigation and a change, if needed.

What's Ahead

Let's wrap up this brief introduction with a look at what's coming up in the next seven chapters.

AD Troubleshooting

Chapters 2 and 3 will concern themselves primarily with troubleshooting. In Chapter 2, we'll focus on the ways and means of monitoring AD, including native event logs, system tools, command‐line tools, network monitors, and more. I'll also present desirable capabilities available in third‐party tools (both free and commercial), with a goal of helping you to build a sort of "shopping list" of features that may support troubleshooting, security, auditing, and other needs.

Chapter 3 will focus on troubleshooting, including techniques for narrowing the problem domain, addressing network issues, resolving name resolution problems, dealing with AD service issues, and more. We'll also look at replication, AD database failures, Group Policy issues, and even some of the things that can go wrong with Kerberos. I'll present this information in the form of a troubleshooting flowchart that was developed by a leading AD Most Valuable Professional (MVP) award recipient, and walk you through the tools and tasks necessary to troubleshoot each kind of problem.

I'll wrap up this book with more troubleshooting, devoting Chapter 8 to additional troubleshooting tips and tricks.

AD Security

In Chapter 4, we'll dive into and discuss the base architecture for AD security. We'll look more at the issue of distributed permissions management, and discuss some of the problems that it presents, along with some of the advantages it offers. We'll look at some do‐it‐yourself tools for centralizing permissions changes and reporting, and explore whether you should rethink your AD security design. We'll also look at third‐party capabilities that can make security management easier, and dive into the little‐understood topic of DNS security.

AD Auditing

Chapter 5 will cover auditing, discussing AD's native auditing architecture and looking at how well that architecture helps to meet modern auditing requirements. I'll also present capabilities that are offered by third‐party tools and how well those can meet today's business requirements and goals.

AD Best Practices

Chapter 6 will be a roundup of best practices for AD, including a quick look at whether you should reconsider your current AD domain and forest design (and, if you do, how you can migrate to that new design with minimum risk and effort). We'll also look at best practices for disaster recovery, restoration, security, replication, FSMO placement, DNS design, and more. I'll present new ideas for virtualizing your AD infrastructure, and look at best practices for ongoing maintenance.

Monitoring Active Directory

The fact is that you can't really do anything with Active Directory (AD) unless you have some way of figuring out what's going on under the hood. That's what this chapter will be all about: how to monitor AD. I have to make a distinction between monitoring and auditing: Monitoring, which we'll cover here, is primarily done to keep an eye on functionality and performance, and to solve functional and performance problems when they arise. Auditing is an activity designed to keep an eye on what people are doing with the directory—exercising permissions, changing the configuration, and so forth. We have chapters on auditing lined up for later in this book.

Monitoring Goals

There are really two reasons to monitor AD. The first is because there's some kind of problem that you're trying to solve. In those cases, you're usually interested in current information, delivered in real‐time, and you're not necessarily interested in storing that data for more than a few moments. That is, you want to see what's happening right now. You also usually want to focus in on specific data, such as that related to replication, user logon performance, or whatever you're troubleshooting.

The second reason to monitor is for trending purposes. That is, you're not looking at a specific problem but instead collecting data so that you can spot potential problems. You're usually looking at a much broader array of data because you don't have anything specific that you need to focus on. You're also usually interested in retaining that data for a potentially long time so that you can detect trends. For example, if user logon workload is slowly growing over time, storing monitoring data and examining trends—perhaps in the form of charts—allows you to spot that growing trend, anticipate what you might need to do about it, and get it done.
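As a rough illustration of what trending buys you, the sketch below fits a straight line to a series of stored samples and estimates how many more sampling periods remain before the workload reaches a capacity you've chosen. Everything here is an assumption for the sake of the example: the weekly peak logon numbers, the capacity figure, and the helper names are invented, and a simple least‐squares line is only one of many ways to look at a trend.

```python
def linear_trend(samples):
    """Least-squares slope and intercept for evenly spaced samples.
    Requires at least two samples."""
    n = len(samples)
    xs = range(n)
    mean_x = sum(xs) / n
    mean_y = sum(samples) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, samples))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def periods_until(samples, capacity):
    """Estimated periods after the last sample until the fitted line
    reaches capacity; None if the trend is flat or falling."""
    slope, intercept = linear_trend(samples)
    if slope <= 0:
        return None
    crossing = (capacity - intercept) / slope
    return max(0.0, crossing - (len(samples) - 1))


# Hypothetical weekly peak logons per hour handled by one site's DCs
weekly_peak_logons = [1000, 1040, 1080, 1120, 1160]
print(periods_until(weekly_peak_logons, capacity=1400))  # 6.0
```

With only a few moments of real‐time data you could never make this kind of estimate, which is why the retention period matters so much for trending tools.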

Having these goals in mind as we look at some of the available tools is important. Some tools excel at offering real‐time data but are poor at storing data that would provide trending information. Other tools might be great at storing information for long‐term trending but aren't as good at providing highly‐detailed, very‐specific, real‐time information for troubleshooting purposes. So as we look at these tools, we'll try to identify which bits they're good at.

Another thing to keep in mind before we jump in is that some of these tools are actually foundational technologies. In other words, when we discuss event logs, you have to keep in mind that that technology is a tool that you can use—and it's a foundation that other tools use. Any strengths or weaknesses present in that technology are going to carry through to any tools that use that technology. So again, it's simply important to recognize such considerations because they'll have an impact beyond that specific tool.

Event Logs

Windows' native event logs play a crucial role in monitoring AD. The event logs aren't great, but they're the place where AD sends a decent amount of diagnostic and auditing information, so you have to get used to using them.

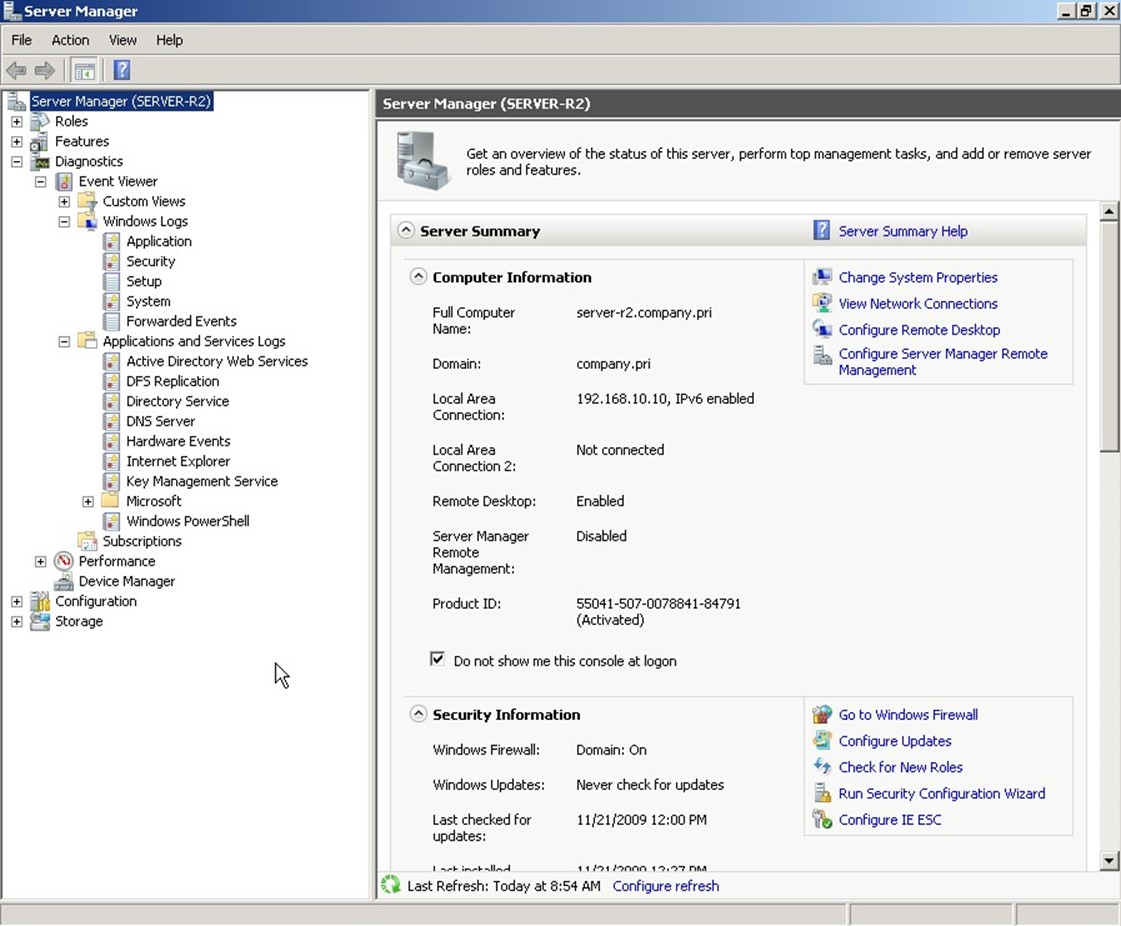

There's a bit of a distinction that needs to be made: The event log is a native Windows data store. The Event Viewer is the native tool that enables you to look at these logs. Event logs themselves are also accessible to a wide variety of other tools, including Windows PowerShell, Windows Management Instrumentation (WMI), and numerous third‐party tools. In Windows Server 2008 and later, these logs' Viewer is accessible through the

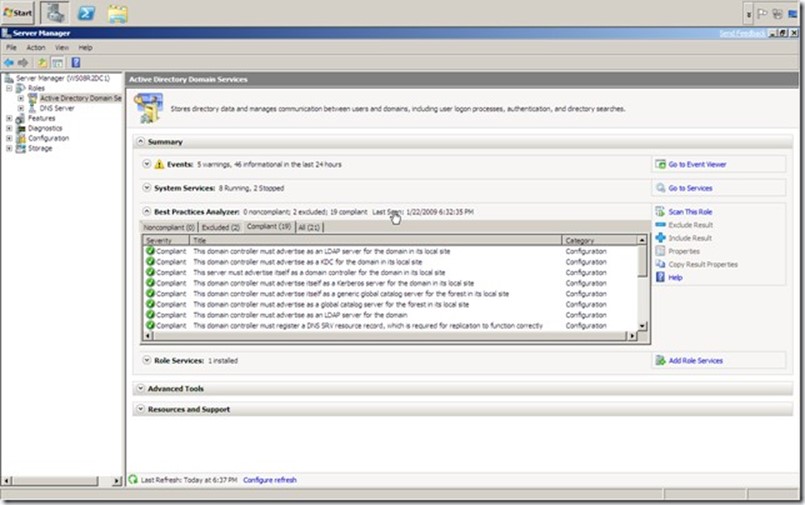

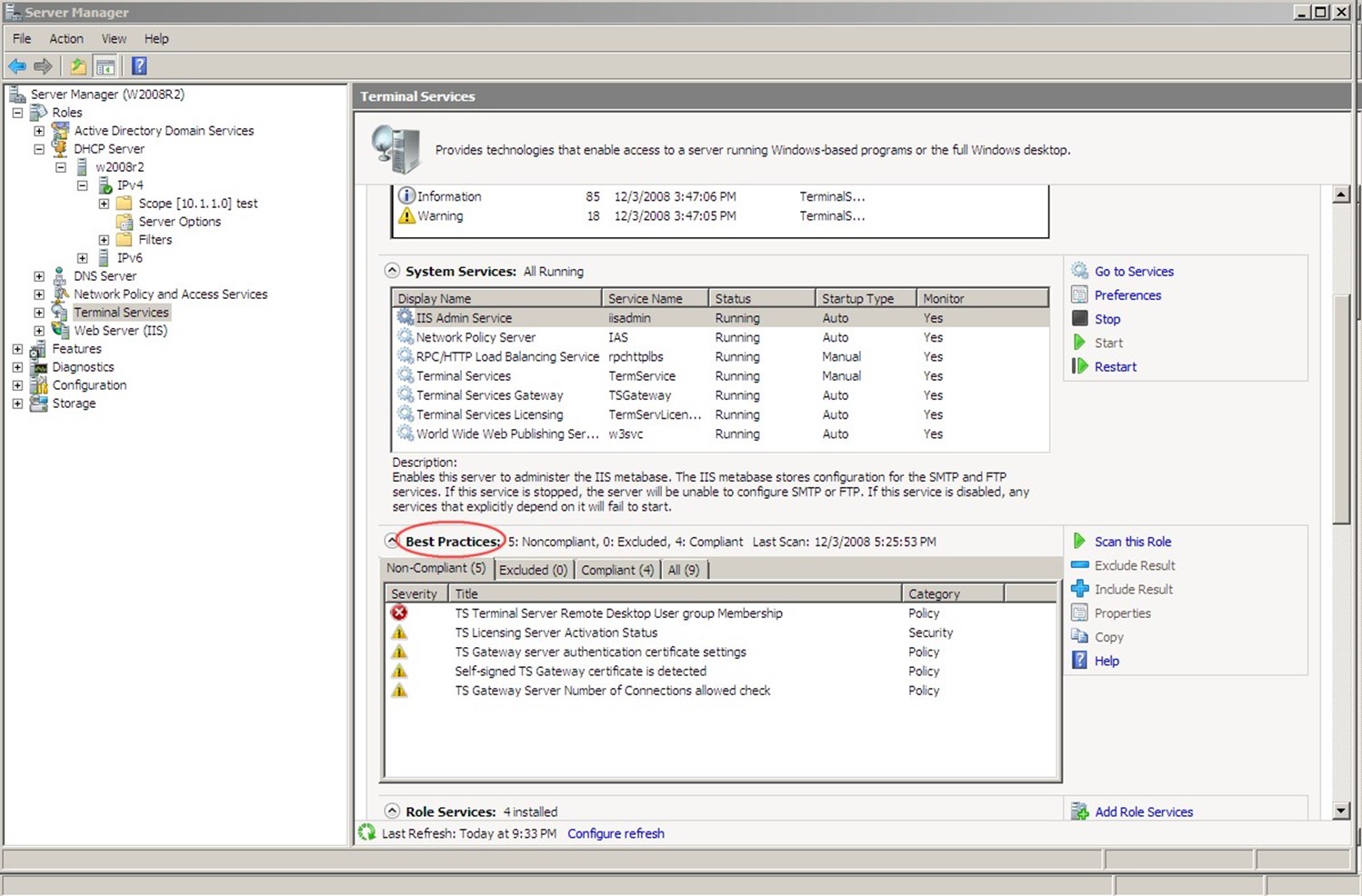

Server Manager console, which Figure 2.1 shows.

Figure 2.1: Accessing event logs in Server Manager.

There are two kinds of logs. The Windows Logs are the same basic logs that have been around since the first version of Windows NT. Of these, Active Directory (AD) writes primarily to the Security log (auditing information) and the System log (diagnostic information). In Windows Server 2008, a new kind of log, Applications and Services Logs, was introduced. These supplement the Windows Logs by giving each application the ability to create and write to its own log rather than dumping everything into the Application log, as was done in the past. In these new logs, AD creates an Active Directory Web Services log, DFS Replication log, Directory Service log, and DNS Server log. Technically, DFS and DNS aren't part of AD, but they do integrate with and support AD, so they're important to look at.

Windows itself also creates numerous logs under the Microsoft folder, as Figure 2.1 shows: GroupPolicy, DNS Client Events, and a few others, all of which can offer clues into AD's operation and performance. Don't forget that client computers play a role in AD, as well. Logs for NTLM, Winlogon, DNS Client, and so forth can all provide useful information when you're troubleshooting an AD problem.
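Because the logs are a data store rather than a single tool, you can also pull events out of them from a script. The sketch below builds a command line for `wevtutil`, the command‐line event log utility included with Windows Server 2008 and later, and wraps it in a small helper. Treat it as an assumption‐laden example: the helper names are hypothetical, the default levels and event count are arbitrary, and the query only runs on a Windows machine where the named log exists.

```python
import subprocess

# Level values used in the event XPath filter:
# 1 = Critical, 2 = Error, 3 = Warning, 4 = Information


def build_query(log_name, levels=(1, 2, 3), max_events=20):
    """Build a wevtutil argument list for the newest events at the given levels."""
    level_filter = " or ".join(f"Level={n}" for n in levels)
    return [
        "wevtutil", "qe", log_name,
        f"/q:*[System[({level_filter})]]",  # XPath filter on the event level
        f"/c:{max_events}",                 # maximum number of events to return
        "/rd:true",                         # read newest events first
        "/f:text",                          # plain-text output
    ]


def read_events(log_name, **options):
    """Run the query (Windows only) and return the events as text."""
    result = subprocess.run(
        build_query(log_name, **options),
        capture_output=True, text=True, check=True,
    )
    return result.stdout


print(build_query("Directory Service", levels=(2, 3), max_events=5))
```

On a domain controller, calling `read_events("Directory Service")` would return the most recent critical, error, and warning events from that log; the same helper could be pointed at the DNS Server or DFS Replication logs by changing the name.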

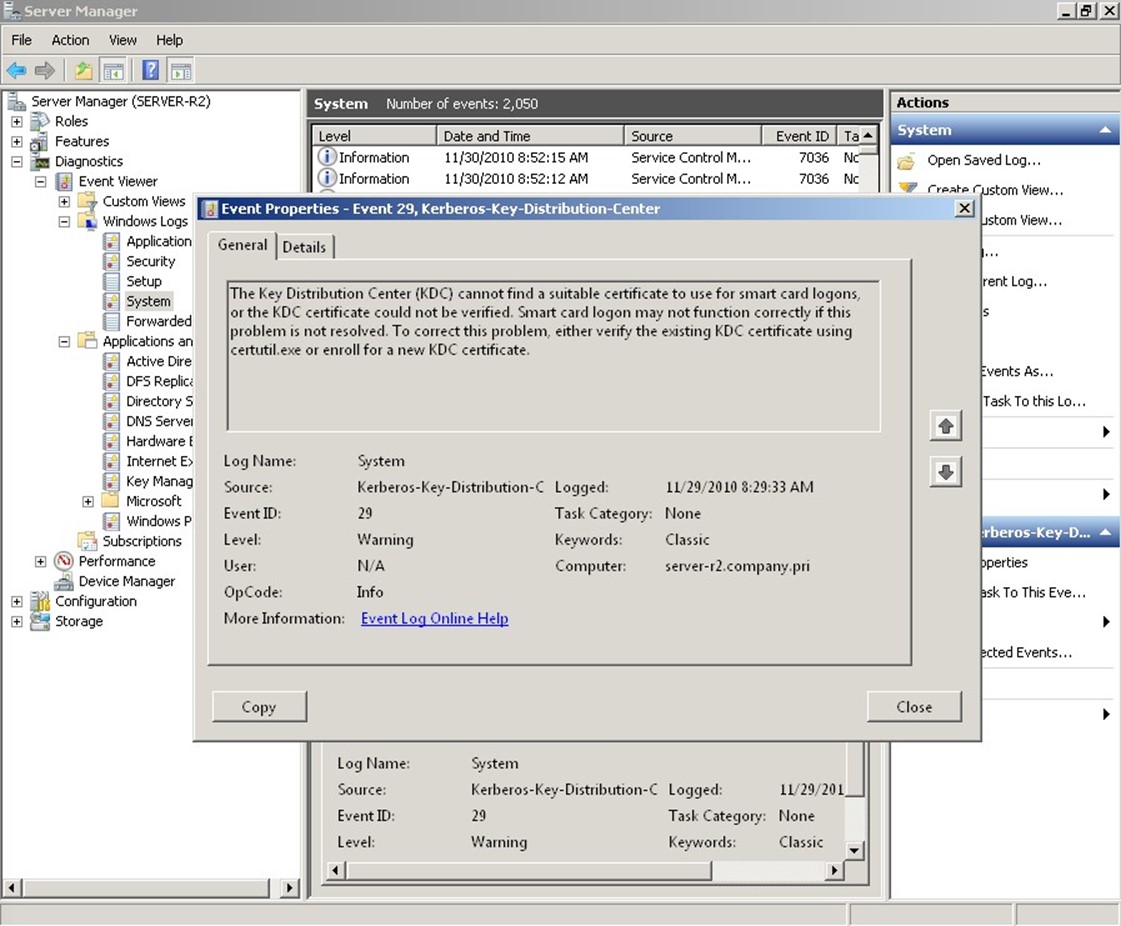

Although the event logs can contain a wealth of information, their usefulness can be hit or miss. For example, the event that Figure 2.2 shows is pretty clear: Smart card logons aren't working because there isn't a certificate installed. My domain doesn't use smart card logons, so this is expected and doesn't present a problem.

Figure 2.2: Helpful events.

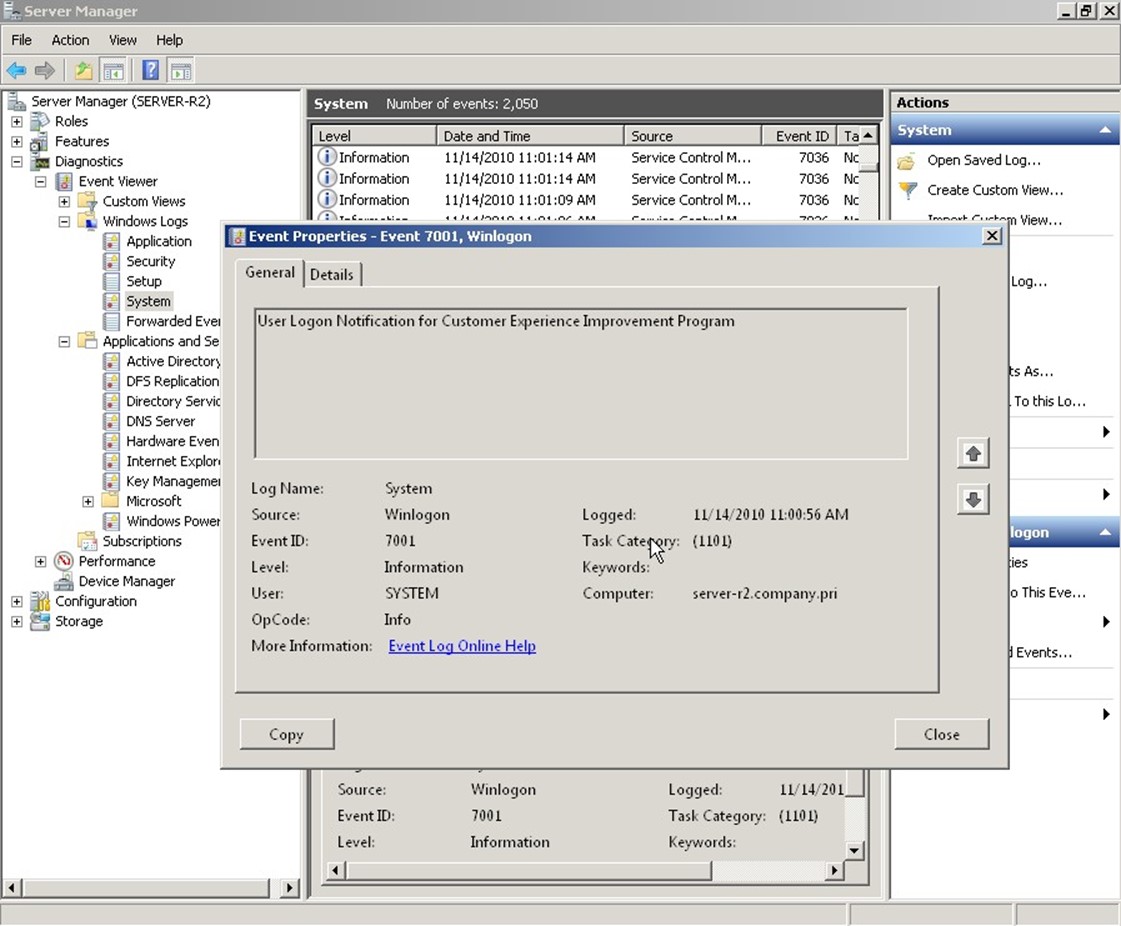

Other events just constitute "noise," such as the one shown in Figure 2.3: User Logon Notification for Customer Experience Improvement Program. Huh? Why do I care?

Figure 2.3: "Noise" events.

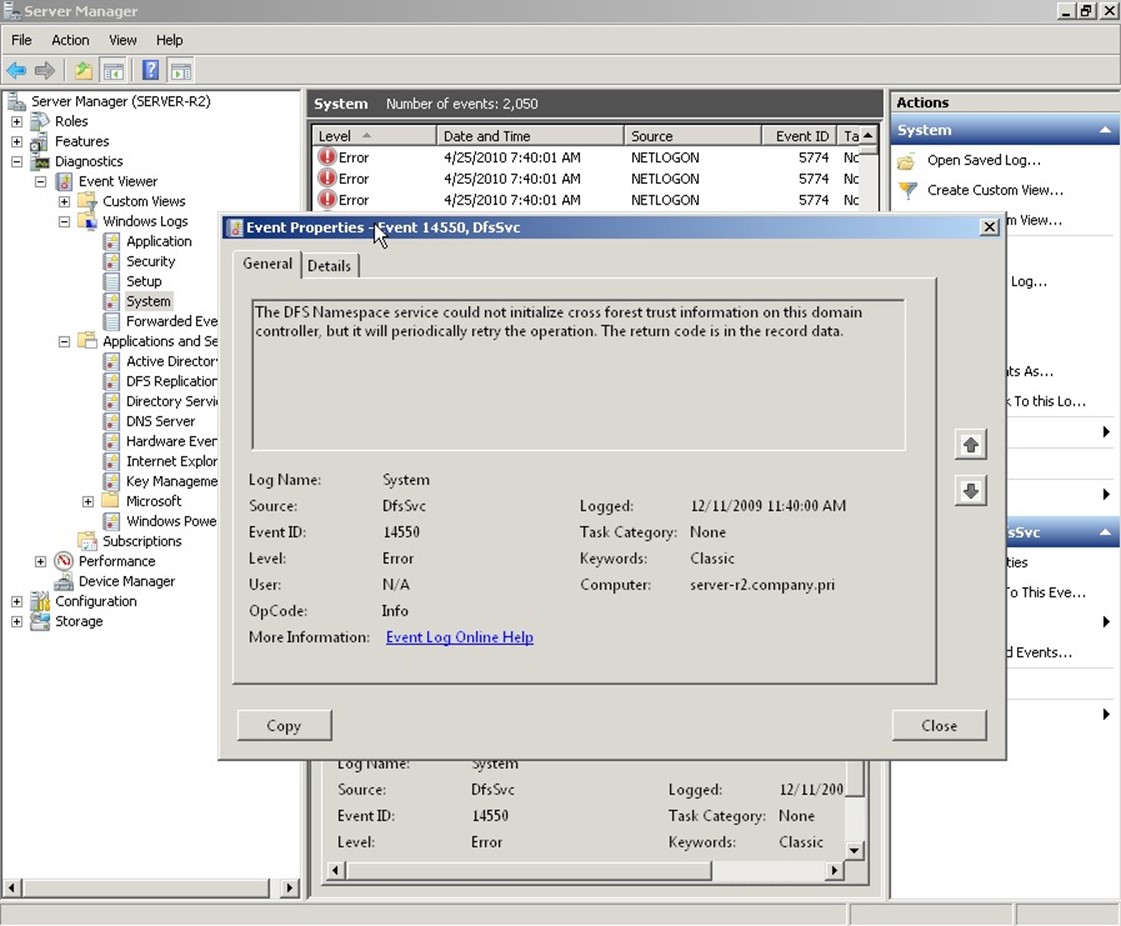

Then you've got winners like the one shown in Figure 2.4. This is tagged as an actual error, but it doesn't tell me much—and it doesn't give many clues about how to solve the problem or even if I need to worry about it.

Figure 2.4: Unhelpful events.

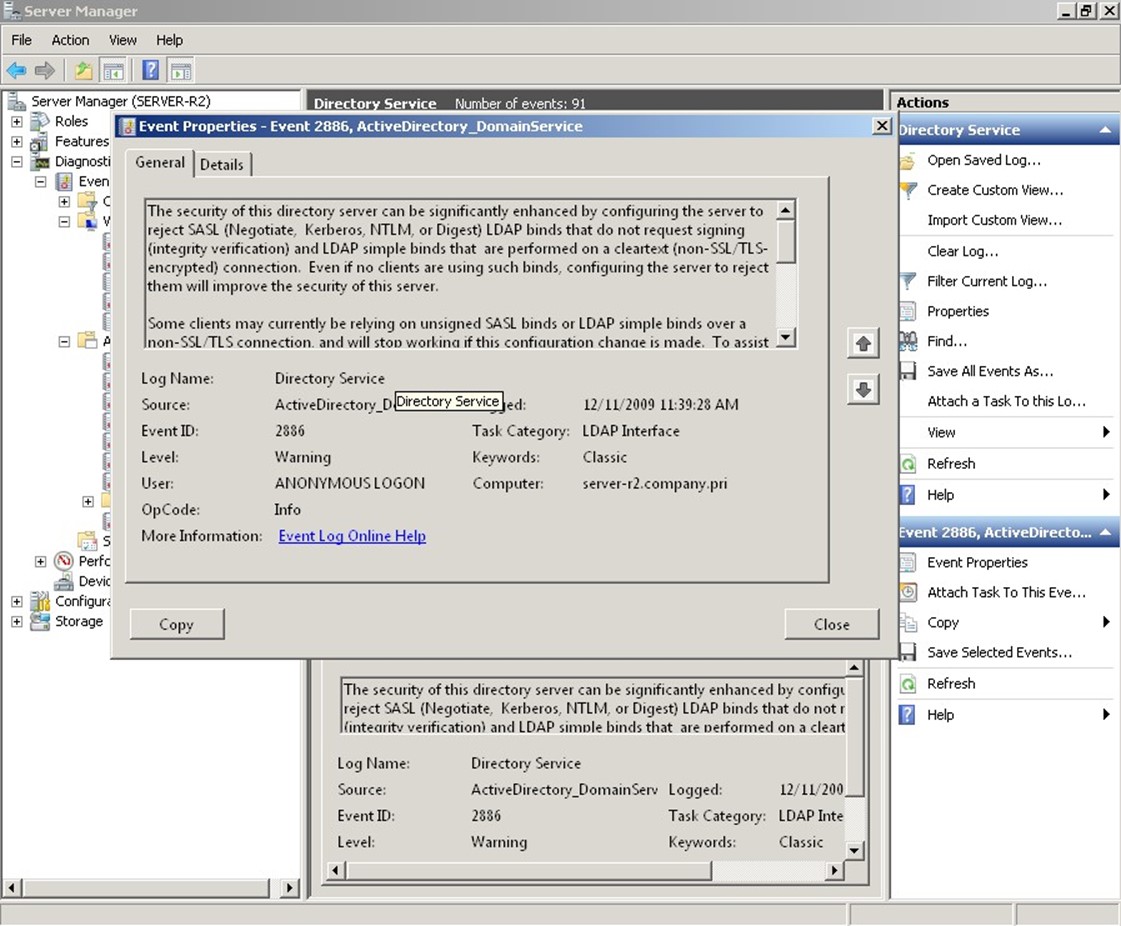

It's probably going too far to call this event "useless," but this event is certainly not very helpful. Finally, as shown in Figure 2.5, sometimes the event logs will include suggestions. That's nice, but is this the best place to put these? They create more "noise" when you're trying to track down information related to a specific problem, and they're tagged as Warnings (so you tend to want to look at them, just in case they're warning you of a problem), but they can often be ignored.

Figure 2.5: Suggestions, not "events."

There probably isn't an administrator alive who hasn't spent a significant amount of time in Google hunting down the meaning behind—and resolution for—dozens of event IDs over the course of their careers. That reality highlights key problems of the native event logs:

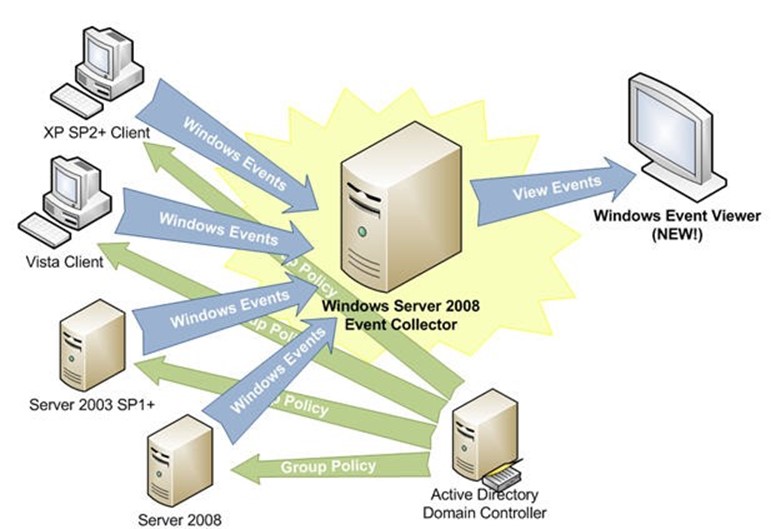

- They're not centralized. Although you can configure event forwarding, it's pretty painful to get all of your domain controllers' logs into a single location. That means your diagnostic information is spread across multiple servers, giving you multiple places to search when you're trying to solve a problem.

- They're not always very clear. Confusing, vague, or obtuse messages are what the event logs are famous for. Although Microsoft has gradually improved that over the years in some instances, there are still plenty of poor examples in the logs.

- They're full of noise. Worse, you can't rely on the "Information," "Warning," and "Error" tags. Sometimes, an "Information" event will give you the clue you need to solve a problem, and "Warning" events—as we've seen—can contain information that is not trouble‐related.

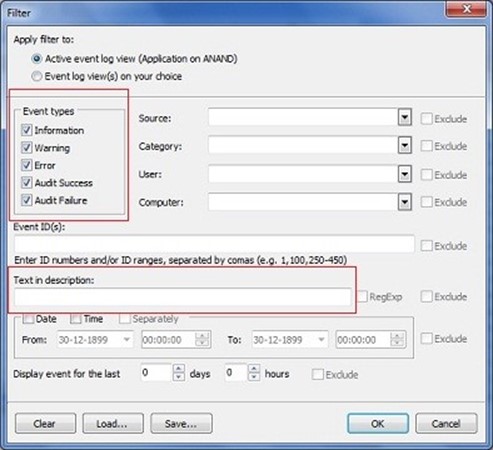

- The native Viewer tool offers poor filtering and searching capabilities, and no correlation capability. That is, it can't help you spot related events that might point to a specific problem or solution.

Problems notwithstanding, you have to get used to these logs because they're the only place where AD and its various companions log any kind of diagnostic information when problems occur.
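To make the filtering and correlation gap concrete, here is a minimal Python sketch of what a consolidation tool does with events once they're in one place. Everything in it is an assumption for illustration: the server names, event IDs, and messages are invented, and the records are presumed to have already been exported from each domain controller into a simple list.

```python
from collections import defaultdict

# Hypothetical records, as if exported from several domain controllers.
# Server names, event IDs, and messages are invented for illustration.
EVENTS = [
    {"server": "DC1", "level": "Warning", "event_id": 1001,
     "message": "Suggestion: consider enabling feature X"},
    {"server": "DC1", "level": "Error", "event_id": 2002,
     "message": "Replication attempt failed"},
    {"server": "DC2", "level": "Information", "event_id": 3003,
     "message": "User Logon Notification"},
    {"server": "DC2", "level": "Error", "event_id": 2002,
     "message": "Replication attempt failed"},
    {"server": "DC3", "level": "Information", "event_id": 4004,
     "message": "Replication partner changed"},
]


def filter_events(events, ignore_ids=(), keywords=()):
    """Drop known-noise event IDs and keep events matching any keyword.

    The level tag is deliberately not used as a filter, because an
    "Information" event can hold the clue you need.
    """
    kept = []
    for event in events:
        if event["event_id"] in ignore_ids:
            continue
        text = event["message"].lower()
        if keywords and not any(k.lower() in text for k in keywords):
            continue
        kept.append(event)
    return kept


def group_by_event_id(events):
    """Correlate: show which servers logged each event ID."""
    grouped = defaultdict(set)
    for event in events:
        grouped[event["event_id"]].add(event["server"])
    return {eid: sorted(servers) for eid, servers in grouped.items()}


related = filter_events(EVENTS, ignore_ids={1001, 3003}, keywords=["replication"])
print(group_by_event_id(related))
```

Seeing the same event ID on two domain controllers at once is exactly the kind of relationship the native Viewer won't point out for you.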

System Monitor/Performance Monitor

Also located in Server Manager is Performance Monitor, the native GUI‐based tool used to view Windows' built‐in performance counters. Any domain controller will contain numerous counter sets related to directory services, including several DFS‐related categories, DirectoryServices, DNS, and more. These are designed to provide the focused, real‐time information you need when you're troubleshooting specific problems (typically, although not necessarily, performance problems). Although Performance Monitor can create logs containing performance data collected over a long period of time, it's not a great tool for doing so. More on that in a bit.

It's difficult to give you a fixed list of counters that you should always look at; any of them might be useful when you're troubleshooting a specific problem. That said, there are a few that are useful for monitoring AD performance in general:

- DRA Inbound Bytes Total/Sec shows inbound replication traffic. If it's zero, there's no replication, which is generally a problem unless you have only one domain controller.

- DRA Inbound Object Updates Remaining in Packet provides the number of directory objects that have been received but not yet applied. This number should always be low on average, although it may spike as replicated objects arrive. If it remains high, your server isn't processing updates quickly.

- DRA Outbound Bytes Total/Sec offers the data being sent from the server due to replication. Again, unless you've got only one domain controller, this will rarely be zero in a normal environment.

- DRA Pending Replication Synchronization shows the number of directory objects waiting to be synchronized. This may spike but should be low on average.

- DS Threads in Use provides the number of process threads currently servicing clients. Continuously high numbers suggest a need for a larger number of processor cores to run those threads in parallel.

- Kerberos Authentications offers a basic measure of authentication workload.

- LDAP Bind Time shows the number of milliseconds that the last LDAP bind took to complete. This should be low on average; if it remains high, the server isn't keeping up with demand.

- LDAP Client Sessions is another basic unit of workload measurement.

- LDAP Searches/Sec offers another good basic unit of workload measurement.

All of these counters benefit from trending, as they all help you form a basic picture of how busy a domain controller is. In other words, it's great when you can capture this kind of data on a continuous basis, then view charts to see how it changes over time. Performance Monitor itself isn't a great tool for doing that because it simply wasn't designed to collect weeks and weeks' worth of data and display it in any meaningful way. However, it can be suitable for collecting data for shorter periods of time (say, a few hours), then using the collected data to get a sense of your general workload.

You'll have to do that monitoring on each domain controller, too, because the performance information is local to each computer. Ideally, each domain controller's workload will be roughly equal. If they're not, start looking at things like other tasks the computer is performing, or the computer's hardware, to see why one domain controller seems to be working harder than others.
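Several of the counters above share the same rule of thumb: a spike is fine, a sustained high value is not. A rough Python sketch of that distinction follows. The sample values, the 50‐millisecond threshold, and the "more than half the samples" rule are all assumptions I've picked for illustration, not Microsoft guidance.

```python
from statistics import mean


def summarize(samples, threshold):
    """Return the average, the peak, and the share of samples above threshold."""
    above = sum(1 for value in samples if value > threshold)
    return {
        "average": mean(samples),
        "peak": max(samples),
        "fraction_above": above / len(samples),
    }


def is_sustained(samples, threshold, max_fraction=0.5):
    """Treat the counter as a problem only if most samples exceed the threshold."""
    return summarize(samples, threshold)["fraction_above"] > max_fraction


# Hypothetical LDAP Bind Time samples (milliseconds) from two domain controllers.
dc1 = [5, 6, 4, 120, 5, 7, 6, 5]            # one spike, otherwise low
dc2 = [80, 95, 110, 90, 85, 100, 105, 98]   # consistently high

for name, samples in (("DC1", dc1), ("DC2", dc2)):
    print(name, summarize(samples, threshold=50), is_sustained(samples, threshold=50))
```

Running the same summary against each domain controller's samples also gives you a quick way to see whether their workloads really are roughly equal.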

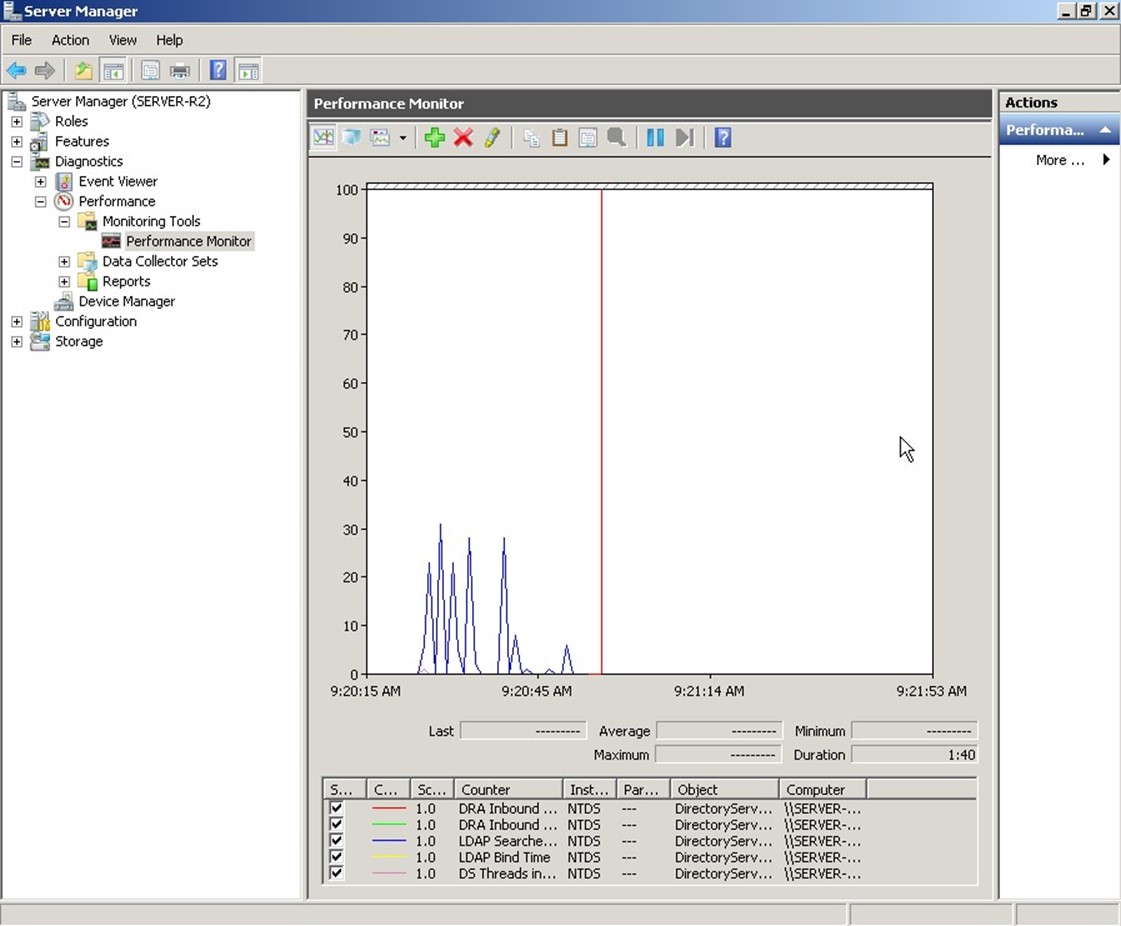

This kind of performance monitoring is one of the biggest markets for third‐party tools, which we'll discuss toward the end of this chapter. Using the same underlying performance counters, third‐party tools (as well as additional, commercial tools from Microsoft) can provide better performance data collection, storage, trending, and reporting—and can even do a better job of sending alerts when performance data exceeds pre‐set thresholds. What Performance Monitor is good at—as Figure 2.6 shows—is enabling you to quickly view real‐time data when you're focusing on a specific problem.

Figure 2.6: Viewing real‐time performance data in Performance Monitor.

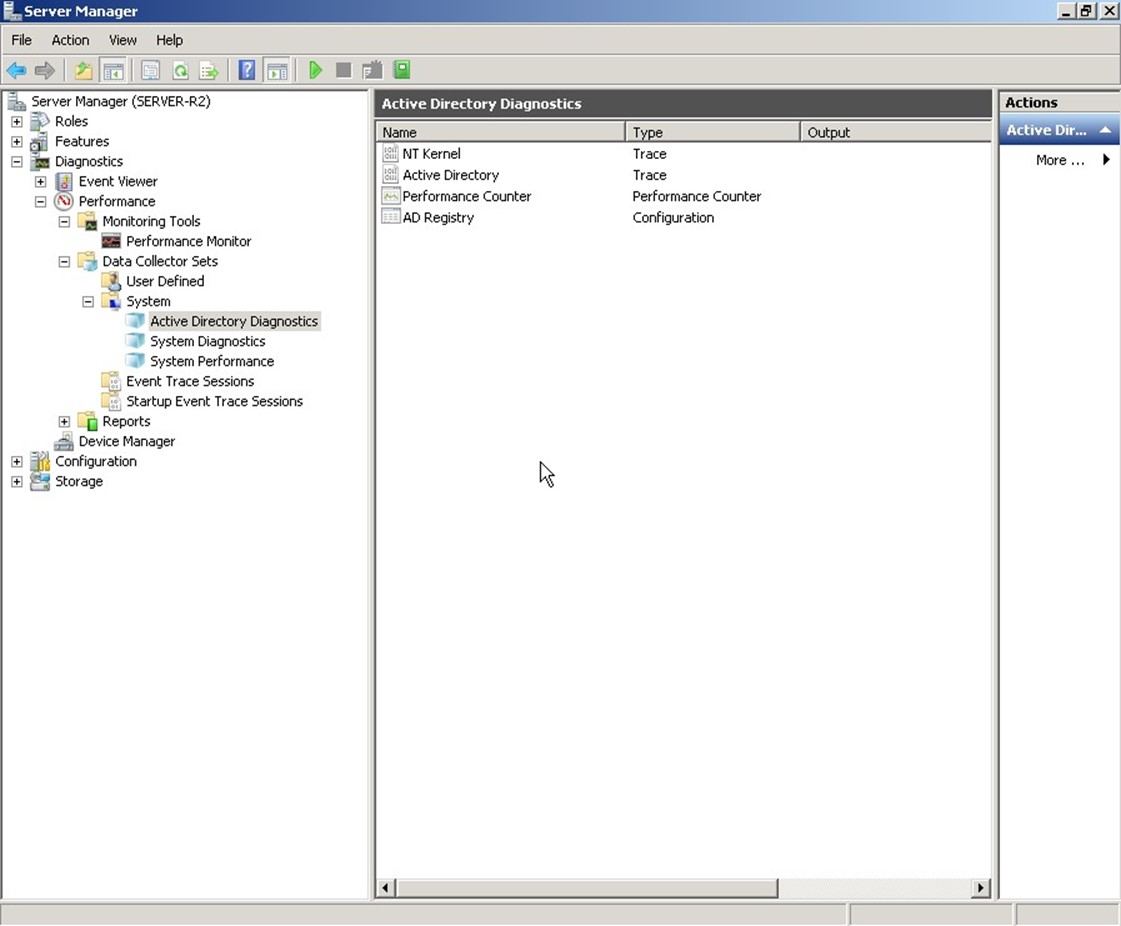

One problem we should identify, though, is that Performance Monitor requires a good deal of knowledge on your part to be useful. First, you have to make sure you're looking at all the right counters at the right time. Looking at DS Threads alone is useless unless you're also looking at some other counters to tell you why all those threads are, or are not, in use. In other words, you have to be able to mentally correlate the information from many counters to get an accurate assessment of how AD is really performing. Microsoft helps by providing predefined data collector sets, which can include not only counters but also trace logs and configuration changes. One is provided for AD diagnostics (see Figure 2.7).

Figure 2.7: The AD Diagnostics data collector set.

Once you start a collector set, you can let it run for however long you like. Results aren't displayed in real‐time; instead, you have to view the latest report, which is a snapshot. These sets are designed to run for longer periods of time than a normal counter trace log, and the sets' configuration includes settings for managing the collected log size. Figure 2.8 shows an example report.

Figure 2.8: Viewing a data collector set report.

These reports do a decent job of applying some intelligence to the underlying data. As you can see here, a "green light" icon lets you know that particular components are performing within Microsoft's recommended thresholds. That "intelligence" doesn't extend far, though: Once you start digging into AD‐specific stuff, you're still looking at raw data, as you can see in the section on Replication that's been expanded in Figure 2.8. Thus, you'll still need a decent amount of expertise to interpret these reports and determine whether they represent a problem condition.

Command‐Line Tools

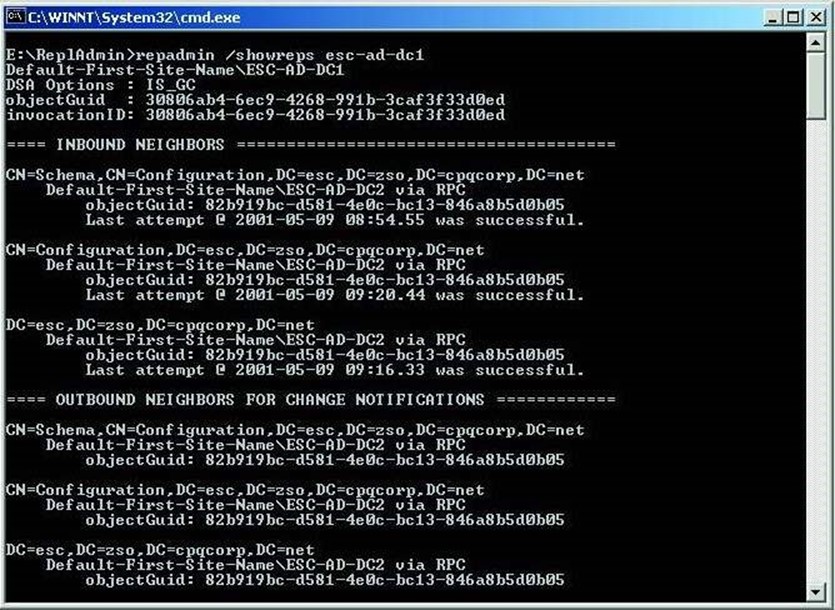

A host of command‐line tools can help detect AD problems or provide information needed to solve those problems. This chapter isn't intended to provide a comprehensive list of them, but one of the more well‐known and useful ones is Repadmin. This tool can be used to check replication status and diagnose replication problems. For example, as Figure 2.9 shows, this tool can be used to check a domain controller's replication neighbors, which is a way of checking on your environment's replication topology. You'll also see whether any replication attempts with those neighbors have succeeded or failed.

Figure 2.9: Using Repadmin to check replication status.

This—and other command‐line tools—are great for checking real‐time status information. What they're not good at is collecting information over the long haul, or for running continuously and proactively alerting you to problems.
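One way to stretch a command‐line tool a little further is to have a script read its output. The Python sketch below picks out replication links that report failures from CSV text of the sort Repadmin can produce with its /csv option. The column names and the sample rows are my assumptions; check them against the header line your own output actually contains.

```python
import csv
import io


def failing_links(csv_text):
    """Return (destination, source, naming context, failures) for failing links."""
    reader = csv.DictReader(io.StringIO(csv_text))
    problems = []
    for row in reader:
        failures = int(row["Number of Failures"] or 0)
        if failures > 0:
            problems.append(
                (row["Destination DSA"], row["Source DSA"],
                 row["Naming Context"], failures)
            )
    return problems


# Invented sample data; real output has more columns than shown here.
SAMPLE = """Destination DSA,Source DSA,Naming Context,Number of Failures
DC1,DC2,"DC=example,DC=com",0
DC1,DC3,"DC=example,DC=com",4
"""

for link in failing_links(SAMPLE):
    print(link)
```

A script like this still only gives you a snapshot. Scheduling it and keeping the results over time is where you start rebuilding, by hand, what a monitoring product does for you.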

Network Monitor

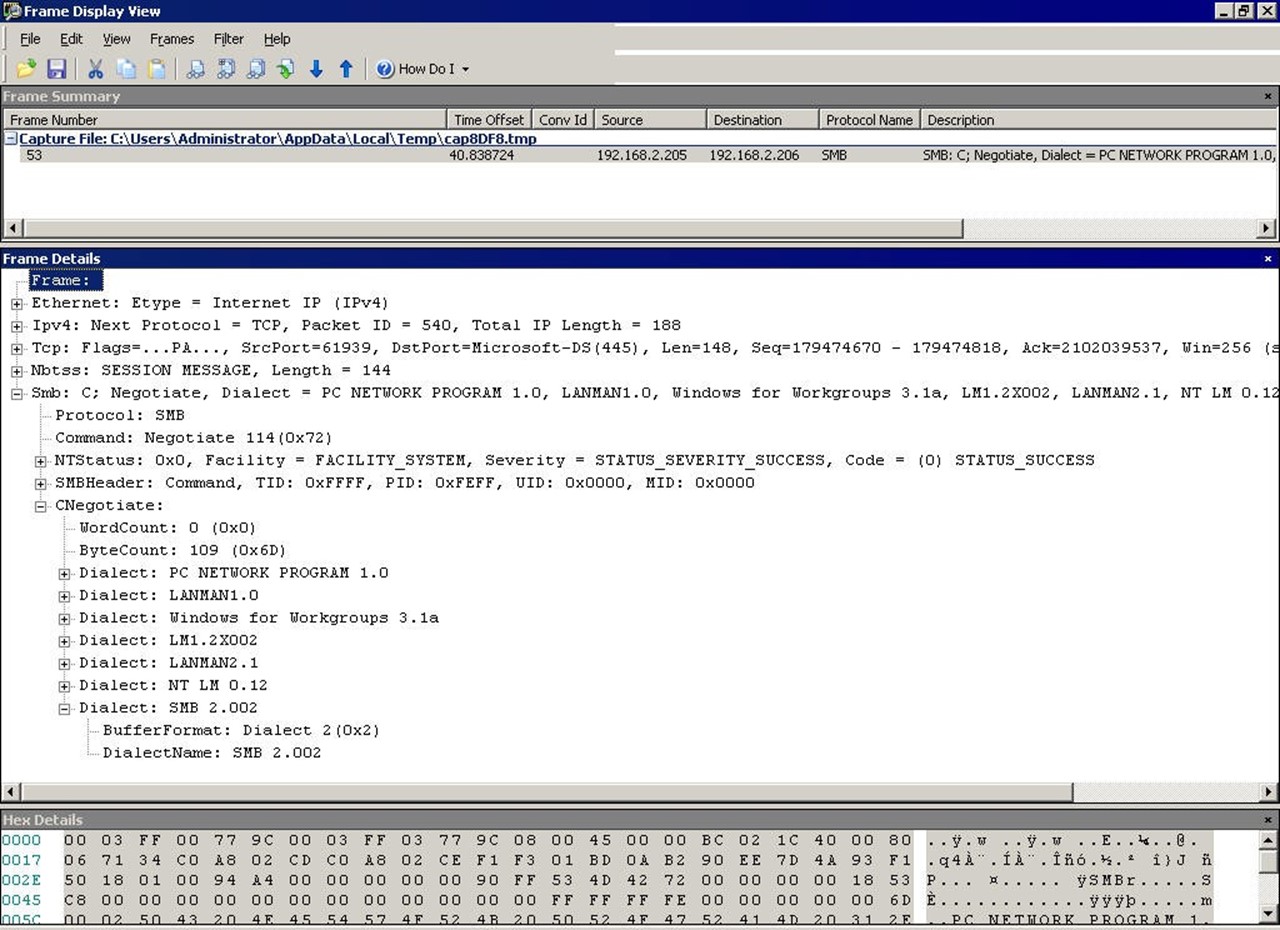

You might not ordinarily think of Network Monitor—or any packet‐capture tool, including Wireshark and others—as a way of monitoring AD. In fact, with a lot of practice, they can be great tools. After all, much of what AD does ultimately comes down to network communications, and with a packet capture tool, you can easily see exactly what's transpiring over the network. Figure 2.10 illustrates the main difficulty in using these tools.

Figure 2.10: Captured AD traffic in Network Monitor.

You see the problem, right? This is rocket science‐level stuff. I'm showing a captured packet for directory services traffic, but unless you know what this traffic should look like, it's impossible to tell whether this represents a problem. But gaining that knowledge is worth the time: I've used tools like this to find problems with DNS, Kerberos, time sync, and numerous other AD‐related issues. Unfortunately, a complete discussion of these protocols, how they work, and what they should look like is far beyond the scope of this book.

At a simpler level, though, you can use packet capture tools as a kind of low‐level workload monitor. For example, consider Figure 2.11.

Figure 2.11: Capturing traffic in Network Monitor.

Ignoring the details of the protocol, pay attention to the middle frame. At the top of the packet list, you can see a few LDAP search packets. This gives me an idea of what kind of workload the domain controller is receiving, where it's coming from, and so forth. If I know a domain controller is overloaded, this can be the start of the process to discover where the workload is originating. In this case, it might be a new application submitting poorly constructed LDAP queries to the directory.
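If you export a capture's packet summaries, finding out where the LDAP workload originates is a simple tally. Here's a minimal Python sketch under that assumption. The field names, addresses, and summary text are invented, and a real export from Network Monitor or Wireshark will be laid out differently.

```python
from collections import Counter

# Hypothetical packet summaries; fields and addresses are invented.
PACKETS = [
    {"src": "10.0.1.15", "protocol": "LDAP", "summary": "Search Request"},
    {"src": "10.0.1.15", "protocol": "LDAP", "summary": "Search Request"},
    {"src": "10.0.2.20", "protocol": "DNS", "summary": "Query"},
    {"src": "10.0.1.15", "protocol": "LDAP", "summary": "Search Request"},
    {"src": "10.0.2.20", "protocol": "LDAP", "summary": "Search Request"},
    {"src": "10.0.2.20", "protocol": "LDAP", "summary": "Bind Request"},
]


def top_ldap_searchers(packets, n=3):
    """Count LDAP search requests per source address, busiest first."""
    counts = Counter(
        p["src"]
        for p in packets
        if p["protocol"] == "LDAP" and "search request" in p["summary"].lower()
    )
    return counts.most_common(n)


print(top_ldap_searchers(PACKETS))
```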

System Center Operations Manager

System Center Operations Manager is Microsoft's commercial offering for monitoring both performance and functionality in AD as well as in numerous other Microsoft products and Windows subsystems. SCOM, as it's affectionately known, utilizes both performance counters and other data feeds much as Windows' native tools do. What sets SCOM apart are two things:

- Data is stored for a long period of time, enabling trending and other historical tasks

- Data is compared with a set of Microsoft‐provided thresholds, packaged into Management Packs, that tell you when data represents a good, bad, or "going bad" condition

That last bit enables SCOM to more proactively alert you to performance conditions that are trending bad, and to then show you detailed real‐time and historical data to help troubleshoot the problem. In many cases, Management Packs can include prescriptive advice for failure conditions, helping you to troubleshoot and solve problems more rapidly. As a tool, SCOM addresses most, if not all, of the weaknesses in the native Windows toolset. It does so by relying primarily on native technologies, and it does so in a way that often imposes less monitoring overhead than some of the native tools. Having SCOM collect performance data for a month, for example, is a lot easier on the monitored server than running Performance Monitor continuously on that server. SCOM does, however, require its own infrastructure of servers and other dependencies, so it adds some complexity to your environment.
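The good, bad, and "going bad" idea is easy to picture with a little arithmetic. The Python sketch below is not how SCOM or its Management Packs work internally. It's just an illustration that fits a straight line to recent samples and asks whether the projected value would cross a limit. The limit, the projection horizon, and the sample values are all assumptions.

```python
def slope(values):
    """Least-squares slope of the values against their sample index."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    numerator = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


def classify(values, limit, horizon=10):
    """Return 'bad', 'going bad', or 'good' for a series of at least two samples."""
    latest = values[-1]
    if latest > limit:
        return "bad"
    projected = latest + slope(values) * horizon
    return "going bad" if projected > limit else "good"


# Hypothetical counter samples with a limit of 30.
print(classify([10, 12, 14, 16, 18], limit=30))   # steadily climbing
print(classify([10, 11, 10, 9, 10], limit=30))    # flat
print(classify([10, 20, 35], limit=30))           # already over the limit
```

The point is that the "going bad" call needs history, which is why long‐term data storage matters as much as the thresholds themselves.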

Unfortunately, one of SCOM's greatest strengths—its ability to monitor a wide variety of products and technologies from a single console—is also a kind of weakness because it doesn't offer a lot of technology‐specific functionality. For example, SCOM isn't a great way to construct an AD replication topology map because that's a very AD‐specific capability that wouldn't be used by any other product. In other words, SCOM is a bit generic. Although it can provide great information, and good prescriptive advice, it isn't necessarily the only tool you'll need to troubleshoot every problem. SCOM can alert you to most types of problems (such as an unacceptably high number of replication failures), but it can't always help you visualize the underlying data in the most helpful way.

Third‐Party Tools to Consider

I'm not normally a fan of pitching third‐party products, and I'm not really going to do so here. That said, we've identified some weaknesses in the native tools provided with Windows. Some of those weaknesses are addressed by SCOM, but because that tool itself is a commercial add‐on (that is, it doesn't come free with Windows), you owe it to yourself to consider other add‐on commercial tools that might address the native tools' weaknesses in other ways, or perhaps at a different price point. That said, what are some of the weaknesses that we're trying to address?

Weaknesses of the Native Tools

Although I think Microsoft has provided some great underlying technologies in things like event logs and performance counters, the tools they provide to work with those are pretty basic. In order to decide if a replacement tool is suitable, we need to see if it can correct these weaknesses:

- Non‐centralized—Windows' tools are per‐server, and when you're talking about AD, you're talking about an inherently distributed system that functions as a single, complicated unit. We need tools that can bring diagnostic and performance information together into a single place.

- Raw data—Windows' tools really just provide GUI access to underlying raw data, either in the form of events or performance counters or whatever. That's really suboptimal. What we want is something to translate that data into English, tell us what it means, and possibly provide intelligence around it—which is a lot of what SCOM offers, really.

- Limited data—Windows' tools collect the information available to them through native diagnostic and performance technologies—and that's it. There are certainly instances when we might want more data, especially more‐specific data that deals with AD and its unique issues.

- Generic—Windows' tools are pretty generic. The Event Viewer and Performance Monitor, for example, aren't AD‐specific. But an AD‐specific tool could go a long way in making both monitoring and troubleshooting easier because it could present information in a very AD‐centric fashion.

Ways to Address Native Weaknesses

There are a few ways that vendors work to address these weaknesses:

- Centralization—Bringing data together into one place is almost the first thing any vendor seeks to address when building a toolset. Even Microsoft did this with SCOM.

- Intelligence—Translating raw data into processed information—telling us if something is "good" or "bad," for example—is one way a tool can add a great deal of value. Prescriptive advice, such as providing advice on what a particular event ID means and what to do about it, is also useful. This kind of built‐in "knowledge base" is a major selling point for some tool sets.

- More data—Some tools either supplement or bypass the native data stores and collect more‐detailed data straight from the source. This might involve tapping into LDAP APIs, AD's internal APIs, and so forth.

- Task‐specific—Tools that are specifically designed to address AD monitoring can often do so in a much more helpful way than a generic tool can. Replication topology maps, data flow dashboards, and so forth all help us focus on AD's specific issues.

Vendors in this Space

There are a lot of players in this space. A lot a lot. Some of the major names include:

- Quest

- ManageEngine

- Microsoft

- Blackbird Management Group

- NetIQ

- IBM

- NetPro (which was purchased by Quest)

Most of these vendors offer tools that address native weaknesses in a variety of ways. Some utilize underlying native technologies (event logs, performance counters, and so forth) but gather, store, and present the data in different ways. Others bypass these native technologies entirely, instead plugging directly into AD's internals to gather a greater amount of information, different information, and so forth.

In addition, there are a number of smaller tools out there that have been produced by the broader IT community and smaller vendors. A search engine is a good way to identify these, especially if you have specific keywords (like "replication troubleshooting") that you can punch into that search engine.

AD LDS

Chapter 7 gives me an opportunity to cover additional information: AD's smaller cousin, Active Directory Lightweight Directory Services (AD LDS). We'll look at what it is, when to use it, when not to use it, and how to troubleshoot and audit this valuable service.

Active Directory Troubleshooting: Tools and Practices

For the most part, in most organizations, Active Directory (AD) "just works." Over the past 10 years or so, Microsoft has improved both AD's performance and its stability, to the point where few organizations with a well‐designed AD infrastructure experience day‐to‐day issues. That said, when things do go wrong, it can be pretty scary because a lot of us don't have day‐to‐day experience in troubleshooting AD. The goal of this chapter is to provide a structured approach to troubleshooting to help you put out those fires faster.

For this chapter, I'll be drawing a lot on the wisdom and experience of Sean Deuby, a fellow Microsoft Most Valuable Professional award recipient and a real AD troubleshooting guru. You might enjoy reading his infrequently‐updated blog at http://www.windowsitpro.com/blogs/ActiveDirectoryTroubleshootingTipsandTricks.aspx. Although he doesn't post a lot, what he does post is worth the trip.

Narrowing Down the Problem Domain

"How do you find a wolf in Siberia?" It's a question I and others have used to kick off any discussion on troubleshooting. Siberia is, of course, a huge place, and finding a particular anything—let alone a wolf—is tough. The answer to the riddle is a maxim for troubleshooting:

Build a wolf‐proof fence down the center, and then look on one side of the fence.

Troubleshooting consists mainly of tests, designed to see if a particular root cause is responsible for your problems. The answer to the riddle provides important guidance: Make sure your tests (that is, the wolf‐proof fence) can definitively eliminate one or more root causes (that is, one whole half of Siberia). Don't bother conducting tests that can't eliminate a root cause. For example, if a user can't log in, you might first check their physical network connection. Doing so definitively eliminates a potential problem (network connectivity) so that you can move on to other possible root causes. Of course, checking connectivity only eliminates one or two possible root causes; a better first test would eliminate a whole host of them. For example, checking to see whether a different user could log in might eliminate the vast majority of potential infrastructure problems, making that a better wolf‐proof fence.
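The wolf‐proof fence can be written down as a tiny selection rule: of the tests available, run the one that would rule out the most remaining suspects. The Python sketch below does just that. The list of suspects and the causes each test is presumed to eliminate are my own illustrative assumptions, not a definitive mapping.

```python
# Hypothetical root causes for "a user can't log in".
SUSPECTS = {
    "cable", "switch port", "DNS", "domain controller offline",
    "locked account", "wrong password",
}

# Hypothetical tests, each mapped to the causes it eliminates IF it passes.
TESTS = {
    "check the user's network cable": {"cable"},
    "ping a known-good IP address": {"cable", "switch port"},
    "have a different user log on from the same computer": {
        "cable", "switch port", "DNS", "domain controller offline",
    },
}


def best_test(tests, suspects):
    """Pick the test that would eliminate the most remaining suspects."""
    return max(tests, key=lambda name: len(tests[name] & suspects))


def remaining_after_pass(test_name, tests, suspects):
    """Suspects left over once the named test has passed."""
    return suspects - tests[test_name]


choice = best_test(TESTS, SUSPECTS)
print(choice)
print(sorted(remaining_after_pass(choice, TESTS, SUSPECTS)))
```

If the chosen test fails instead of passing, the wolf is on that side of the fence, and you repeat the same selection among the causes the test covers.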

Sean's Seven Principles for Better Troubleshooting

Here's where I'll repeat excellent advice Sean Deuby once offered. Follow these seven principles (which I'll explain through the filter of my own experience) and you'll be a faster, better troubleshooter in any circumstance.

- Be Logical. Pay attention to how you're attempting to solve the problem. Before you do anything, ask yourself, "What outcome do I expect from this? If I get that outcome, what does it mean? If I don't get the expected outcome, what does that mean?" Don't do anything unless you know why, and unless you can state what the follow‐up step would be.

- Remember Occam's Razor. Simply put, the simplest solution is often the correct one. Don't start rebooting domain controllers until you've checked that the user is trying the correct password.

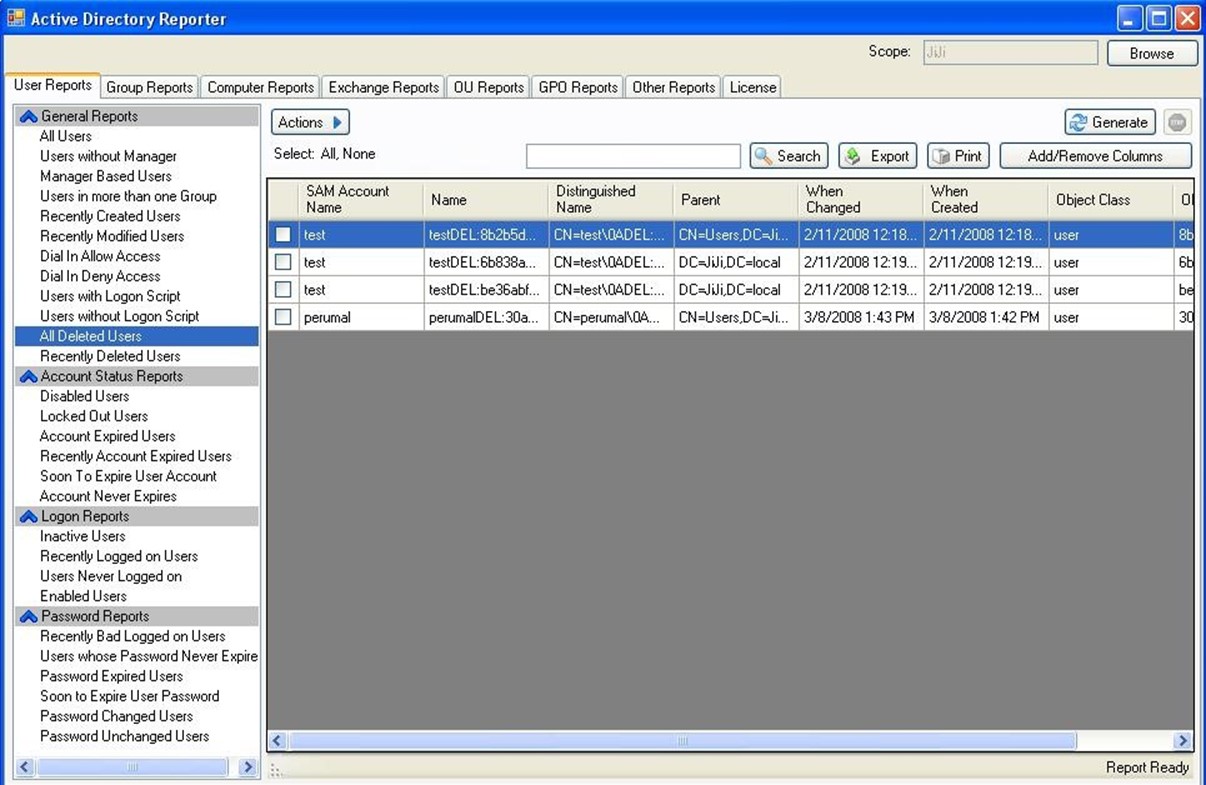

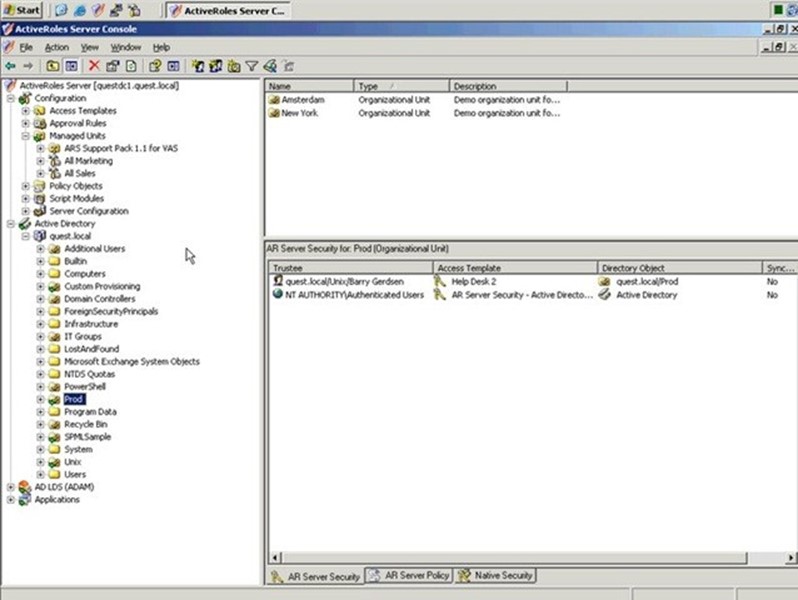

- What Changed? If everything was working fine an hour ago, what's different? This is where change auditing tools can come in handy. Although I don't specifically recommend it, I've used Quest's ChangeAuditor for Active Directory in the past because it keeps a very detailed, real‐time log of changes, and it's been a big help in solving some tricky issues. Whatever changed recently is a very likely candidate for being the root cause of your current woes.

- Don't Make Assumptions. It's easy to make assumptions, but sticking with an orderly elimination of possible causes will get you to the root cause of the problem more consistently. For example, don't assume that everything's okay with the infrastructure just because one user can log on; the problem user might be hitting a different domain controller.

- Change One Thing at a Time, and Retest. You won't get anywhere with five people attacking the problem, each one changing things as they go. You also won't get anywhere if you're changing multiple things at once. If the boss is tearing his hair out to get things fixed, remind him that you have just as much capability to further break things if you're not methodical.

- Trust, but Verify, Evidence. Sometimes an inaccurate problem description can get you going in the wrong direction—so verify everything (this goes back to not making assumptions, too). "I can't log in!" a user cries over the phone. "Log into what?" you should ask, before diving into AD problems. Maybe the user is talking about their Gmail account.

- Document Everything You Try. Especially for tough issues, documenting everything you try will help keep you from repeating steps, and will help you eliminate possible causes more easily. It's also crucial in the inevitable post‐mortem, where you and your colleagues will discuss how to keep this from happening again, or how to solve it more quickly the next time.

A Flowchart for AD Troubleshooting

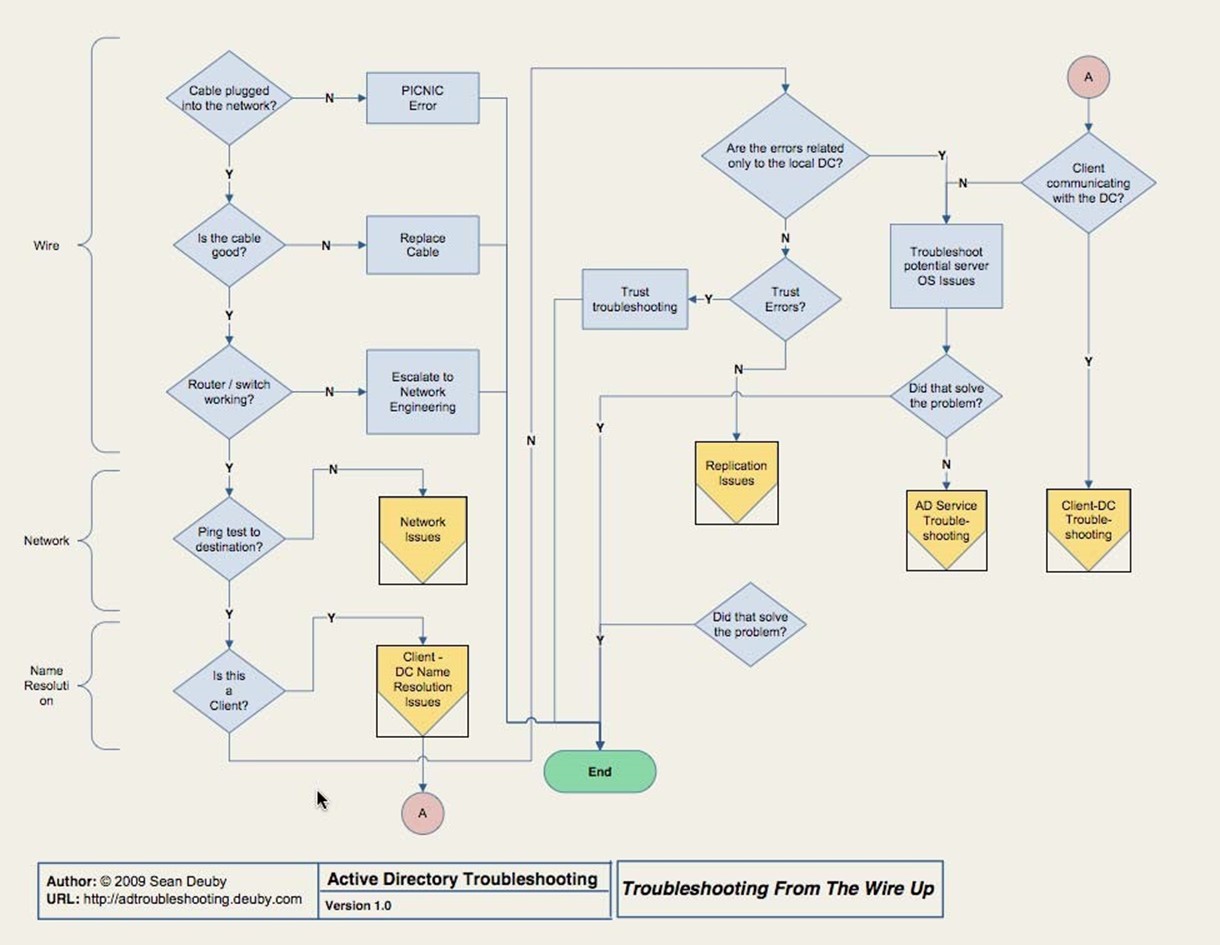

Sean has further helped by coming up with an AD troubleshooting flowchart, which I'll reprint in pieces throughout this chapter. You should check Sean's blog or Web site (which is shown at the bottom of the chart pages) for the latest revision of the flowchart. Sean's blog also offers a full‐sized PDF version, which I keep right near my desk at all times. The flowchart starts with what is shown in Figure 3.1, which is the core starting point that gets you off to the different sections of the chart.

Figure 3.1: Starting point in AD troubleshooting.

I strongly recommend that you head over to Sean's blog or Web site to download the PDF version of this flowchart for yourself. You may find a later version, which is great—it'll still start off in basically this same way.

Start in the upper‐left, with "Cable plugged into network?" and work down from there. The basics—the "wire" portion—should be things you can quickly eliminate, but don't eliminate them without actually testing them. You might, for example, attempt to ping a known‐good IP address on the network (using an IP address prevents potential DNS issues from becoming involved at this point). If that doesn't work, you've got a hardware issue of some kind to solve.

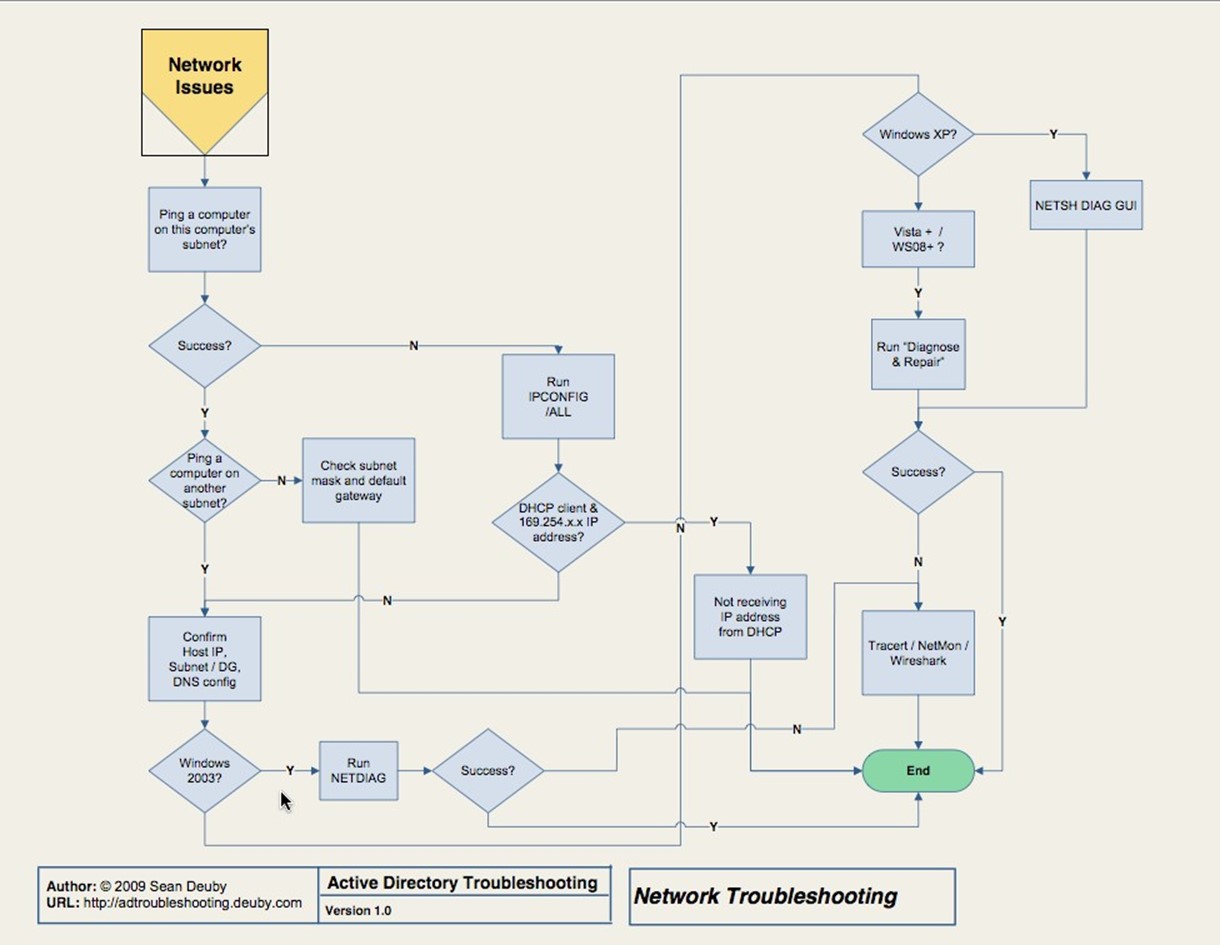

Easy Stuff: Network Issues

A ping does, of course, start to encroach on the "Network" section of the flowchart. Stick with IP addresses to this point because we're not ready to involve DNS yet. If the ping isn't successful, and you've verified the network adapter, cabling, router, and other infrastructure hardware, you're ready to move on to Figure 3.2, which is the Network Issues portion of the flowchart.

Figure 3.2: Network issues.

The tools here are straightforward, so I won't dwell on them. You'll be using ping, Ipconfig, Netdiag, and other built‐in tools. At worst, you might find yourself hauling out Wireshark or Network Monitor to actually check network packets. That's not truly AD troubleshooting, so it's out of scope for this book, but the flowchart should walk you through to a solution if this is your root cause.

Name Resolution Issues

If a ping to a different intranet subnet worked by IP address, it's time to start pinging by computer name to test name resolution. Watch the ping command's output to see if it resolves a server's name to the correct IP address. Ideally, use the name of a domain controller or two because we're testing AD problems. If ping doesn't resolve correctly, or can't resolve at all, you're ready to move into the name resolution issues.
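The name resolution half of that test has three possible outcomes: the name resolves correctly, it resolves to the wrong address, or it doesn't resolve at all. A small Python sketch of the check follows. The host names, addresses, and the stand‐in resolver are hypothetical; by default the function would use the operating system's resolver through socket.gethostbyname.

```python
import socket

# Hypothetical stand-in for DNS so the example runs anywhere.
FAKE_DNS = {
    "dc1.example.com": "10.0.0.10",
    "dc2.example.com": "10.0.9.99",   # stale record pointing at the wrong address
}


def fake_resolver(name):
    """Mimic socket.gethostbyname, including its error on unknown names."""
    try:
        return FAKE_DNS[name]
    except KeyError:
        raise socket.gaierror("name not found")


def check_name_resolution(name, expected_ip, resolver=socket.gethostbyname):
    """Report whether a name resolves, and whether it resolves correctly."""
    try:
        actual = resolver(name)
    except OSError:   # socket.gaierror is a subclass of OSError
        return "cannot resolve"
    if actual != expected_ip:
        return f"resolves to wrong address ({actual})"
    return "ok"


for host, expected in (("dc1.example.com", "10.0.0.10"),
                       ("dc2.example.com", "10.0.0.11"),
                       ("dc3.example.com", "10.0.0.12")):
    print(host, "->", check_name_resolution(host, expected, fake_resolver))
```

Knowing the expected address up front is the important part: a name that resolves to the wrong server is just as much a name resolution problem as one that doesn't resolve.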

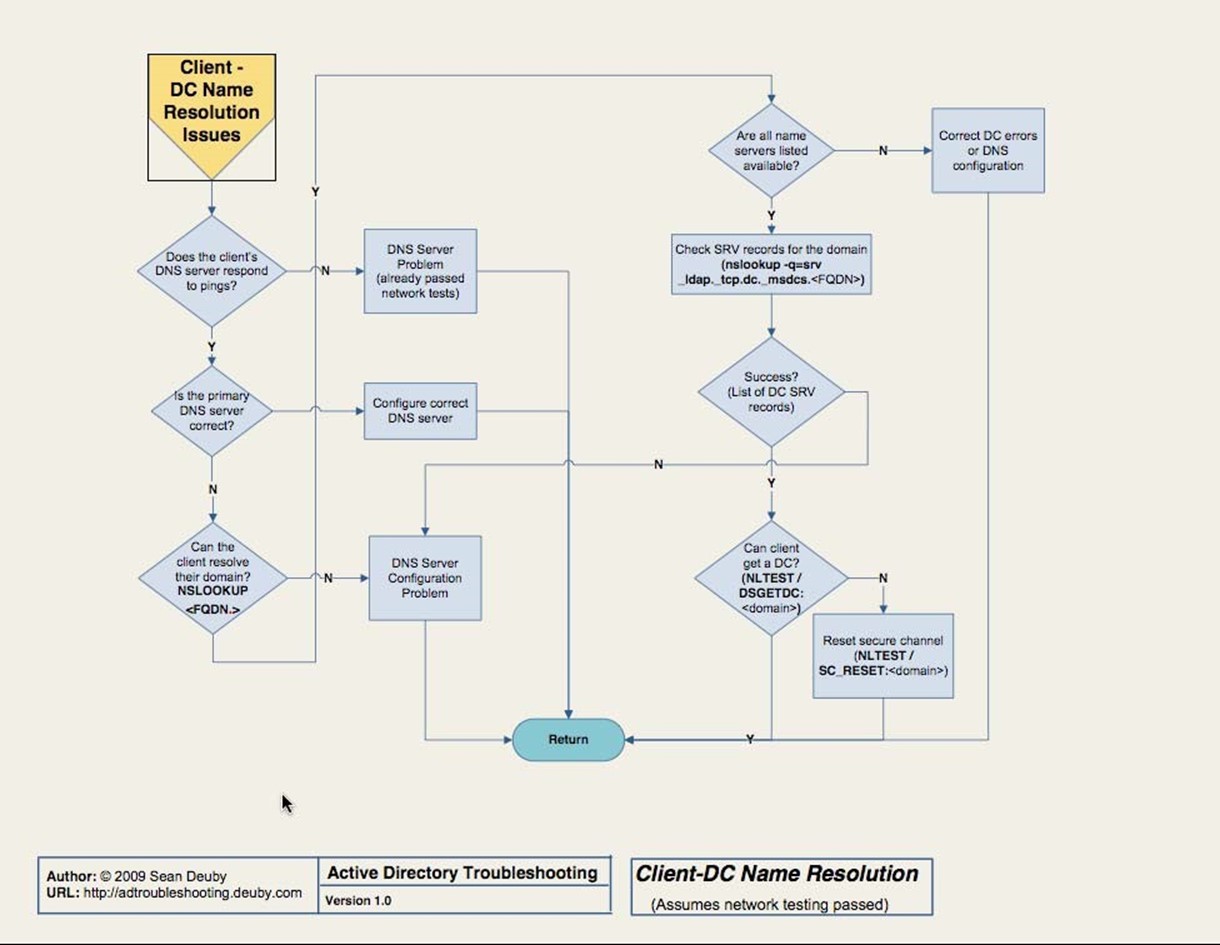

The "Client‐DC Name Resolution Issues" flowchart is designed for when you're troubleshooting connectivity from a client to a domain controller; if you're troubleshooting problems on a server, you'll skip this step and move on in the core flowchart (Figure 3.1). If you are on a client, the flowchart that Figure 3.3 shows will come into play.

Figure 3.3: Client‐DC name resolution issues.

Again, the tools for troubleshooting name resolution should be familiar to you. Primarily, you'll rely on ping and Nslookup. Of these, Nslookup might be the one you use the least, but if you're going to be troubleshooting AD, it's worth your while to get comfortable with it. The flowchart offers the exact commands you need to use, provided you know the fully qualified domain name (FQDN) of your domain (for example, Microsoft.com, whose distinguished name is dc=Microsoft,dc=com).

The other tool you'll find yourself using is Nltest, which permits you to test the client's ability to connect to a domain controller, among other things.
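As a rough illustration of what you'd type, the Python sketch below turns a domain's distinguished name into its DNS name and builds two typical command lines: an Nslookup query for the domain controller locator SRV record, and an Nltest request to find a domain controller. These are commands I'd reach for, not necessarily the exact ones printed on the flowchart, and the helper names are my own.

```python
def dn_to_dns(distinguished_name):
    """Convert 'dc=Microsoft,dc=com' into 'Microsoft.com'."""
    parts = [part.strip() for part in distinguished_name.split(",")]
    labels = [part.split("=", 1)[1] for part in parts
              if part.lower().startswith("dc=")]
    return ".".join(labels)


def dc_locator_record(domain):
    """Name of the SRV record that clients query to locate domain controllers."""
    return f"_ldap._tcp.dc._msdcs.{domain}"


def lookup_commands(domain):
    """Command lines to run by hand on the client."""
    return [
        f"nslookup -type=SRV {dc_locator_record(domain)}",
        f"nltest /dsgetdc:{domain}",
    ]


domain = dn_to_dns("dc=Microsoft,dc=com")
for command in lookup_commands(domain):
    print(command)
```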

Log Spelunking

Once name resolution is resolved, or if it isn't the problem, you have a bit of checking to do before you move on. Specifically, you're going to have to look in the System and Application event logs on the domain controllers in the client's local site (or whatever domain controller you're having a problem with, if it's just a specific one). If you find any errors, you'll have to resolve them—and they may be more specific to Windows than to AD. Don't ignore anything. In fact, that "don't ignore anything" is a huge reason I hate domain controllers that do anything other than run AD, and perhaps DNS and DHCP. I once had a domain controller that was having real issues talking to the network. There were a bunch of IIS‐related errors in the log, but I ignored those—what does IIS have to do with networking or AD, after all? I shouldn't have made assumptions: It turned out that IIS was more or less jamming up the network pipe. Shutting it down solved the problem for AD.

Having to dig through the event logs on more than one domain controller (heck, even doing it on one server) is time‐consuming and frustrating. This is where some kind of log consolidation and analysis tool can help tremendously. Get all your logs into one place, and have software that can pre‐filter the event entries to just those that need your attention. Software like Microsoft System Center Operations Manager can also help because one of its jobs is to scan event logs and call to your attention any events that require it.

If you don't see any errors specific to the domain controller or controllers, you move on. You're looking first for errors related to trusts, and if you find any, you'll need to resolve them. If you did find errors related to the domain controller or controllers, and you corrected them but that didn't solve the problem, you're moving on to AD service issues.

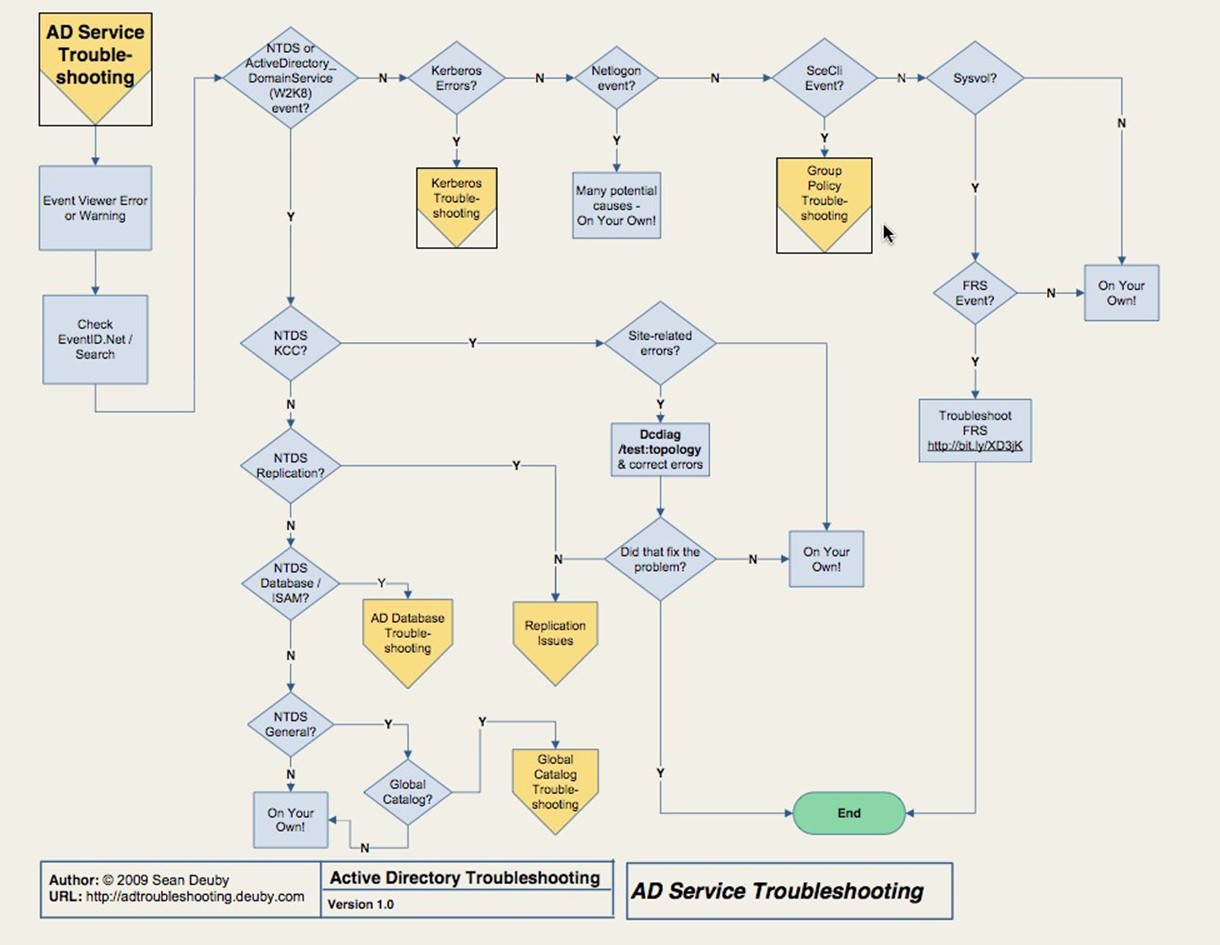

AD Service Issues

Figure 3.4 contains the AD service issue portion of the troubleshooting flowchart. Here, we've moved into the complex part of AD troubleshooting. First, of course, look in the event log for errors or warnings. Don't ignore something just because you don't understand it; you're going to have to amass knowledge about obscure AD events so that you know which ones can be safely ignored in a given situation.

This is where knowledge, more than pure data, comes in handy. Operations Manager, for example, can be extended with Management Packs that should be called Knowledge Packs. When important events pop up in the log, Ops Manager can not only alert you to them but also explain what they mean and what you can do to resolve them. NetPro made a product called DirectoryTroubleshooter that went even further, incorporating a complete knowledge base of what those events meant and how to deal with them. Sadly, the product was discontinued when the company was purchased by Quest, but Quest does offer a similar product: Spotlight on Active Directory. Again, its job is to call your attention to problematic events and provide guidance on how to resolve them.

Figure 3.4: AD service troubleshooting.

The remainder of the AD service troubleshooting flowchart helps you narrow down the potential specific AD service involved in the problem based on the error messages you find in the log. You might be looking at Kerberos, the AD database, Global Catalog (GC), Replication, or Group Policy. Along the way, you'll also troubleshoot site‐related issues and the File Replication Service (FRS). We'll pick up most of these major service issues in dedicated sections later in this chapter.

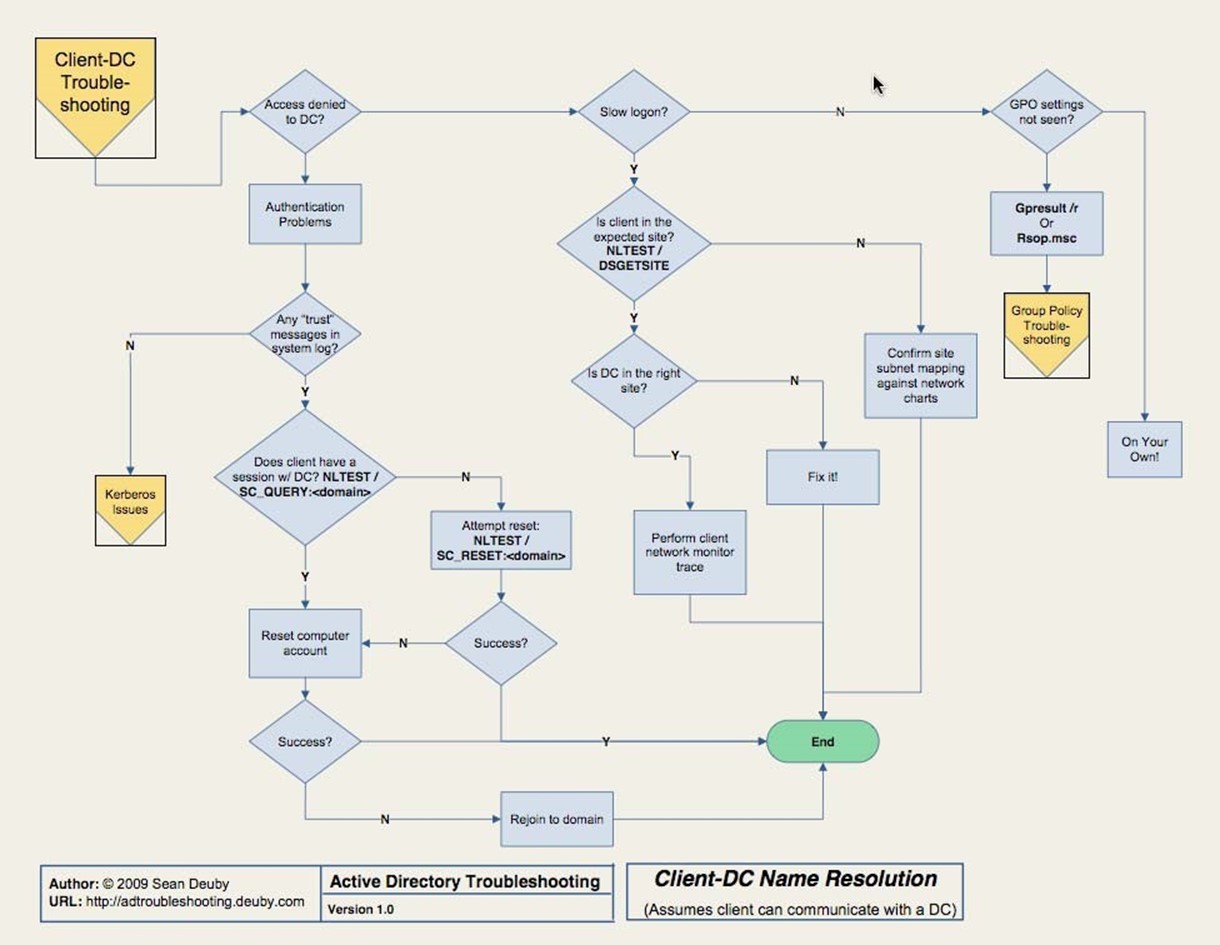

Client‐Domain Controller Issues

Assuming you resolved any client name resolution issues earlier, if you're still having problems with the client communicating with the domain controller, you'll move to the Client‐DC Troubleshooting chart, which Figure 3.5 shows.

Figure 3.5: Client‐DC troubleshooting.

Here, you'll have to personally observe symptoms. For example, are you getting "Access Denied" errors on the client, or does logon seem unusually slow for the time of day? Are you logging on but not getting Group Policy Object (GPO) settings applied? You'll rely heavily on Nltest to verify client‐domain controller connectivity and communications; you could wind up dealing with Kerberos issues, which we'll come to later in this chapter.

This is also the point where you're going to want a chart of your network so that you can confirm which domain controllers should be in which sites. You'll want that chart to also list each subnet that belongs to each site. You have to verify that reality matches the desired configuration, and don't skip any steps. It seems obvious to assume that a client was given a proper address by DHCP and is therefore in the same site; don't ever make that assumption. I once had a client that seemed to be working just fine but was in fact hanging onto an outdated IP address, making the client believe it was in a different site. The way our LAN was configured, the incorrect IP address was still able to function (we used a lot of VLAN stuff and IP addressing got incredibly confusing), but the client didn't see itself as being in the proper site—so it wouldn't talk to the right domain controller.
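Checking which site an address belongs to is mechanical once the chart is written down as data. The Python sketch below maps a client IP address to a site using the most specific matching subnet. The subnets and site names are invented, and you'd substitute the subnet‐to‐site list from your own network chart.

```python
import ipaddress

# Hypothetical subnet-to-site assignments taken from a network chart.
SITE_SUBNETS = {
    "10.1.0.0/16": "Headquarters",
    "10.1.50.0/24": "Lab",
    "10.2.0.0/16": "Branch",
}


def site_for(ip, site_subnets):
    """Return the site of the most specific subnet containing ip, or None."""
    address = ipaddress.ip_address(ip)
    best = None
    for cidr, site in site_subnets.items():
        network = ipaddress.ip_network(cidr)
        if address in network and (best is None or network.prefixlen > best[0].prefixlen):
            best = (network, site)
    return best[1] if best else None


for client_ip in ("10.1.50.7", "10.1.3.4", "192.168.1.1"):
    print(client_ip, "->", site_for(client_ip, SITE_SUBNETS))
```

A result of None is worth as much attention as a wrong site: it means the client's address isn't covered by any subnet on your chart, which is the sort of mismatch the outdated‐address story above turned out to be.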

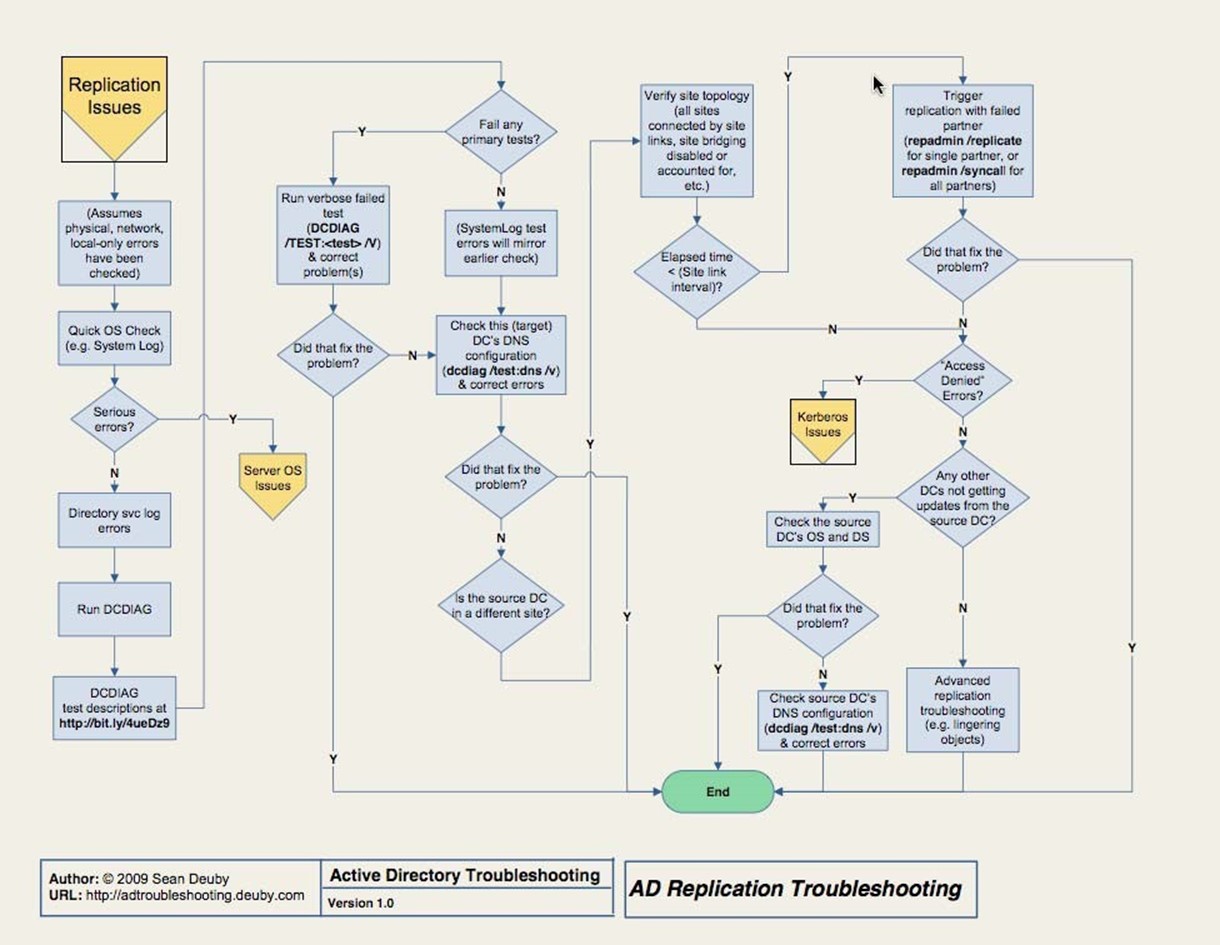

Replication Issues

If the flowchart has gotten you to this point, we're dealing with the page Figure 3.6 shows.

Figure 3.6: Replication issues.

Troubleshooting AD replication is often perceived as the most difficult and mysterious thing you can do with AD. It's like magic: either the trick works or it doesn't, and you'll never know why either way. I see more people struggle with replication issues than with anything else, yet replication is the one thing that can come up most frequently, due in large part to its heavy reliance on proper configuration and the underlying network infrastructure.

Sean proposes four reasons, which I agree with, that make replication troubleshooting difficult for people. In my words, they are:

- They've not been trained in a formal troubleshooting methodology. More admins than you might believe tend to troubleshoot by rote, meaning they try the same things in the same order every time—which is good—without really understanding what they're testing—which is bad.

- They don't approach the problem logically. Think about what's happening. Does it make sense to test name resolution between two domain controllers when other communications between them seem unhindered?

- They don't understand how replication works. This, I think, is the biggest problem. If you don't understand what's happening under the hood, you have no means of isolating individual processes or components to test them. If you can't do that, you can't find the problem.

- They don't understand what the tools do. This is also a big problem because if you don't really know what's being tested, you don't know how to eliminate potential root causes from your list of suspects.

Ultimately, you can't just run tools in the order someone else has prescribed. Sean proposes four steps to help you proceed; I prefer to limit the list to three:

- Form a hypothesis. What do you think the problem is? A firewall rule? IP addressing problem? DNS problem? Apply whatever experience you have to just pick a problem that seems likely.

- Predict what will happen. In other words, if you think external communications might be failing, you might predict that internal communications will be fine.

- Test your prediction. Use a tool to see if you're right. If you are, you've narrowed the problem domain. If you're not, you form a new hypothesis.

If you remember science class from elementary school, you might recognize this as the scientific method, and it works as well for troubleshooting as it does for any science.

Replication troubleshooting cannot proceed unless you've already resolved networking, local‐only issues, and other problems that precede this step in the core flowchart. Once you've done that, you'll quickly find yourself looking for OS‐related issues in the event log and then moving on to the Dcdiag tool; the flowchart provides a URL with a description of the tests to run.

You'll also have to exercise human review and analysis. Do your site links, for example, match your big network chart printout? In other words, are things configured as they should be? This is where a change‐auditing tool can save a ton of time. Rather than manually checking to make sure all your sites, site links, and other replication‐related configurations are right, you could just check an audit log to determine whether anything's changed. In fact, some change‐auditing tools will alert you when key changes happen—like site link reconfigurations—so that you can jump on the problem before it becomes an issue in the environment.
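To illustrate the "does reality match the chart" comparison, here is a small sketch that diffs a documented set of site links against an observed set. It assumes you have already exported both into plain dictionaries; the link names, costs, and intervals are made up, and this is not how any particular change‐auditing product works.

```python
def diff_site_links(expected, actual):
    """Return differences between documented and observed site links.

    Both arguments map a site-link name to a dict of settings. Each result
    is a tuple: (name, kind, documented_settings, observed_settings).
    """
    changes = []
    for name in sorted(set(expected) | set(actual)):
        if name not in actual:
            changes.append((name, "missing", expected[name], None))
        elif name not in expected:
            changes.append((name, "unexpected", None, actual[name]))
        elif expected[name] != actual[name]:
            changes.append((name, "changed", expected[name], actual[name]))
    return changes


# Hypothetical data: the documented design versus what was actually found.
documented = {
    "HQ-Branch": {"sites": ("Headquarters", "BranchOffice"), "cost": 100, "interval_min": 180},
    "HQ-Lab": {"sites": ("Headquarters", "BranchLab"), "cost": 200, "interval_min": 180},
}
observed = {
    "HQ-Branch": {"sites": ("Headquarters", "BranchOffice"), "cost": 100, "interval_min": 15},
    "Lab-Branch": {"sites": ("BranchLab", "BranchOffice"), "cost": 50, "interval_min": 180},
}

for name, kind, want, got in diff_site_links(documented, observed):
    print(f"{name}: {kind} (documented={want}, observed={got})")
```

An empty result means the observed configuration matches the documentation; anything else is a candidate for your next hypothesis.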

AD Database Issues

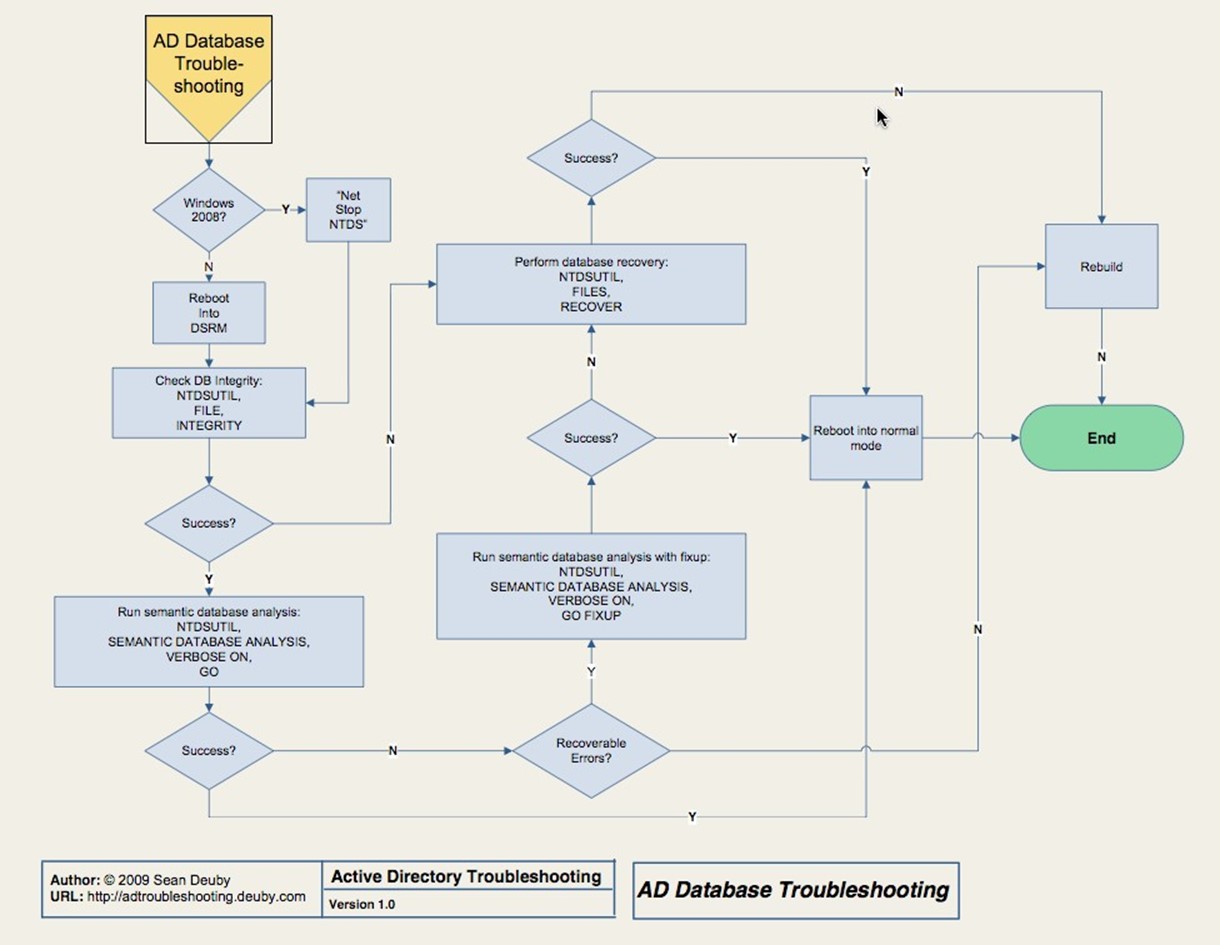

Next, you'll move into troubleshooting the AD database, which is covered in the flowchart that Figure 3.7 shows.

Figure 3.7: AD database troubleshooting.

Here, you'll probably be taking a domain controller offline so that you can reboot into Directory Services Restore Mode (DSRM), so make sure you know the DSRM password for whatever domain controller you're dealing with. You'll use NTDSUTIL to check the file integrity of the AD database itself because, at this point, we're starting to suspect corruption of some kind. If you find it, you'll be doing a database restore. If you don't have a backup, you're probably looking at demoting and re‐promoting the domain controller, if not rebuilding the server entirely. Sorry.

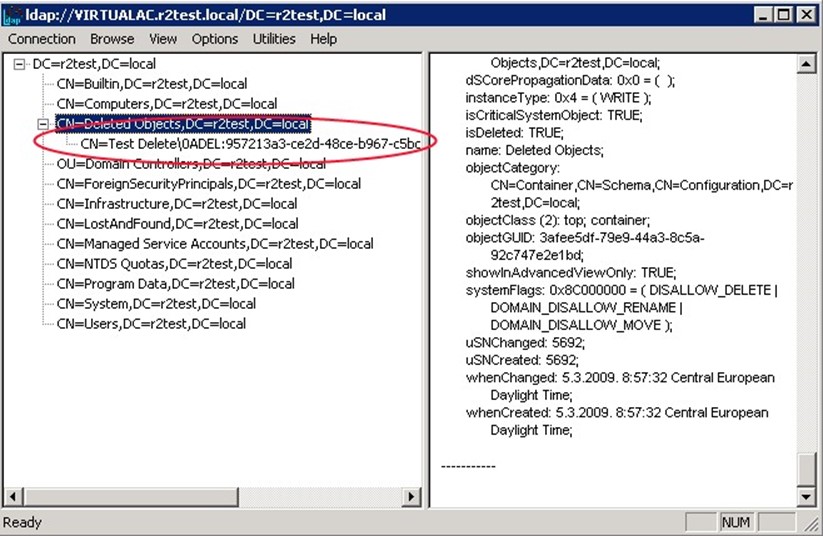

Again, this is where third‐party tools can help. You may have thought that the "AD Recycle Bin" feature of Windows Server 2008 R2 was a great feature, but it isn't designed to deal with a total database failure. Third‐party recovery tools (which are available from numerous vendors) can get you out of a jam here. Make sure you're not using too old a backup; ideally, domain controller backups shouldn't be older than a few days. Older backups will require the domain controller to perform a lot more replication when it comes back online, and a very old backup can re‐introduce tombstoned (deleted) objects to the domain, which would be a Bad Thing.

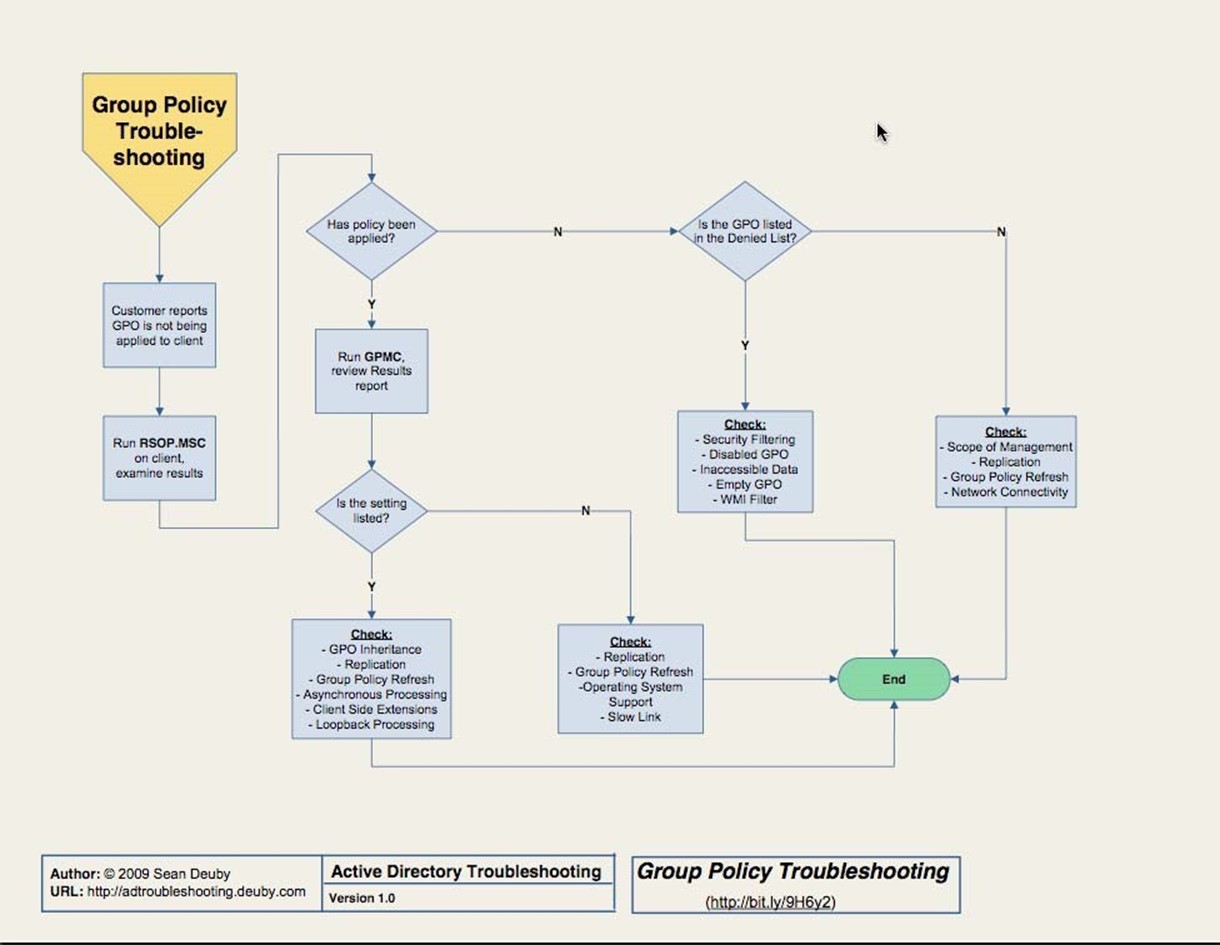

Group Policy Issues

If you've made it this far, AD's most complex components are working, and you're on to troubleshooting one of the easier elements. First, recognize that there are two broad classes of problem with Group Policy: no settings from a Group Policy object are being applied or the wrong settings are being applied. This chapter, as shown in the flowchart in Figure 3.8, is concerned only with the former. If you're getting settings but not the right ones, you need to dive into the GPOs, Resultant Set of Policy (RSoP), and other tools to discover where the wrong settings are being defined.

Figure 3.8: Group Policy troubleshooting.

Troubleshooting GPOs is pretty much about verifying their configuration. If a user isn't getting a specific GPO, the problem will be due to replication, inheritance, asynchronous processing (which means they're getting the GPO, just not as quickly as you expected), and so forth. Group Policy is complicated, and knowing all the little tricks and gotchas is key to solving problems. I recommend buying Jeremy Moskowitz' latest book on the subject; he's pretty much the industry expert on Group Policy and his books come with great explanations and flowcharts to help you troubleshoot these problems.

Unraveling "what's changed" is also the easiest way to fix GPO problems. Unfortunately, most tools that track AD configuration changes don't touch GPOs because GPOs aren't stored in AD itself. There are tools that can place GPOs under version‐control, and can help track the changes related to GPOs that do live in AD (such as where the GPOs are linked). Quest, NetWrix, Blackbird Group, and NetIQ all offer various solutions in these spaces.

Kerberos Issues

Finally, the last area we'll cover is Kerberos. Figure 3.9 shows the last page in the flowchart.

Figure 3.9: Kerberos issues.

Here, you'll need to install resource kit tools, preferably Kerbtray.exe, so that you can get a peek inside Kerberos. You'll also need a strong understanding of how Kerberos works.

Here's a brief breakdown:

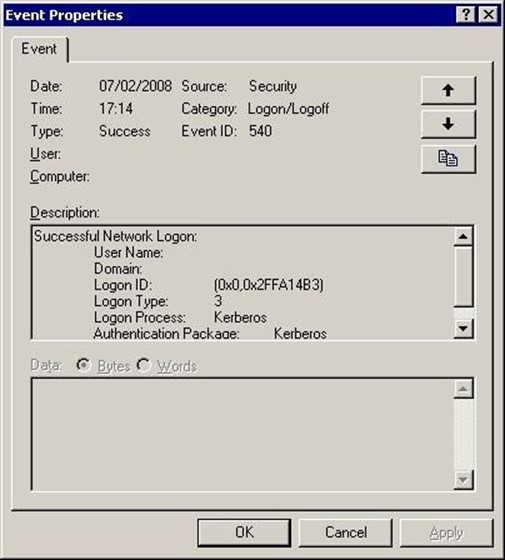

- When you log on, you get a Ticket‐Granting Ticket (TGT) from your authenticating domain controller. This enables you to get Kerberos tickets, each of which provides access to a specific server's resources. Each server you access will require you to have a ticket for that server, so the first time you access a given server each day, you'll have to contact a domain controller to get that ticket.

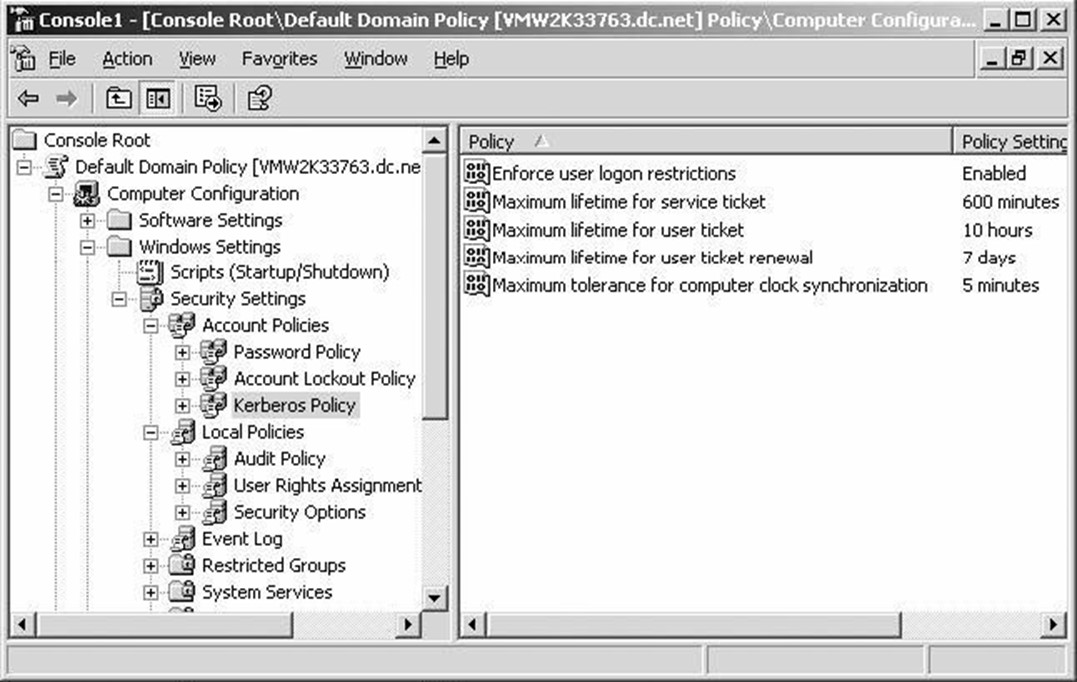

- Ticket validity is controlled by time stamps. Every machine in the domain needs to have roughly the same idea of what time it is, which is why Windows automatically synchronizes time within the domain. A skew of about 5 minutes is allowed by default.

- Tickets are a bit sensitive to UDP fragmentation, meaning you need to look at your network infrastructure and make sure it isn't hacking UDP packets into fragments. You can also force Kerberos to use TCP, which is designed to handle fragmentation.

There are a few other uncommon issues also covered by the flowchart.

Active Directory Security

In the security world, AAA is usually the term used to describe the broad functionality of security: authentication, authorization, and auditing. For a Windows‐centric network, Active Directory (AD) serves one of those roles: authentication. Internally, AD also has authorization and auditing functionality, which are used to secure and monitor objects listed within the directory itself. In this chapter, we'll talk about all of these functions, how AD implements them, and some of the pros and cons of AD's security model. We'll also look at reasons your own security design might be due for a review, and potentially a remodel.

This chapter will also discuss security capabilities usually acquired from third parties. I know, it would be nice to think that AD is completely self‐contained and capable of doing everything we need from a security perspective. In a modern business world, however, that's rarely true, as we shall see.

Active Directory Security Architecture

As mentioned, AD has a role in each of the three main security functions. Let's take each one separately.

Authentication: Kerberos

Microsoft adopted an extended version of the industry‐standard Kerberos protocol for use within AD. Compared with Microsoft's older authentication protocol, NTLM, Kerberos provides distinct benefits:

- Mutual authentication. Both sides of any security transaction are identified and authenticated to each other. With NTLM, the client was authenticated, but the client wasn't able to verify the server's identity.

- Distributed processing. Clients are responsible for maintaining 100% of the information needed to authenticate themselves to a server; servers maintain nothing. That behavior reduces server overhead, improving overall performance.

- Secure. Unlike NTLM, Kerberos doesn't transmit any portion of your password over the network at any time—not even in encrypted form. Thus, passwords remain a bit safer.

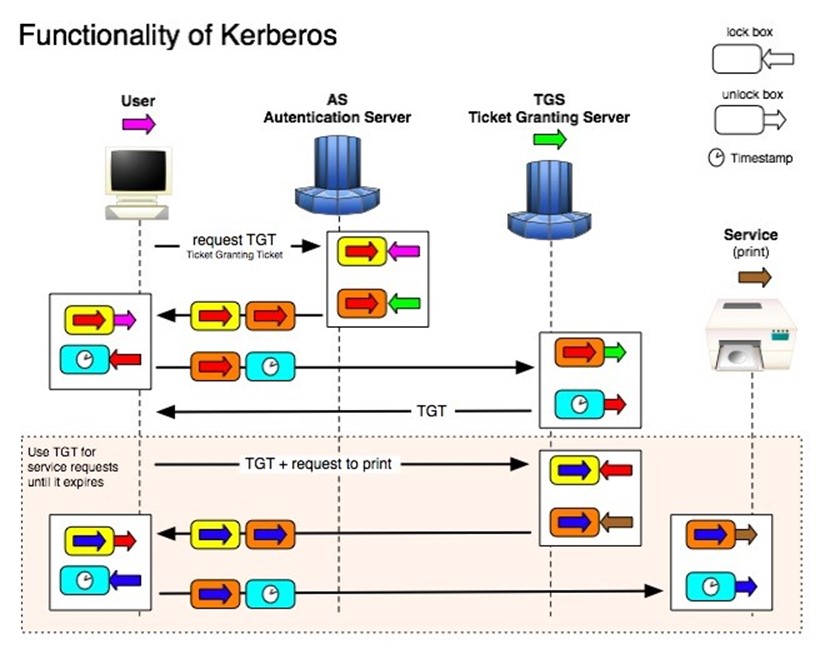

The name Kerberos comes from Greek mythology, and identifies the mythical three‐headed dog that guarded the gates to the Underworld. The three‐headed bit is the important one because the protocol entails three parties: the client, the server, and the Key Distribution Center (KDC).

In AD, Kerberos relies on the fact that the KDCs (a role played by domain controllers) have access to a hashed version of every user and computer password. The users and computers, of course, know their passwords, and the computers (which users log on to, of course) know the same password‐hashing algorithm as the domain controllers. This setup enables the hashed passwords to be used as a symmetric encryption key: If the KDC encrypts something with a user or computer password as the encryption key, that user or computer will be able to decrypt it using the same hashed password.

When a user logs on, their computer, acting on the user's behalf, contacts the KDC and sends an authentication packet. The KDC attempts to decrypt it using the user's hashed password, and if that is successful, the KDC can read the authentication packet. The KDC constructs a ticket‐granting ticket (TGT), encrypting it first with its own encryption key (which the user doesn't know), then again with the user's key (which the user does know). The user's computer stores this TGT in a special area of memory that isn't swapped to disk at any time, so the TGT is never permanently stored. The TGT contains the user's security token, listing all of the security identifiers (SIDs) for the user and whatever groups they belong to.

When the user needs to access a server, their computer resends the TGT to a domain controller. The domain controller decrypts the TGT using its own secret key. Keep in mind that there's no way the user could have tampered with the TGT and still have that decryption work, because the user doesn't have access to the domain controller's key. The KDC creates a copy of the TGT called a ticket, and encrypts it using the hashed password of whatever server the user is attempting to access. That's encrypted again using the user's key, and sent to the user. The user then transmits that ticket to the server they want to access, along with a request for whatever resource they need.

The server attempts to use its key to decrypt the ticket. If it's able to do so, then several things are known:

- The server is the one the user intended, because if it weren't, it wouldn't have the key needed to decrypt and read the ticket.

- The user's identity is known, because it's included in a ticket that only the server could read.

- The user's identity is trusted because the ticket was encrypted not by the user but by the KDC, and in a way that only the KDC and the server could read.
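The double‐wrapping idea behind those three conclusions can be sketched in a few lines. This is a toy model only: SHA‐256 stands in for the real password hash, the keystream cipher is a teaching device rather than the encryption Kerberos actually uses, and the account names and passwords are hypothetical. It shows the KDC sealing a ticket with the server's key and then the user's key, and only the intended server being able to open the result.

```python
import hashlib
import hmac


def key_from_password(password):
    """Derive a shared key from a password (SHA-256 is a stand-in hash)."""
    return hashlib.sha256(password.encode("utf-8")).digest()


def _keystream(key, length):
    """Toy keystream: SHA-256 of key plus a counter, repeated as needed."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(key + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def seal(key, plaintext):
    """Encrypt plaintext and prepend an integrity tag (toy cipher, not real crypto)."""
    body = bytes(a ^ b for a, b in zip(plaintext, _keystream(key, len(plaintext))))
    tag = hmac.new(key, body, hashlib.sha256).digest()
    return tag + body


def unseal(key, blob):
    """Return the plaintext, or None if the key does not match the blob."""
    tag, body = blob[:32], blob[32:]
    expected = hmac.new(key, body, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        return None
    return bytes(a ^ b for a, b in zip(body, _keystream(key, len(body))))


# Hypothetical accounts known to the KDC, stored as password-derived keys only.
kdc_database = {
    "alice": key_from_password("alice-password"),
    "fileserver": key_from_password("fileserver-password"),
}


def issue_ticket(user, server):
    """KDC side: seal the user's identity with the server's key, then the user's key."""
    inner = seal(kdc_database[server], f"user={user}".encode("utf-8"))
    return seal(kdc_database[user], inner)


# Client side: Alice removes the outer layer with her own password-derived key.
wrapped = issue_ticket("alice", "fileserver")
ticket = unseal(key_from_password("alice-password"), wrapped)

# Server side: only the intended server's key opens the ticket.
print(unseal(key_from_password("fileserver-password"), ticket))  # b'user=alice'
print(unseal(key_from_password("some-other-server"), ticket))    # None
```

Note that the client can strip its own layer but cannot read or alter the inner ticket, which is what lets the server trust the identity inside it.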

Figure 4.1 shows a functional diagram of how Kerberos works. Keep in mind that this isn't a Microsoft‐specific protocol; Microsoft made some extensions to allow for Windows‐specific needs, such as the need to include a security token in the tickets, but Windows' Kerberos still works like the standard MIT‐developed protocol.

Figure 4.1: Kerberos functional diagram.

The user's computer caches the ticket for 10 hours (by default), enabling it to continue accessing that server over the course of a work day.

If a user's group memberships are changed during the day, that change won't be reflected until the user logs off—destroying their tickets and TGT—and logs back on—forcing the KDC to construct a new TGT.

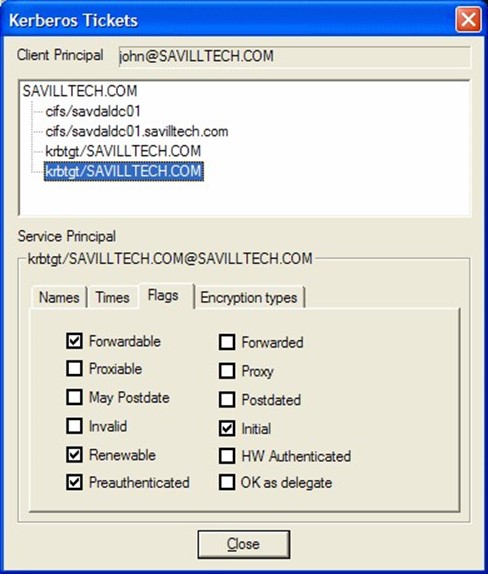

Microsoft provides a utility called KerbTray.exe, shown in Figure 4.2, which provides a way to view locally‐cached tickets.

Figure 4.2: The KerbTray utility.

This utility also provides access to several key properties of a ticket, including whether it can be renewed, whether it can be forwarded by a server to another server in order to pass along a user's authentication, and so forth.

Kerberos' primary weakness is a dependence on time for the initial TGT‐requesting authenticator. In order to prevent someone from capturing an authenticator on the network and then replaying it at a later time, Kerberos requires authenticators to be timestamped, and will by default reject any authenticator more than a few minutes old. Domain computers synchronize their time with their authenticating domain controller (after authentication), and domain controllers synchronize with the domain's PDC Emulator roleholder. Without this time sync, computers' clocks would tend to drift, taking them outside the few‐minutes Kerberos "window" and making authentication impossible.
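The time‐window rule is simple enough to express directly. The sketch below assumes the 5‐minute default tolerance mentioned earlier and uses an arbitrary example timestamp; the function name is made up for illustration and is not part of any Windows API.

```python
from datetime import datetime, timedelta, timezone

MAX_SKEW = timedelta(minutes=5)  # default tolerance; configurable in practice


def authenticator_accepted(client_time, server_time, max_skew=MAX_SKEW):
    """Return True if the authenticator's timestamp is within the allowed skew."""
    return abs(server_time - client_time) <= max_skew


now = datetime(2024, 1, 15, 9, 0, 0, tzinfo=timezone.utc)  # arbitrary example time
print(authenticator_accepted(now - timedelta(minutes=3), now))   # True
print(authenticator_accepted(now - timedelta(minutes=12), now))  # False
print(authenticator_accepted(now + timedelta(minutes=7), now))   # False
```

The check is symmetric: a client whose clock runs fast is rejected just as readily as one whose clock runs slow, which is why a drifting clock in either direction breaks authentication.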

Authorization: DACLs

As I've already mentioned, AD's main role is authentication. However, for information inside the directory (such as users and computers, along with configuration objects like sites and services), AD also performs its own authorization and auditing.

Every AD object is secured with a discretionary access control list (DACL). DACLs follow the same basic structure as Windows' NTFS file permissions. The DACL consists of a list of access control entries (ACEs). Each ACE grants or denies specific permissions to a single security principal, which would be a user or a group. Figure 4.3 shows a pretty typical AD permissions dialog.

Figure 4.3: AD permissions dialog.

As with NTFS permissions, objects can have directly‐applied ACEs in their DACLs, and they can inherit ACEs from containing objects' DACLs. In most directory implementations, for example, user objects have few or no directly‐defined ACEs but instead inherit all of their ACEs from a containing organizational unit (OU).

ACEs actually consist of a permissions mask (which defines the permissions the ACE is granting or denying) and a SID. When displaying ACEs in a dialog box, Windows translates those SIDs to user and group names. Doing so requires a quick lookup in the directory, so in a busy network, it's sometimes possible to see the SIDs for a brief moment before they're replaced with the looked‐up user or group names.

It's important to understand that, in AD, computers are the same kind of security principal as a user, meaning computers don't have any special permissions. For example, if a Routing and Remote Access Server (RRAS) machine is attempting to authenticate a dial‐in user, the server might need to look at properties of the user's AD account to see whether the user has any dial‐in time restrictions. Doing so requires that the server have permission to read certain attributes of the user's account, which is why the dialog in Figure 4.3 shows the "RAS and IAS Servers" user group as having permissions to the user's account; without that permission, the server would be unable to examine the user's account to determine whether the dial‐in was to be allowed.
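To make the DACL structure concrete, here is a simplified model of ACEs (a mask plus a SID) and an ordered access check. The permission bits are illustrative rather than the real Windows mask values, the SID strings are made up, and the evaluation loop is a sketch of the general allow/deny walk, not a copy of the operating system's implementation.

```python
from dataclasses import dataclass
from enum import IntFlag


class Rights(IntFlag):
    """Illustrative permission bits; not the real Windows mask values."""
    READ_PROPERTY = 1
    WRITE_PROPERTY = 2
    DELETE = 4


@dataclass(frozen=True)
class Ace:
    allow: bool   # True grants the mask, False denies it
    sid: str      # security principal (user, group, or computer) it applies to
    mask: Rights  # permissions being granted or denied


def access_check(dacl, token_sids, requested):
    """Walk the ACEs in order; a matching deny wins if reached before a full grant."""
    remaining = int(requested)
    for ace in dacl:
        if ace.sid not in token_sids:
            continue  # entry is for a principal the caller does not hold
        mask = int(ace.mask)
        if not ace.allow and (mask & remaining):
            return False
        if ace.allow:
            remaining &= ~mask
        if not remaining:
            return True
    return False  # anything not explicitly granted is denied


# Hypothetical SIDs and a DACL such as a user object might inherit from its OU.
ADMINS = "S-1-5-21-1000-512"
RAS_SERVERS = "S-1-5-21-1000-553"
CONTRACTORS = "S-1-5-21-1000-1105"

dacl = [
    Ace(False, CONTRACTORS, Rights.WRITE_PROPERTY),
    Ace(True, ADMINS, Rights.READ_PROPERTY | Rights.WRITE_PROPERTY | Rights.DELETE),
    Ace(True, RAS_SERVERS, Rights.READ_PROPERTY),
]

print(access_check(dacl, {RAS_SERVERS}, Rights.READ_PROPERTY))           # True
print(access_check(dacl, {RAS_SERVERS}, Rights.WRITE_PROPERTY))          # False
print(access_check(dacl, {ADMINS, CONTRACTORS}, Rights.WRITE_PROPERTY))  # False
```

The first call mirrors the RRAS example: the server's group holds a read grant, so it can inspect the account, but nothing more. The last call shows a deny entry overriding a grant the caller would otherwise have through another group.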

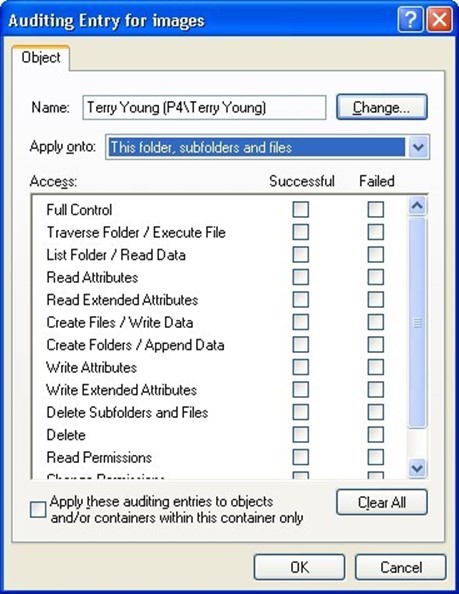

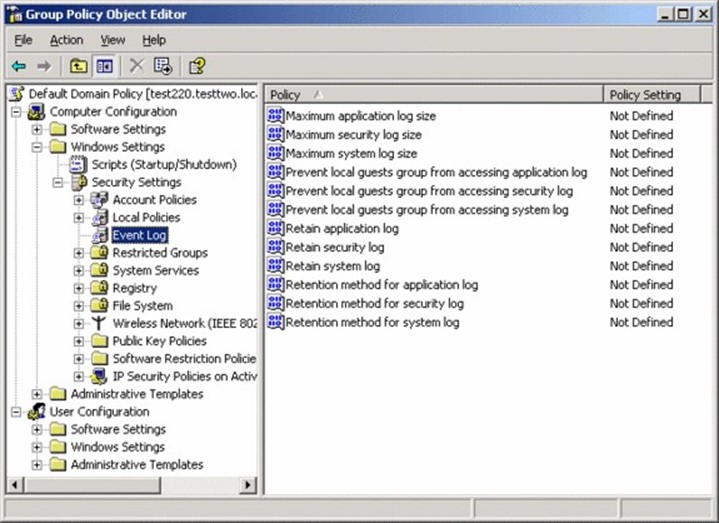

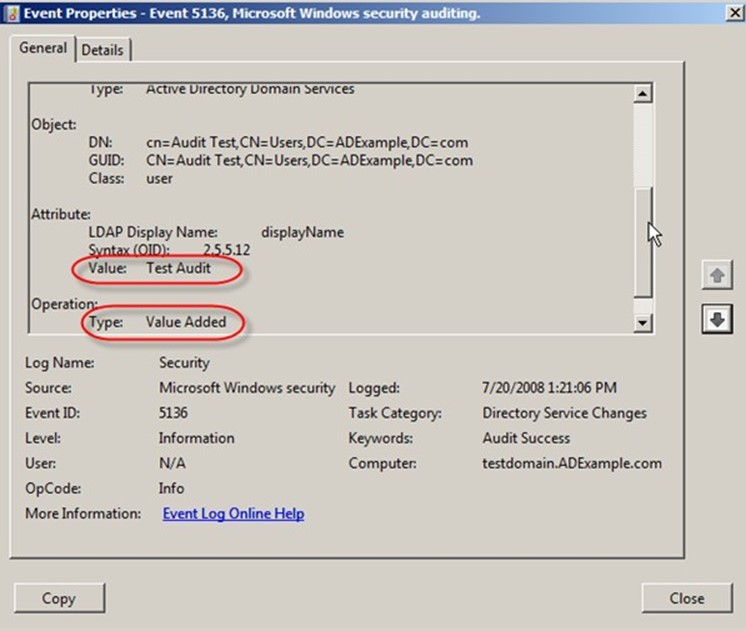

Auditing: SACLs