Cybersecurity Gateway

Knowledge Objectives

- Describe the basic operation of computer networks and the internet, including common networking devices, routed and routing protocols, different types of area networks and topologies, and the Domain Name System (DNS).

- Explain the function of physical, logical, and virtual addressing in networking.

- Discuss IPv4 and IPv6 addressing and subnetting fundamentals.

- Discuss the OSI Reference Model and TCP/IP model including packet analysis, protocol and packet filtering, and TCP/IP encapsulation.

- Explain various network security models, concepts, and principles including perimeter-based security and the Zero Trust model.

- Discuss cloud and data center security design concepts including cloud computing security considerations and requirements, the role of virtualization in cloud computing, existing data security solution weaknesses, east-west traffic protection, and security in virtualized data centers.

- Describe network security technologies including firewalls, Intrusion Detection Systems and Intrusion Prevention Systems (IDS/IPS), web content filters, virtual private networks (VPNs), data loss prevention (DLP), Unified Threat Management (UTM), and security information and event management (SIEM).

- Explain cloud, virtualization, and storage security concepts and challenges.

- Discuss network operations concepts including traffic analysis, troubleshooting, and server and systems administration.

- Discuss directory services, network device optimization, and structured host and network troubleshooting.

- Describe IT Infrastructure Library (ITIL) concepts and help desk and technical support functions.

The Connected Globe

With more than four billion internet users worldwide in 2018, which represents well over half the world's population, the internet connects businesses, governments, and people across the globe. Our reliance on the internet will continue to grow, with nearly 30 billion devices and "things" – including autonomous vehicles, household appliances, wearable technology, and more – connecting to the internet of things (IoT) and more than 7.3 billion worldwide smartphone subscriptions each downloading 17 gigabytes (GB) of monthly data by 2023.

The NET: How things connect

In the 1960s, the U.S. Defense Advanced Research Projects Agency (DARPA) created ARPANET, the precursor to the modern internet. ARPANET was the first packet switching network. A packet switching network breaks data into small blocks (packets), transmits each individual packet from node to node toward its destination, then reassembles the individual packets in the correct order at the destination.
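The split-and-reassemble idea can be illustrated with a minimal Python sketch. The function names `packetize` and `reassemble`, the 8-byte packet size, and the use of a simple sequence number are assumptions made for illustration; real protocols carry far more header information.

```python
import random

def packetize(data: bytes, size: int) -> list[tuple[int, bytes]]:
    """Split data into (sequence_number, payload) packets of at most `size` bytes."""
    return [(seq, data[offset:offset + size])
            for seq, offset in enumerate(range(0, len(data), size))]

def reassemble(packets: list[tuple[int, bytes]]) -> bytes:
    """Restore the original byte order by sorting on the sequence number."""
    return b"".join(payload for _, payload in sorted(packets))

message = b"ARPANET was the first packet switching network."
packets = packetize(message, size=8)   # hypothetical 8-byte packets
random.shuffle(packets)                # packets may arrive out of order
restored = reassemble(packets)
print(restored == message)
```

The sequence number is what lets the destination put packets back in order regardless of the path each one took.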

Today, hundreds of millions of routers (discussed in Section 2.1.2) deliver Transmission Control Protocol/Internet Protocol (TCP/IP) packets using various routing protocols (discussed in Section 2.1.3) across local-area networks and wide-area networks (LANs and WANs, respectively, discussed in Section 2.1.4). The Domain Name System (DNS, discussed in Section 2.1.5) enables internet addresses, such as www.paloaltonetworks.com, to be translated into routable IP addresses.

Introduction to networking devices

Routers are physical or virtual devices that send data packets to destination networks along a network path using logical addresses (discussed in Section 2.2). Routers use various routing protocols (discussed in Section 2.1.3) to determine the best path to a destination, based on variables such as bandwidth, cost, delay, and distance. A wireless router combines the functionality of a router and a wireless access point (AP) to provide routing between a wired and wireless network. An AP is a network device that connects to a router or wired network and transmits a Wi-Fi signal so that wireless devices can connect to a wireless (or Wi-Fi) network. A wireless repeater rebroadcasts the wireless signal from a wireless router or AP to extend the range of a Wi-Fi network.

A hub (or concentrator) is a network device that connects multiple devices – such as desktop computers, laptop docking stations, and printers – on a local-area network (LAN). Network traffic that is sent to a hub is broadcast out of all ports on the hub, which can create network congestion and introduce potential security risks (broadcast data can be intercepted).

A switch is essentially an intelligent hub that uses physical addresses (discussed in Section 2.2) to forward data packets to devices on a network. Unlike a hub, a switch is designed to forward data packets only to the port that corresponds to the destination device. This transmission method (referred to as micro-segmentation) creates separate network segments and effectively increases the data transmission rates available on the individual network segments. Also, a switch can be used to implement virtual LANs (VLANs), which logically segregate a network and limit broadcast domains and collision domains.
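The difference between hub and switch forwarding can be sketched in a few lines of Python. This is a simplified model, not a description of any vendor's implementation: the `Hub` and `Switch` classes are hypothetical, and the way the switch learns which MAC address sits behind which port (by recording the source address of each frame) is an assumption that the text above does not spell out.

```python
class Hub:
    """Repeats every frame out of all ports except the one it arrived on."""

    def __init__(self, ports: int):
        self.ports = ports

    def forward(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        return [p for p in range(self.ports) if p != in_port]


class Switch:
    """Forwards a frame only to the port associated with the destination MAC."""

    def __init__(self, ports: int):
        self.ports = ports
        self.mac_table: dict[str, int] = {}   # MAC address -> port

    def forward(self, in_port: int, src_mac: str, dst_mac: str) -> list[int]:
        self.mac_table[src_mac] = in_port      # learn where the sender lives
        if dst_mac in self.mac_table:
            return [self.mac_table[dst_mac]]   # known destination: one port only
        # Unknown destination: fall back to hub-like flooding (assumed behavior).
        return [p for p in range(self.ports) if p != in_port]


hub, switch = Hub(4), Switch(4)
print(hub.forward(0, "aa", "bb"))      # every other port sees the frame
print(switch.forward(0, "aa", "bb"))   # destination not yet learned
print(switch.forward(1, "bb", "aa"))   # "aa" was learned on port 0
```

Once both hosts have sent at least one frame, traffic between them no longer reaches the other ports, which is the congestion and interception benefit described above.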

Key Terms:

- A router is a network device that sends data packets to a destination network along a network path.

- A wireless repeater rebroadcasts the wireless signal from a wireless router or AP to extend the range of a Wi-Fi network.

- A hub (or concentrator) is a device used to connect multiple networked devices on a local-area network (LAN).

- A switch is an intelligent hub that forwards data packets only to the port associated with the destination device on a network.

- A virtual LAN (VLAN) is a logical network that is created within a physical LAN.

- A broadcast domain is the portion of a network that receives broadcast packets sent from a node in the domain.

- A collision domain is a network segment on which data packets may collide with each other during transmission.

Routed and routing protocols

Routed protocols, such as the Internet Protocol (IP), address packets with routing information that enables those packets to be transported across networks using routing protocols. IP is discussed further in Sections 2.2 and 2.2.1.

Routing protocols are defined at the Network layer of the OSI model (discussed in Section 2.3.1) and specify how routers communicate with one another on a network. Routing protocols can either be static or dynamic.

Key Terms:

- An Internet Protocol (IP) address is a 32-bit or 128-bit identifier assigned to a networked device for communications at the Network layer of the OSI model (discussed in Section 2.3.1) or the Internet layer of the TCP/IP model (discussed in Section 2.3.2).

A static routing protocol requires that routes be created and updated manually on a router or other network device. If a static route is down, traffic can't be automatically rerouted unless an alternate route has been configured. Also, if the route is congested, traffic can't be automatically rerouted over the less congested alternate route. Static routing is practical only in very small networks or for very limited, special-case routing scenarios (for example, a destination that's reachable only via a single router or when used as a backup route). However, static routing has low bandwidth requirements (routing information isn't broadcast across the network) and some built-in security (users can only get to destinations that are specified in statically defined routes).

A dynamic routing protocol can automatically learn new (or alternate) routes and determine the best route to a destination. The routing table is updated periodically with current routing information. Dynamic routing protocols are further classified as:

Distance-vector. A distance-vector protocol makes routing decisions based on two factors: the distance (hop count or other metric) and vector (the egress router interface). It periodically informs its peers and/or neighbors of topology changes. Convergence, the time required for all routers in a network to update their routing tables with the most current information (such as link status changes), can be a significant problem for distance-vector protocols. Without convergence, some routers in a network may be unaware of topology changes, which causes the router to send traffic to an invalid destination. During convergence, routing information is exchanged between routers, and the network slows down considerably. Convergence can take several minutes in networks that use distance-vector protocols.

Routing Information Protocol (RIP) is an example of a distance-vector routing protocol that uses hop count as its routing metric. To prevent routing loops, in which packets effectively get stuck bouncing between various router nodes, RIP implements a hop limit of 15, which limits the size of networks that RIP can support. After a data packet crosses 15 router nodes (hops) between a source and a destination, the destination is considered unreachable. In addition to hop limits, RIP employs four other mechanisms to prevent routing loops:

- Split horizon. Prevents a router from advertising a route back out through the same interface from which the route was learned.

- Triggered updates. When a change is detected, the update is sent immediately instead of waiting 30 seconds for the next scheduled RIP update.

- Route poisoning. Sets the hop count on a bad route to 16, which effectively advertises the route as unreachable.

- Holddown timers. Causes a router to start a timer when the router first receives information that a destination is unreachable. Subsequent updates about that destination will not be accepted until the timer expires. This timer also helps avoid problems associated with flapping. Flapping occurs when a route (or interface) repeatedly changes state (up, down, up, down) over a short period of time.
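The hop limit, route poisoning, and split horizon rules above can be sketched as a small routing-table update routine. This is a simplified illustration, not RIP itself: the function names, the table layout (`destination -> (hops, next_hop)`), and the router names are hypothetical, and timers are left out entirely.

```python
INFINITY = 16  # a hop count of 16 marks a route as unreachable

def merge_update(table: dict, neighbor: str, advertised: dict) -> bool:
    """Apply one neighbor's advertisement; return True if the table changed."""
    changed = False
    for dest, hops in advertised.items():
        new_hops = min(hops + 1, INFINITY)      # one more hop to reach the neighbor
        current = table.get(dest)
        if current is None:
            if new_hops < INFINITY:             # never install an unreachable route
                table[dest] = (new_hops, neighbor)
                changed = True
        elif current[1] == neighbor:
            # The route already goes through this neighbor, so its news
            # (including a poisoned route) replaces what we had.
            if current[0] != new_hops:
                table[dest] = (new_hops, neighbor)
                changed = True
        elif new_hops < current[0]:             # a strictly shorter path wins
            table[dest] = (new_hops, neighbor)
            changed = True
    return changed

def advertise(table: dict, to_neighbor: str) -> dict:
    """Split horizon: omit routes that were learned from `to_neighbor`."""
    return {dest: hops for dest, (hops, next_hop) in table.items()
            if next_hop != to_neighbor}

table = {}
merge_update(table, "R2", {"10.0.0.0/8": 1})     # learned via R2
merge_update(table, "R2", {"10.0.0.0/8": 16})    # R2 poisons the route
print(table, advertise(table, "R2"), advertise(table, "R3"))
```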

Link-state. A link-state protocol requires every router to calculate and maintain a complete map, or routing table, of the entire network. Routers that use a link-state protocol periodically transmit updates that contain information about adjacent connections, or link states, to all other routers in the network. Link-state protocols are compute-intensive, but they can calculate the most efficient route to a destination. They consider numerous factors such as link speed, delay, load, reliability, and cost (an arbitrarily assigned weight or metric). Convergence occurs very rapidly (within seconds) with link-state protocols.

Open Shortest Path First (OSPF) is an example of a link-state routing protocol that is often used in large enterprise networks. OSPF routes network traffic within a single autonomous system (AS). OSPF networks are divided into areas identified by 32-bit area identifiers. Area identifiers can (but don't have to) correspond to network IP addresses and can duplicate IP addresses without conflicts.
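Once a link-state router holds a map of the network, it computes the lowest-cost path to every destination. OSPF does this with Dijkstra's shortest-path-first algorithm; the sketch below shows the idea on a hypothetical four-router topology with made-up link costs, and it omits everything else a real implementation needs (link-state advertisements, areas, and so on).

```python
import heapq

def shortest_paths(links: dict, source: str) -> dict:
    """Dijkstra's algorithm: lowest total cost from `source` to each router."""
    dist = {source: 0}
    heap = [(0, source)]
    while heap:
        cost, node = heapq.heappop(heap)
        if cost > dist.get(node, float("inf")):
            continue                      # stale queue entry, a better path exists
        for neighbor, link_cost in links.get(node, {}).items():
            new_cost = cost + link_cost
            if new_cost < dist.get(neighbor, float("inf")):
                dist[neighbor] = new_cost
                heapq.heappush(heap, (new_cost, neighbor))
    return dist

# Hypothetical topology: router -> {neighbor: link cost}
links = {
    "R1": {"R2": 10, "R3": 1},
    "R2": {"R1": 10, "R4": 1},
    "R3": {"R1": 1, "R4": 5},
    "R4": {"R2": 1, "R3": 5},
}
print(shortest_paths(links, "R1"))
```

In this topology the direct R1-R2 link (cost 10) loses to the longer path through R3 and R4 (cost 7), which is the kind of decision a hop-count metric alone cannot make.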

Path-vector. A path-vector protocol is similar to a distance-vector protocol, but without the scalability issues associated with limited hop counts in distance-vector protocols. Each routing table entry in a path-vector protocol contains path information that gets dynamically updated.
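One way to picture the path information in a routing table entry is as a list of the autonomous systems a route has traversed. The sketch below assumes that representation and shows how a router can use it to reject looping routes without a hop limit. The function names are hypothetical, and the AS numbers come from the range reserved for documentation.

```python
def accept_route(local_asn: int, as_path: list[int]) -> bool:
    """Reject any route whose path already contains our own AS (a loop)."""
    return local_asn not in as_path

def advertise(local_asn: int, as_path: list[int]) -> list[int]:
    """Prepend our own AS number before passing the route to a peer."""
    return [local_asn, *as_path]

LOCAL_ASN = 64500                      # hypothetical local AS number
received = [64501, 64502]              # path advertised by a peer
if accept_route(LOCAL_ASN, received):
    print(advertise(LOCAL_ASN, received))
```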

Key Terms:

- Convergence is the time required for all routers in a network to update their routing tables with the most current routing information about the network.

- Hop count generally refers to the number of router nodes that a packet must pass through to reach its destination.

- An autonomous system (AS) is a group of contiguous IP address ranges under the control of a single internet entity. Individual autonomous systems are assigned a 16-bit or 32-bit AS number (ASN) that uniquely identifies the network on the Internet. ASNs are assigned by the Internet Assigned Numbers Authority (IANA).

- Border Gateway Protocol (BGP) is an example of a path-vector protocol used between separate autonomous systems. BGP is the core protocol used by Internet Service Providers (ISPs), network service providers (NSPs), and on very large private IP networks.

Area networks and topologies

Most computer networks are broadly classified as either local-area networks (LANs) or wide-area networks (WANs).

A local-area network (LAN) is a computer network that connects end-user devices such as laptop and desktop computers, servers, printers, and other devices so that applications, databases, files, file storage, and other networked resources can be shared among authorized users on the LAN. A LAN operates across a relatively small geographic area, such as a floor, a building, or a group of buildings, typically at speeds of up to 10 megabits per second (Mbps - Ethernet), 100Mbps (Fast Ethernet), 1,000Mbps (or 1 gigabit per second [1Gbps] – Gigabit Ethernet) on wired networks and 11Mbps (802.11b), 54Mbps (802.11a and g), 450Mbps (802.11n), 1.3Gbps (802.11ac), and 14Gbps (802.11ax – theoretical) on wireless networks. A LAN can be wired, wireless, or a combination of wired and wireless. Examples of networking equipment commonly used in LANs include bridges, hubs, repeaters, switches, and wireless access points (APs).

Key Terms:

- A local-area network (LAN) is a computer network that connects laptop and desktop computers, servers, printers, and other devices so that applications, databases, files and file storage, and other networked resources can be shared across a relatively small geographic area, such as a floor, a building, or a group of buildings.

- A bridge is a wired or wireless network device that extends a network or joins separate network segments.

- A repeater is a network device that boosts or re-transmits a signal to physically extend the range of a wired or wireless network.

Two basic network topologies (and many variations) are commonly used in LANs:

- Star. Each node on the network is directly connected to a switch, hub, or concentrator, and all data communications must pass through the switch, hub, or concentrator. The switch, hub, or concentrator can thus become a performance bottleneck or single point of failure in the network. A star topology is ideal for practically any size environment and is the most commonly used basic LAN topology. A star topology is also easy to install and maintain, and network faults are easily isolated without affecting the rest of the network.

- Mesh. All nodes are interconnected to provide multiple paths to all other resources. A mesh topology may be used throughout the network or only for the most critical network components, such as routers, switches, and servers, to eliminate performance bottlenecks and single points of failure.

Other once-popular network topologies, such as ring and bus, are rarely found in modern networks.

Key Terms:

- In a ring topology, all nodes are connected in a closed loop that forms a continuous ring. In a ring topology, all communication travels in a single direction around the ring. Ring topologies were common in token ring networks.

- In a bus (or linear bus) topology, all nodes are connected to a single cable (the backbone) that is terminated on both ends. In the past, bus networks were commonly used for very small networks because they were inexpensive and relatively easy to install, but today bus topologies are rarely used. The cable media has physical limitations (the cable length), the backbone is a single point of failure (a break anywhere on the network affects the entire network), and tracing a fault in a large network can be extremely difficult.

- A wide-area network (WAN) is a computer network that connects multiple LANs or other WANs across a relatively large geographic area, such as a small city, a region or country, a global enterprise network, or the entire planet (for example, the internet).

A WAN connects networks using telecommunications circuits and technologies such as broadband cable, digital subscriber line (DSL), fiber optic, optical carrier (for example, OC-3), and T-carrier (for example, T-1), at various speeds typically ranging from 256Kbps to several hundred megabits per second. Examples of networking equipment commonly used in WANs include access servers, Channel Service Units/Data Service Units (CSUs/DSUs), firewalls, modems, routers, virtual private network (VPN) gateways, and WAN switches.

The hierarchical internetworking model is a best practice network design, originally proposed by Cisco, that comprises three layers:

- Access. User endpoints and servers connect to the network at this layer, typically via network switches. Switches at this layer may perform some Layer 3 (discussed in Section 2.3.1) functions and may also provide electrical power via power over Ethernet (PoE) ports to other equipment connected to the network, such as wireless APs or Voice over IP (VoIP) phones.

- Distribution. This layer performs any compute-intensive routing and switching functions on the network such as complex routing, filtering, and Quality of Service (QoS). Switches at this layer may be Layer 7 (discussed in Section 2.3.1) switches and connect to lower-end Access layer switches and higher-end Core layer switches.

- Core. This layer is responsible for high-speed routing and switching. Routers and switches at this layer are designed for high-speed packet routing and forwarding.

Key Terms:

- Broadband cable is a type of high-speed internet access that delivers different upload and download data speeds over a shared network medium. The overall speed varies depending on the network traffic load from all the subscribers on the network segment.

- Digital subscriber line (DSL) is a type of high-speed internet access that delivers different upload and download data speeds. The overall speed depends on the distance from the home or business location to the provider's central office (CO).

- Fiber optic technology converts electrical data signals to light and delivers constant data speeds in the upload and download directions over a dedicated fiber optic cable medium. Fiber optic technology is much faster and more secure than other types of network technology.

- Optical carrier is a specification for the transmission bandwidth of digital signals on Synchronous Optical Networking (SONET) fiber optic networks. Optical carrier transmission rates are designated by the integer value of the multiple of the base rate (51.84Mbps). For example, OC-3 designates a 155.52Mbps (3 x 51.84) network and OC-192 designates a 9953.28Mbps (192 x 51.84) network.

- T-carrier is a full-duplex digital transmission system that uses multiple pairs of copper wire to transmit electrical signals over a network. For example, a T-1 circuit consists of two pairs of copper wire – one pair transmits, the other pair receives – that are multiplexed to provide a total of 24 channels, each delivering 64Kbps of data, for a total bandwidth of 1.544Mbps.

- Power over Ethernet (PoE) is a network standard that provides electrical power to certain network devices over Ethernet cables.

- Voice over IP (VoIP) or IP telephony is technology that provides voice communication over an Internet Protocol (IP)-based network.

- Quality of Service (QoS) is the overall performance of specific applications or services on a network including error rate, bit rate, throughput, transmission delay, availability, and jitter. QoS policies can be configured on certain network and security devices to prioritize certain traffic, such as voice or video, over other, less performance-intensive traffic.
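The optical carrier and T-carrier figures in the key terms above can be checked with a little arithmetic. The sketch below works in Kbps to keep everything in whole numbers. The function and constant names are illustrative, and the 8Kbps framing figure is the standard T-1 framing overhead that accounts for the gap between 24 x 64Kbps and 1.544Mbps.

```python
OC_BASE_KBPS = 51_840          # OC-1 base rate: 51.84Mbps

def oc_rate_kbps(n: int) -> int:
    """Transmission rate of an OC-n circuit in Kbps."""
    return n * OC_BASE_KBPS

T1_CHANNELS = 24               # channels multiplexed on a T-1 circuit
T1_CHANNEL_KBPS = 64           # data rate of each channel
T1_FRAMING_KBPS = 8            # framing overhead

t1_total_kbps = T1_CHANNELS * T1_CHANNEL_KBPS + T1_FRAMING_KBPS

print(oc_rate_kbps(3) / 1000, "Mbps")     # OC-3
print(oc_rate_kbps(192) / 1000, "Mbps")   # OC-192
print(t1_total_kbps / 1000, "Mbps")       # T-1
```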

In addition to LANs and WANs, many other types of area networks are used for different purposes:

- Campus area networks (CANs) and wireless campus area networks (WCANs) connect multiple buildings in a high-speed network (for example, across a corporate or university campus).

- Metropolitan area networks (MANs) and wireless metropolitan area networks (WMANs) extend networks across a relatively large area, such as a city.

- Personal area networks (PANs) and wireless personal area networks (WPANs) connect an individual's electronic devices – such as laptop computers, smartphones, tablets, virtual personal assistants (for example, Amazon Alexa, Apple Siri, Google Assistant, and Microsoft Cortana), and wearable technology – to each other or to a larger network.

- Storage area networks (SANs) connect servers to a separate physical storage device (typically a disk array).

- Value-added networks (VANs) are a type of extranet that allows businesses within an industry to share information or integrate shared business processes.

- Virtual local-area networks (VLANs) segment broadcast domains in a LAN, typically into logical groups (such as business departments). VLANs are created on network switches.

- Wireless local-area networks (WLANs), also known as Wi-Fi networks, use wireless access points (APs) to connect wireless-enabled devices to a wired LAN.

- Wireless wide-area networks (WWANs) extend wireless network coverage over a large area, such as a region or country, typically using mobile cellular technology.

Domain Name System (DNS)

The Domain Name System (DNS) is a distributed, hierarchical internet database that maps fully qualified domain names (FQDNs) for computers, services, and other resources – such as a website address (also known as a uniform resource locator, or URL) – to IP addresses (discussed in Sections 2.2 and 2.2.1), similar to how a contact list on a smartphone maps the names of businesses and individuals to phone numbers. To create a new domain name that will be accessible via the internet, you must register your unique domain name with a domain name registrar, such as GoDaddy or Network Solutions, similar to listing a new phone number in a phone directory. DNS is critical to the operation of the internet.

Key Terms:

- The Domain Name System (DNS) is a hierarchical distributed database that maps the fully qualified domain name (FQDN) for computers, services, or any resource connected to the Internet or a private network to an IP address.

- A fully qualified domain name (FQDN) is the complete domain name for a specific computer, service, or resource connected to the internet or a private network.

- A domain name registrar is an organization that is accredited by a top-level domain (TLD) registry to manage domain name registrations.

- A root name server is the authoritative name server for a DNS root zone. Worldwide, 13 root name servers (actually 13 networks comprising hundreds of root name servers) are configured, named a.root-servers.net through m.root-servers.net. DNS servers are typically configured with a root hints file that contains the names and IP addresses of the root servers.

A host (such as a web browser on a desktop computer) on a network that needs to connect to another host (such as a web server on the internet) must first translate the name of the destination host from its URL to an IP address. The connecting host (the DNS client) sends a DNS request to the IP address of the DNS server that is specified in the network configuration of the DNS client. If the DNS server is authoritative for the destination domain, the DNS server resolves the IP address of the destination host and answers the DNS request from the DNS client. For example, you are attempting to connect to an intranet server on your internal network from the desktop computer in your office. If the DNS server address that is configured on your computer is an internal DNS server that is authoritative for your intranet domain, the DNS server resolves the IP address of the intranet server. Your computer then encapsulates the resolved destination IP address in the Hypertext Transfer Protocol (HTTP) or Hypertext Transfer Protocol Secure (HTTPS) request packets that are sent to the intranet server.

If a DNS server is not authoritative for the destination domain, for example, an internet website address, then the DNS server performs a recursive query (if it is configured to perform recursive queries) to obtain the IP address of the authoritative DNS server and sends the original DNS request to the authoritative DNS server. This process is a top-down process in which the DNS server first consults its root hints file and queries a root name server to identify the authoritative DNS server for the TLD (for example, .com) associated with the DNS query. The DNS server then queries the TLD server to identify the authoritative server for the specific domain that is being queried (for example, paloaltonetworks.com). This process continues until the authoritative server for the FQDN is identified and queried. The recursive DNS server then answers the original DNS client's request with the DNS information from the authoritative DNS server.
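The top-down walk from the root to the authoritative server can be modeled with a toy, in-memory hierarchy. Nothing here touches the network: the `SERVERS` dictionary, the server names, and the `www.example.com.` record are made-up illustration data, and `resolve` is a hypothetical helper rather than a real resolver API.

```python
# Each toy server either delegates a suffix to another server or holds records.
SERVERS = {
    "root":       {"delegations": {"com.": "tld-com"}, "records": {}},
    "tld-com":    {"delegations": {"example.com.": "ns-example"}, "records": {}},
    "ns-example": {"delegations": {},
                   "records": {"www.example.com.": "192.0.2.10"}},
}

def resolve(fqdn: str) -> tuple[str, list[str]]:
    """Follow delegations from the root; return (address, servers queried)."""
    server = "root"                        # start from the root hints
    path = [server]
    while True:
        zone = SERVERS[server]
        if fqdn in zone["records"]:        # this server is authoritative
            return zone["records"][fqdn], path
        referral = None
        for suffix, name_server in zone["delegations"].items():
            if fqdn == suffix or fqdn.endswith("." + suffix):
                referral = name_server     # next server down the hierarchy
        if referral is None:
            raise LookupError(f"no server found for {fqdn}")
        server = referral
        path.append(server)

address, path = resolve("www.example.com.")
print(address, "via", " -> ".join(path))
```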

The basic DNS record types are:

- A (IPv4) or AAAA (IPv6) (Address). Maps a domain or subdomain to an IP address or multiple IP addresses

- CNAME (Canonical Name). Maps a domain or subdomain to another hostname

- MX (Mail Exchanger). Specifies the hostname or hostnames of email servers for a domain

- PTR (Pointer). Points to a canonical hostname; commonly used for reverse DNS lookups that map an IP address to a host in a domain or subdomain

- SOA (Start of Authority). Specifies authoritative information about a DNS zone such as primary name server, email address of the domain administrator, and domain serial number

- NS (Name Server). Specifies an authoritative name server for a given domain or zone

- TXT (Text). Stores text-based information

Key Terms:

- A top-level domain (TLD) is the highest level domain in DNS, represented by the last part of an FQDN (for example, .com or .edu). The most commonly used TLDs are generic top-level domains (gTLDs) such as .com, .edu, .net, and .org, and country-code top-level domains (ccTLDs) such as .ca and .us.

- An authoritative DNS server is the system of record for a given domain.

- An intranet is a private network that provides information and resources – such as a company directory, human resources policies and forms, department or team files, and other internal information – to an organization's users. Like the internet, an intranet uses the HTTP and/or HTTPS protocols, but access to an intranet is typically restricted to an organization's internal users. Microsoft SharePoint is a popular example of intranet software.

- Hypertext Transfer Protocol (HTTP) is an application protocol used to transfer data between web servers and web browsers.

- Hypertext Transfer Protocol Secure (HTTPS) is a secure version of HTTP that uses Secure Sockets Layer (SSL) or Transport Layer Security (TLS) encryption.

- A recursive DNS query is performed (if the DNS server allows recursive queries) when a DNS server is not authoritative for a destination domain. The nonauthoritative DNS server obtains the IP address of the authoritative DNS server for the destination domain and sends the original DNS request to that server to be resolved.

Physical, Logical, and Virtual Addressing

Physical, logical, and virtual addressing in computer networks requires a basic understanding of decimal (base10), binary (base2), and hexadecimal (base16) numbering (see Table 2-1).

The decimal (base10) numbering system is, of course, what we all are taught in school. It comprises the numerals 0 through 9. After the number 9, we add a digit ("1") in the "tens" position and begin again at zero in the "ones" position, thereby creating the number 10. Humans use the decimal numbering system, quite literally, because we have ten fingers, so a base10 numbering system is easiest for humans to understand.

Table 2-1: Decimal, Hexadecimal, and Binary Notation

| Decimal | Hexadecimal | Binary |

| 0 | 0 | 0000 |

| 1 | 1 | 0001 |

| 2 | 2 | 0010 |

| 3 | 3 | 0011 |

| 4 | 4 | 0100 |

| 5 | 5 | 0101 |

| 6 | 6 | 0110 |

| 7 | 7 | 0111 |

| 8 | 8 | 1000 |

| 9 | 9 | 1001 |

| 10 | A | 1010 |

| 11 | B | 1011 |

| 12 | C | 1100 |

| 13 | D | 1101 |

| 14 | E | 1110 |

| 15 | F | 1111 |

A binary (base2) numbering system comprises only two digits – 1 ("on") and 0 ("off"). Binary numbering is used in computers and networking because they use electrical transistors (rather than fingers, like humans) to count. The basic function of a transistor is a gate – when electrical current is present, the gate is closed ("1" or "on"). When no electrical current is present, the gate is open ("0" or "off"). With only two digits, a binary numbering system increments to the next position more frequently than a decimal numbering system. For example, the decimal number one is represented in binary as "1," number two is represented as "10," number three is represented as "11," and number four is represented as "100."

A hexadecimal (base16) numbering system comprises 16 digits (0 through 9, and A through F). Hexadecimal numbering is used because it is more convenient to represent a byte (which consists of 8 bits) of data as two digits in hexadecimal, rather than eight digits in binary. The decimal numbers 0 through 9 are represented in hexadecimal as "0" through "9," respectively. However, the decimal number 10 is represented in hexadecimal as "A," the number 11 is represented as "B," the number 12 is represented as "C," the number 13 is represented as "D," the number 14 is represented as "E," and the number 15 is represented as "F." The number 16 then increments to the next numeric position, represented as "10."
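The conversions described above are easy to reproduce with Python's built-in `format` and `int` functions. The helper name `to_bases` is only an illustration.

```python
def to_bases(n: int) -> tuple[str, str]:
    """Return the binary and hexadecimal representations of a decimal number."""
    return format(n, "b"), format(n, "X")

for n in (1, 2, 3, 4, 10, 15, 16):
    binary, hexadecimal = to_bases(n)
    print(f"decimal {n:>2} = binary {binary:>5} = hexadecimal {hexadecimal}")

# One byte (8 bits) fits in exactly two hexadecimal digits.
byte = 0b10011101
print(format(byte, "08b"), "->", format(byte, "02x"))

# int() converts back when given the base of the input string.
print(int("1010", 2), int("A", 16))
```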

The physical address of a network device, known as a media access control (MAC) address (also referred to as a burned-in address [BIA] or hardware address), is used to forward traffic on a local network segment. The MAC address is a unique 48-bit identifier assigned to the network interface controller (NIC) of a device. If a device has multiple NICs, each NIC must have a unique MAC address. The MAC address is usually assigned by the device manufacturer and is stored in the device read-only memory (ROM) or firmware. MAC addresses are typically expressed in hexadecimal format with a colon or hyphen separating each 8-bit section. An example of a 48-bit MAC address is:

00:40:96:9d:68:16
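As a quick check of the format, the following sketch parses the example address into its six 8-bit sections and confirms that they add up to 48 bits. `parse_mac` is a hypothetical helper written for this illustration.

```python
def parse_mac(text: str) -> bytes:
    """Parse a colon- or hyphen-separated MAC address into 6 raw bytes."""
    sections = text.replace("-", ":").split(":")
    if len(sections) != 6 or any(len(s) != 2 for s in sections):
        raise ValueError(f"not a 48-bit MAC address: {text!r}")
    return bytes(int(s, 16) for s in sections)   # each section is one byte

mac = parse_mac("00:40:96:9d:68:16")
print(len(mac) * 8, "bits")                      # six 8-bit sections
print(list(mac))                                 # the sections in decimal
print(parse_mac("00-40-96-9D-68-16") == mac)     # hyphens and case don't matter
```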

The logical address of a network device, such as an IP address, is used to route traffic from one network to another. An IP address is a unique 32-bit or 128-bit (IPv4 and IPv6, respectively) address assigned to the NIC of a device. If a device has multiple NICs, each NIC may be assigned a unique IP address or multiple NICs may be assigned a virtual IP address to enable bandwidth aggregation or failover capabilities. IP addresses are statically or dynamically (most commonly using the Dynamic Host Configuration Protocol, or DHCP) assigned, typically by a network administrator or network service provider. IPv4 addresses are usually expressed in dotted decimal notation with a dot separating each decimal section (known as an octet). An example of an IPv4 address is:

192.168.0.1

IPv6 addresses are typically expressed in hexadecimal format (32 hexadecimal numbers grouped into eight blocks) with a colon separating each block of four hexadecimal digits (known as a hextet). An example of an IPv6 address is:

2001:0db8:0000:0000:0008:0800:200c:417a
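Python's standard `ipaddress` module can be used to confirm the sizes and groupings of the two example addresses. This is just an inspection sketch; the variable names are arbitrary.

```python
import ipaddress

v4 = ipaddress.ip_address("192.168.0.1")
v6 = ipaddress.ip_address("2001:0db8:0000:0000:0008:0800:200c:417a")

# Address length in bits for each IP version.
print(v4.version, v4.max_prefixlen)     # IPv4: 32 bits
print(v6.version, v6.max_prefixlen)     # IPv6: 128 bits

# An IPv4 address is four octets; the dotted decimal form shows each one.
octets = list(v4.packed)
print(octets)

# An IPv6 address is eight hextets of four hexadecimal digits each.
hextets = v6.exploded.split(":")
print(hextets)

# Either address is ultimately a single large integer.
print(int(v4))
```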

IPv4 and IPv6 addressing is explained further in Section 2.2.1.

The Address Resolution Protocol (ARP) translates a logical address, such as an IP address, to a physical MAC address. The Reverse Address Resolution Protocol (RARP) translates a physical MAC address to a logical address.

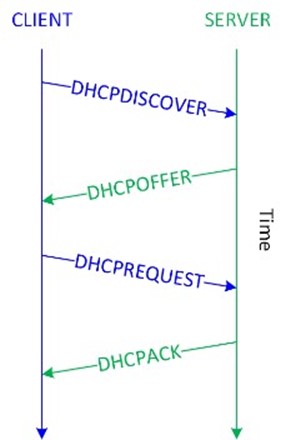

The Dynamic Host Configuration Protocol (DHCP) is a network management protocol used to dynamically assign IP addresses to devices that do not have a statically assigned (manually configured) IP address on a TCP/IP network. BOOTP is a similar network management protocol that is commonly used on UNIX and Linux TCP/IP networks. When a network-connected device that does not have a statically assigned IP address is powered on, the DHCP client software on the device broadcasts a DHCPDISCOVER message on UDP port 67. When a DHCP server on the same subnet (or a different subnet if a DHCP Helper or DHCP Relay Agent is configured) as the client receives the DHCPDISCOVER message, it reserves an IP address for the client and sends a DHCPOFFER message to the client on UDP port 68. The DHCPOFFER message contains the MAC address of the client, the IP address that is being offered, the subnet mask, the lease duration, and the IP address of the DHCP server that made the offer. When the client receives the DHCPOFFER, it broadcasts a DHCPREQUEST message on UDP port 67, requesting the IP address that was offered. A client may receive DHCPOFFER messages from multiple DHCP servers on a subnet, but can only accept one offer. When the DHCPREQUEST message is broadcast, the other DHCP servers that sent an offer that was not requested (in effect, accepted) in the DHCPREQUEST message will withdraw their offers. Finally, when the correct DHCP server receives the DHCPREQUEST message, it sends a DHCPACK (acknowledgment) message on UDP port 68 and the IP configuration process is completed (see Figure 2-1).

Figure 2-1: DHCP operation
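The four-message exchange described above can be sketched as a toy, in-memory simulation. This is only an illustration under simplifying assumptions: the `ToyDhcpServer` class, the address pools, the MAC address, and the lease duration are all hypothetical, and no real network traffic is sent.

```python
from dataclasses import dataclass, field

SERVER_PORT = 67  # UDP port for client -> server messages (DISCOVER, REQUEST)
CLIENT_PORT = 68  # UDP port for server -> client messages (OFFER, ACK)


@dataclass
class ToyDhcpServer:
    """Hypothetical in-memory DHCP server; not a real implementation."""
    server_ip: str
    pool: list                                    # free addresses to offer
    subnet_mask: str = "255.255.255.0"
    lease_seconds: int = 86400                    # assumed lease duration
    reserved: dict = field(default_factory=dict)  # MAC -> offered address
    leases: dict = field(default_factory=dict)    # MAC -> acknowledged address

    def on_discover(self, client_mac):
        # Reserve an address for the client and describe the offer.
        offered = self.pool.pop(0)
        self.reserved[client_mac] = offered
        return {"type": "DHCPOFFER", "dst_port": CLIENT_PORT,
                "client_mac": client_mac, "offered_ip": offered,
                "subnet_mask": self.subnet_mask,
                "lease_seconds": self.lease_seconds,
                "server_ip": self.server_ip}

    def on_request(self, client_mac, chosen_server_ip):
        offered = self.reserved.pop(client_mac)
        if chosen_server_ip != self.server_ip:
            # The client accepted another server's offer: withdraw ours.
            self.pool.insert(0, offered)
            return None
        self.leases[client_mac] = offered
        return {"type": "DHCPACK", "dst_port": CLIENT_PORT,
                "client_mac": client_mac, "leased_ip": offered}


mac = "00:11:22:33:44:55"
server_a = ToyDhcpServer("192.168.1.1", ["192.168.1.100", "192.168.1.101"])
server_b = ToyDhcpServer("192.168.1.2", ["192.168.1.200"])

# 1. DHCPDISCOVER is broadcast to UDP port 67; both servers answer with offers.
offers = [s.on_discover(mac) for s in (server_a, server_b)]
# 2. The client accepts one offer (here, simply the first) and broadcasts DHCPREQUEST.
chosen = offers[0]
# 3. Every server sees the request; only the chosen server replies with DHCPACK.
replies = [s.on_request(mac, chosen["server_ip"]) for s in (server_a, server_b)]
ack = next(r for r in replies if r is not None)
print(ack["type"], ack["leased_ip"])
```

In this sketch the second server returns its reserved address to its pool once it sees that the request names a different server, which mirrors the "withdraw their offers" step in the text.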

Network address translation (NAT) virtualizes IP addresses by mapping private, non-routable IP addresses (discussed in Section 2.2.1) that are assigned to internal network devices to public IP addresses when communication across the internet is required. NAT is commonly implemented on firewalls and routers to conserve public IP addresses.
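As a rough illustration of the mapping idea, the sketch below keeps a translation table in which several private hosts share one public address. Note the assumptions: it distinguishes flows by port number (a common NAT variant that the text does not describe in detail), and the `ToyNat` class, the addresses, and the starting port are hypothetical.

```python
import itertools


class ToyNat:
    """Hypothetical NAT table: many private hosts share one public address."""

    def __init__(self, public_ip, first_port=40000):
        self.public_ip = public_ip
        self._ports = itertools.count(first_port)  # next free public port
        self.outbound = {}  # (private_ip, private_port) -> public_port
        self.inbound = {}   # public_port -> (private_ip, private_port)

    def translate_out(self, private_ip, private_port):
        # Reuse an existing mapping, or allocate a new public port.
        key = (private_ip, private_port)
        if key not in self.outbound:
            port = next(self._ports)
            self.outbound[key] = port
            self.inbound[port] = key
        return self.public_ip, self.outbound[key]

    def translate_in(self, public_port):
        # Replies are mapped back to the internal host that opened the flow.
        return self.inbound[public_port]


nat = ToyNat("203.0.113.10")
a = nat.translate_out("192.168.1.20", 51000)
b = nat.translate_out("192.168.1.21", 51000)
print(a, b, nat.translate_in(a[1]))
```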

Key Terms:

- A media access control (MAC) address is a unique 48-bit or 64-bit identifier assigned to a network interface controller (NIC) for communications at the Data Link layer of the OSI model (discussed in Section 2.3.1).

- The Dynamic Host Configuration Protocol (DHCP) is a network management protocol that dynamically assigns (leases) IP addresses and other network configuration parameters (such as default gateway and Domain Name System [DNS] information) to devices on a network.

- A default gateway is a network device, such as a router or switch, to which an endpoint sends network traffic when a specific destination IP address is not specified by an application or service, or when the endpoint does not know how to reach a specified destination.

- The Domain Name System (DNS) is a decentralized, hierarchical directory service that maps IP addresses to domain names for computers, servers, and other resources on a network and the internet. DNS is analogous to a phone book for the internet.

- An octet is a group of 8 bits in a 32-bit IPv4 address.

- A hextet is a group of four 4-bit hexadecimal digits in a 128-bit IPv6 address.

- The Address Resolution Protocol (ARP) translates a logical address, such as an IP address, to a physical MAC address. The Reverse Address Resolution Protocol (RARP) translates a physical MAC address to a logical address.

- Network address translation (NAT) virtualizes IP addresses by mapping private, non-routable IP addresses assigned to internal network devices to public IP addresses.

IP addressing basics

Data packets are routed over a Transmission Control Protocol/Internet Protocol (TCP/IP) network using IP addressing information. IPv4, which is the most widely deployed version of IP, consists of a 32-bit logical IP address. The first four bits in an octet are known as the high-order bits (the first bit in the octet is referred to as the most significant bit); the last four bits in an octet are known as the low-order bits (the last bit in the octet is referred to as the least significant bit).

Each bit position represents its value (see Table 2-2) if the bit is "on" (1); otherwise, the bit's value is zero ("off" or 0).

Key Terms:

- The first four bits in a 32-bit IPv4 address octet are referred to as the high-order bits.

- The last four bits in a 32-bit IPv4 address octet are referred to as the low-order bits.

- The first bit in a 32-bit IPv4 address octet is referred to as the most significant bit.

- The last bit in a 32-bit IPv4 address octet is referred to as the least significant bit.

Table 2-2: Bit Position Values in an IPv4 Address

| High-order bits | | | | Low-order bits | | | |

| 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 |

Each octet contains an 8-bit number with a value of 0 to 255. Table 2-3 shows a partial list of octet values in binary notation.

Table 2-3: Binary Notation of Octet Values

| Decimal | Binary | Decimal | Binary | Decimal | Binary | Decimal | Binary |

| 255 | 1111 1111 | 200 | 1100 1000 | 128 | 1000 0000 | 8 | 0000 1000 |

| 254 | 1111 1110 | 192 | 1100 0000 | 120 | 0111 1000 | 7 | 0000 0111 |

| 253 | 1111 1101 | 180 | 1011 0100 | 110 | 0110 1110 | 6 | 0000 0110 |

| 252 | 1111 1100 | 172 | 1010 1100 | 100 | 0110 0100 | 5 | 0000 0101 |

| 251 | 1111 1011 | 170 | 1010 1010 | 96 | 0110 0000 | 4 | 0000 0100 |

| 250 | 1111 1010 | 160 | 1010 0000 | 90 | 0101 1010 | 3 | 0000 0011 |

| 249 | 1111 1001 | 150 | 1001 0110 | 64 | 0100 0000 | 2 | 0000 0010 |

| 248 | 1111 1000 | 140 | 1000 1100 | 32 | 0010 0000 | 1 | 0000 0001 |

| 224 | 1110 0000 | 130 | 1000 0010 | 16 | 0001 0000 | 0 | 0000 0000 |
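The bit position values in Table 2-2 and the binary notation in Table 2-3 can be checked with a few lines of Python. This is a minimal sketch; the function names are illustrative only.

```python
BIT_VALUES = [128, 64, 32, 16, 8, 4, 2, 1]  # position values from Table 2-2


def octet_to_binary(value):
    """Return an octet as 'hhhh llll' (high-order bits, then low-order bits)."""
    if not 0 <= value <= 255:
        raise ValueError("an octet must be between 0 and 255")
    bits = format(value, "08b")
    return f"{bits[:4]} {bits[4:]}"


def binary_to_octet(text):
    """Sum the position values of every bit that is 'on' (1)."""
    bits = text.replace(" ", "")
    return sum(value for value, bit in zip(BIT_VALUES, bits) if bit == "1")


for n in (255, 200, 172, 96, 7):
    print(n, octet_to_binary(n))
```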

The five different IPv4 address classes (indicated by the high-order bits) are shown in Table 2-4.

Table 2-4: IP Address Classes

| Class | Purpose | High-Order Bits | Address Range | Max. # of Hosts |

| A | Large networks | 0 | 1 to 126 | 16,777,214 |

| B | Medium-size networks | 10 | 128 to 191 | 65,534 |

| C | Small networks | 110 | 192 to 223 | 254 |

| D | Multicast | 1110 | 224 to 239 | ─ |

| E | Experimental | 1111 | 240 to 254 | ─ |
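The classification in Table 2-4 depends only on the high-order bits of the first octet, which the following sketch makes explicit. The function name is hypothetical, and the handling of first octets 0 and 127 (which fall outside the ranges in the table) is an assumption of this example: 0 is reported as reserved and 127 as the loopback range.

```python
def ipv4_class(address):
    """Classify a dotted-decimal IPv4 address by its first octet."""
    first_octet = int(address.split(".")[0])
    if not 0 <= first_octet <= 255:
        raise ValueError("first octet must be between 0 and 255")
    if first_octet == 0:
        return "reserved"   # not listed in Table 2-4
    if first_octet == 127:
        return "loopback"   # not listed in Table 2-4
    bits = format(first_octet, "08b")
    if bits.startswith("0"):
        return "A"
    if bits.startswith("10"):
        return "B"
    if bits.startswith("110"):
        return "C"
    if bits.startswith("1110"):
        return "D"
    return "E"              # remaining case: high-order bits 1111


for ip in ("10.1.2.3", "172.16.0.1", "192.168.1.1", "224.0.0.5", "240.0.0.1"):
    print(ip, ipv4_class(ip))
```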

The address range 127.0.0.1 to 127.255.255.255 is a loopback network used for testing and troubleshooting. Packets sent to a loopback (or localhost) address – such as 127.0.0.1 – are immediately routed back to the source device.

A subnet mask is a number that hides the network portion of an IPv4 address, leaving only the host portion of the IP address. The network portion of a subnet mask is represented by contiguous "on" (1) bits beginning with the most significant bit. For example, in the subnet mask 255.255.255.0, the first three octets represent the network portion and the last octet represents the host portion of an IP address. Recall that the decimal number 255 is represented in binary notation as 1111 1111 (refer back to Table 2-3).

Key Terms:

- A subnet mask is a number that hides the network portion of an IPv4 address, leaving only the host portion of the IP address.

The default (or standard) subnet masks for Class A, B, and C networks are:

- Class A: 255.0.0.0

- Class B: 255.255.0.0

- Class C: 255.255.255.0
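One way to see how a mask separates the two portions of an address is to apply it with a bitwise AND. The sketch below does this by hand; the helper names and the sample addresses are illustrative assumptions.

```python
def to_int(dotted):
    """Convert dotted-decimal notation to a 32-bit integer."""
    o = [int(part) for part in dotted.split(".")]
    return (o[0] << 24) | (o[1] << 16) | (o[2] << 8) | o[3]


def to_dotted(value):
    """Convert a 32-bit integer back to dotted-decimal notation."""
    return ".".join(str((value >> shift) & 0xFF) for shift in (24, 16, 8, 0))


def split_address(address, mask):
    """Return (network portion, host portion) of an IPv4 address."""
    addr, m = to_int(address), to_int(mask)
    network = addr & m                # keep the bits where the mask is 1
    host = addr & ~m & 0xFFFFFFFF     # keep the bits where the mask is 0
    return to_dotted(network), to_dotted(host)


print(split_address("192.168.1.37", "255.255.255.0"))
print(split_address("10.20.30.40", "255.0.0.0"))
```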

Several IPv4 address ranges are reserved for use in private networks and are not routable on the internet, including:

- 10.0.0.0–10.255.255.255 (Class A)

- 172.16.0.0–172.31.255.255 (Class B)

- 192.168.0.0–192.168.255.255 (Class C)
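The three ranges listed above can be written as CIDR blocks and tested with Python's standard `ipaddress` module. This is a small sketch; the function name is hypothetical, and it checks only these three ranges rather than every reserved block.

```python
import ipaddress

# The three private ranges from the list above, in CIDR form.
PRIVATE_RANGES = [
    ipaddress.ip_network("10.0.0.0/8"),      # 10.0.0.0 to 10.255.255.255
    ipaddress.ip_network("172.16.0.0/12"),   # 172.16.0.0 to 172.31.255.255
    ipaddress.ip_network("192.168.0.0/16"),  # 192.168.0.0 to 192.168.255.255
]


def in_listed_private_range(address):
    """Return True if the address falls in one of the three listed ranges."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in PRIVATE_RANGES)


for candidate in ("10.4.5.6", "172.31.255.255", "172.32.0.1", "8.8.8.8"):
    print(candidate, in_listed_private_range(candidate))
```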

The 32-bit address space of an IPv4 address limits the total number of unique public IP addresses to about 4.3 billion. The widespread use of NAT (discussed in Section 2.2) delayed the inevitable depletion of IPv4 addresses but, as of 2018, the pool of available IPv4 addresses that can be assigned to organizations has officially been depleted (a small pool of IPv4 addresses has been reserved by each regional internet registry to facilitate the transition to IPv6). IPv6, which uses a 128-bit address space (written in hexadecimal) providing about 3.4 x 10^38 (340 undecillion) unique IP addresses, was created to replace IPv4 when the IPv4 address space was exhausted.

IPv6 addresses consist of 32 hexadecimal numbers grouped into eight hextets of four hexadecimal digits, separated by a colon. A hexadecimal digit is represented by 4 bits (refer to Table 2-1), so each hextet is 16 bits (four 4-bit hexadecimal digits) and eight 16-bit hextets equals 128 bits.

An IPv6 address is further divided into two 64-bit segments: The first (also referred to as the "top" or "upper") 64 bits represent the network part of the address, and the last (also referred to as the "bottom" or "lower") 64 bits represent the node or interface part of the address. The network part is further subdivided into a 48-bit global network address and a 16-bit subnet. The node or interface part of the address is based on the MAC address (discussed in Section 2.2) of the node or interface.

The basic format for an IPv6 address is: xxxx:xxxx:xxxx:xxxx:xxxx:xxxx:xxxx:xxxx where x represents a hexadecimal digit (0–f).

This is an example of an IPv6 address: 2001:0db8:0000:0000:0008:0800:200c:417a
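The 48-bit, 16-bit, and 64-bit divisions described above can be pulled out of the example address with shifts and masks. This is a minimal sketch; the function and key names are hypothetical.

```python
import ipaddress


def split_ipv6(address):
    """Split an IPv6 address into the three fields described in the text."""
    value = int(ipaddress.IPv6Address(address))  # 128-bit integer
    network_part = value >> 64                   # upper 64 bits
    interface_id = value & (2**64 - 1)           # lower 64 bits
    global_prefix = network_part >> 16           # first 48 bits
    subnet_id = network_part & 0xFFFF            # next 16 bits
    return {
        "global_prefix": format(global_prefix, "012x"),
        "subnet_id": format(subnet_id, "04x"),
        "interface_id": format(interface_id, "016x"),
    }


parts = split_ipv6("2001:0db8:0000:0000:0008:0800:200c:417a")
print(parts)
```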

The IETF has defined several rules to simplify an IPv6 address:

- Leading zeroes in an individual hextet can be omitted, but each hextet must have at least one hexadecimal digit, except as noted in the next rule. Applying this rule to the previous example yields this result: 2001:db8:0:0:8:800:200c:417a.

- Two colons (::) can be used to represent one or more groups of 16 bits of zeros, and leading or trailing zeroes in an address; the :: can appear only once in an IPv6 address. Applying this rule to the previous example yields this result: 2001:db8::8:800:200c:417a.

- In mixed IPv4 and IPv6 environments, the form x:x:x:x:x:x:d.d.d.d can be used, in which x represents the six high-order 16-bit hextets of the address and d represents the four low-order 8-bit octets (in standard IPv4 notation) of the address. For example, 0db8:0:0:0:0:FFFF:129.144.52.38 is a valid IPv6 address. Application of the previous two rules to this example yields this result: db8::ffff:129.144.52.38.
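The simplification rules above can be checked with Python's standard `ipaddress` module, which parses the long, shortened, and mixed forms. This is offered as a quick verification sketch rather than as part of any IETF specification text.

```python
import ipaddress

addr = ipaddress.IPv6Address("2001:0db8:0000:0000:0008:0800:200c:417a")

# Full form with every leading zero written out.
print(addr.exploded)
# Shortest form: leading zeroes dropped and one run of zero hextets as "::".
print(addr.compressed)

# The form with only leading zeroes removed parses to the same address.
no_leading_zeroes = ipaddress.IPv6Address("2001:db8:0:0:8:800:200c:417a")
print(no_leading_zeroes == addr)

# Mixed notation: the last 32 bits are written as an IPv4 dotted quad.
mixed_long = ipaddress.IPv6Address("0db8:0:0:0:0:FFFF:129.144.52.38")
mixed_short = ipaddress.IPv6Address("db8::ffff:129.144.52.38")
print(mixed_long == mixed_short)
```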

IPv6 security features are specified in Request For Comments (RFC) 7112 and include techniques to prevent fragmentation exploits in IPv6 headers and implementation of Internet Protocol Security (IPsec, discussed in Section 2.6.4) at the Network layer of the OSI model (discussed in Section 2.3.1).

Introduction to subnetting

Subnetting is a technique used to divide a large network into smaller, multiple subnetworks by segmenting an IP address into two parts: the network and the host. Subnetting can be used to limit network traffic or limit the number of devices that are visible to, or can connect to, each other. Routers examine IP addresses and subnet values (called masks) and determine whether to forward packets between networks. With IP addressing, the subnet mask is a required element.

Key Terms:

- Subnetting is a technique used to divide a large network into smaller, multiple subnetworks.

For a Class C IPv4 address, there are 254 possible node (or host) addresses (2^8 or 256 potential addresses, but you lose two addresses for each network: one for the base network address and the other for the broadcast address). A typical Class C network uses a default 24-bit subnet mask (255.255.255.0). This subnet mask value identifies the network portion of an IPv4 address with the first three octets being all ones (11111111 in binary notation, 255 in decimal notation). The mask displays the last octet as zero (00000000 in binary notation). For a Class C IPv4 address with the default subnet mask, the last octet is where the node-specific values of the IPv4 address are assigned.

For example, in a network with an IPv4 address of 192.168.1.0 and a mask value of 255.255.255.0, the network portion of the address is 192.168.1 and there are 254 node addresses (192.168.1.1 through 192.168.1.254) available. Remember, the first address (192.168.1.0) is the base network and the last address (192.168.1.255) is the broadcast address.
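The same example can be reproduced with Python's standard `ipaddress` module, which accepts a network written with a dotted-decimal mask.

```python
import ipaddress

network = ipaddress.ip_network("192.168.1.0/255.255.255.0")
hosts = list(network.hosts())  # usable node addresses only

print("base network:", network.network_address)
print("broadcast:   ", network.broadcast_address)
print("node count:  ", len(hosts))
print("first, last: ", hosts[0], hosts[-1])
```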

Class A and Class B IPv4 addresses use smaller mask values and support larger numbers of nodes than Class C IPv4 addresses for their default address assignments. Class A networks use a default 8-bit (255.0.0.0) subnet mask, which provides a total of more than 16 million (256 x 256 x 256) available IPv4 node addresses. Class B networks use a default 16-bit (255.255.0.0) subnet mask, which provides a total of 65,534 (256 x 256, minus the network address and the broadcast address) available IPv4 node addresses.

Unlike subnetting, which divides an IPv4 address along an arbitrary (default) classful 8-bit boundary (8 bits for a Class A network, 16 bits for a Class B network, 24 bits for a Class C network), classless inter-domain routing (CIDR) allocates address space on any address bit boundary (known as variable-length subnet masking, or VLSM). For example, using CIDR, a Class A network could be assigned a 24-bit mask (255.255.255.0, instead of the default 8-bit 255.0.0.0 mask) to limit the subnet to only 254 usable addresses, or a 23-bit mask (255.255.254.0) to limit the subnet to 510 usable addresses (512 total, minus the network address and the broadcast address).
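The effect of moving the mask boundary can be tabulated with the standard `ipaddress` module. In this sketch the 10.x networks and the `usable_hosts` helper are illustrative choices; a 23-bit mask covers 512 addresses in total, 510 of which are usable once the network and broadcast addresses are set aside.

```python
import ipaddress


def usable_hosts(cidr):
    """Total addresses minus the network and broadcast addresses."""
    return ipaddress.ip_network(cidr).num_addresses - 2


for cidr in ("10.0.0.0/8", "10.1.2.0/24", "10.1.2.0/23"):
    net = ipaddress.ip_network(cidr)
    print(cidr, net.netmask, net.num_addresses, usable_hosts(cidr))
```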

CIDR is used to reduce the size of routing tables on internet routers by aggregating multiple contiguous network prefixes (known as supernetting) and also helped to slow the depletion of public IPv4 addresses (discussed in Section 2.2.1).
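Route aggregation can be illustrated with `ipaddress.collapse_addresses`, which merges contiguous prefixes into the smallest covering set. The four /24 networks below are an arbitrary example chosen for this sketch.

```python
import ipaddress

# Four contiguous /24 networks: 192.168.0.0 through 192.168.3.255.
contiguous = [ipaddress.ip_network(f"192.168.{i}.0/24") for i in range(4)]

# A router could advertise one aggregated prefix instead of four routes.
summary = list(ipaddress.collapse_addresses(contiguous))
print(summary)
```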

Key Terms:

- Classless inter-domain routing (CIDR) is a method for allocating IP addresses and IP routing that replaces classful IP addressing (for example, Class A, B, and C networks) with classless IP addressing.

- Variable-length subnet masking (VLSM) is a technique that enables IP address spaces to be divided into different sizes.

- Supernetting aggregates multiple contiguous smaller networks into a larger network to enable more efficient internet routing.

An IP address can be represented with its subnet mask value, using "netbit" or CIDR notation. A netbit value represents the number of ones in the subnet mask and is displayed after an IP address, separated by a forward slash. For example, 192.168.1.0/24 represents a subnet mask consisting of 24 ones:

11111111.11111111.11111111.00000000 (in binary notation) or

255.255.255.0 (in decimal notation)
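Converting a netbit value into its mask is a matter of setting that many leading bits to one. A minimal sketch, with a hypothetical function name:

```python
def prefix_to_mask(prefix_length):
    """Return (dotted-decimal mask, binary mask) for a netbit value."""
    if not 0 <= prefix_length <= 32:
        raise ValueError("prefix length must be between 0 and 32")
    # Shift ones in from the left, then trim the result to 32 bits.
    mask = (0xFFFFFFFF << (32 - prefix_length)) & 0xFFFFFFFF
    octets = [(mask >> shift) & 0xFF for shift in (24, 16, 8, 0)]
    dotted = ".".join(str(o) for o in octets)
    binary = ".".join(format(o, "08b") for o in octets)
    return dotted, binary


print(prefix_to_mask(24))
print(prefix_to_mask(23))
```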

Packet Encapsulation and Lifecycle

In a circuit-switched network, a dedicated physical circuit path is established, maintained, and terminated between the sender and receiver across a network for each communications session. Prior to the development of the internet, most communications networks, such as telephone company networks, were circuit-switched. As discussed in Section 2.1.1, the internet is a packet-switched network comprising hundreds of millions of routers and billions of servers and user endpoints. In a packet-switched network, devices share bandwidth on communications links to transport packets between a sender and receiver across a network. This type of network is more resilient to error and congestion than circuit-switched networks.

Key Terms:

- In a circuit-switched network, a dedicated physical circuit path is established, maintained, and terminated between the sender and receiver across a network for each communications session.

- In a packet-switched network, devices share bandwidth on communications links to transport packets between a sender and receiver across a network.

An application that needs to send data across the network (for example, from a server to a client computer) first creates a block of data and sends it to the TCP stack on the server. The TCP stack places the block of data into an output buffer on the server and determines the Maximum Segment Size (MSS) of individual TCP blocks (segments) permitted by the server operating system. The TCP stack then divides the data block into appropriately sized segments (for example, 1460 bytes), adds a TCP header to each segment, and sends the segments to the IP stack on the server.

The IP stack adds source (sender) and destination (receiver) IP addresses to the TCP segment (which is now called an IP packet) and notifies the server operating system that it has an outgoing message that is ready to be sent across the network. When the server operating system is ready, the IP packet is sent to the network interface card (NIC), which converts the IP packet to bits and sends the message across the network.

On the way to the destination computer, the packets typically traverse several network and security devices, such as switches, routers, and firewalls. At the destination, the encapsulation process described above is reversed.
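The segmentation step in this lifecycle can be sketched as follows. The 1460-byte MSS is the example value from the text; the 4,000-byte payload and the function name are assumptions made for illustration, and no headers are added here.

```python
MSS = 1460  # example Maximum Segment Size in bytes, taken from the text


def segment(data, mss=MSS):
    """Divide a block of application data into MSS-sized pieces."""
    return [data[i:i + mss] for i in range(0, len(data), mss)]


payload = bytes(4000)  # hypothetical 4,000-byte block of application data
segments = segment(payload)
print([len(s) for s in segments])
```

Only the last segment is shorter than the MSS, and joining the segments in order reproduces the original block.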

The OSI and TCP/IP models

The Open Systems Interconnection (OSI) and Transmission Control Protocol/Internet Protocol (TCP/IP) models define standard protocols for network communication and interoperability. Using a layered approach, the OSI and TCP/IP models:

- Clarify the general functions of communications processes

- Reduce complex networking processes into simpler sublayers and components

- Promote interoperability through standard interfaces

- Enable vendors to change individual features at a single layer rather than rebuilding the entire protocol stack

- Facilitate logical troubleshooting

Defined by the International Organization for Standardization (ISO – not an acronym, but the adopted organizational name from the Greek language, meaning "equal"), the OSI model consists of seven layers:

- Application (Layer 7 or L7). This layer identifies and establishes availability of communication partners, determines resource availability, and synchronizes communication. Protocols that function at the Application layer include:

- File Transfer Protocol (FTP). Used to copy files from one system to another on TCP ports 20 (the data port) and 21 (the control port)

- Hypertext Transfer Protocol (HTTP). Used for communication between web servers and web browsers on TCP port 80

- Hypertext Transfer Protocol Secure (HTTPS). Used for Secure Sockets Layer/Transport Layer Security (SSL/TLS) encrypted communications between web servers and web browsers on TCP port 443 (and other ports, such as 8443)

- Internet Message Access Protocol (IMAP). A store-and-forward electronic mail protocol that allows an email client to access, manage, and synchronize email on a remote mail server on TCP and UDP port 143

- Post Office Protocol Version 3 (POP3). An email retrieval protocol that allows an email client to access email on a remote mail server on TCP port 110

- Simple Mail Transfer Protocol (SMTP). Used to send and receive email across the internet on TCP/UDP port 25

- Simple Network Management Protocol (SNMP). Used to collect network information by polling stations and sending traps (or alerts) to a management station on TCP/UDP ports 161 (agent) and 162 (manager)

- Telnet. Provides terminal emulation for remote access to system resources on TCP/UDP port 23

- Presentation (Layer 6 or L6). This layer provides coding and conversion functions (such as data representation, character conversion, data compression, and data encryption) to ensure that data sent from the Application layer of one system is compatible with the Application layer of the receiving system. Protocols that function at the Presentation layer include:

- American Standard Code for Information Interchange (ASCII). A character-encoding scheme based on the English alphabet, consisting of 128 characters

- Extended Binary-Coded Decimal Interchange Code (EBCDIC). An 8-bit character-encoding scheme largely used on mainframe and mid-range computers

- Graphics Interchange Format (GIF). A bitmap image format that allows up to 256 colors and is suitable for images or logos (but not photographs)

- Joint Photographic Experts Group (JPEG). A photographic compression method used to store and transmit photographs

- Moving Picture Experts Group (MPEG). An audio and video compression method used to store and transmit audio and video files

- Session (Layer 5 or L5). This layer manages communication sessions (service requests and service responses) between networked systems, including connection establishment, data transfer, and connection release. Protocols that function at the Session layer include:

- Network File System (NFS). Facilitates transparent user access to remote resources on a UNIX-based TCP/IP network

- Remote procedure call (RPC). A client-server network redirection protocol

- Secure Shell (SSH). Establishes an encrypted tunnel between a client and server

- Session Initiation Protocol (SIP). An open signaling protocol standard for establishing, managing and terminating real-time communications — such as voice, video, and text — over large IP-based networks

- Transport (Layer 4 or L4). This layer provides transparent, reliable data transport and end-to-end transmission control. Specific Transport layer functions include:

- Flow control (managing data transmission between devices by ensuring that the transmitting device doesn't send more data than the receiving device can process)

- Multiplexing (enabling data from multiple applications to be simultaneously transmitted over a single physical link)

- Virtual circuit management (establishing, maintaining, and terminating virtual circuits)

- Error checking and recovery (detecting transmission errors and taking action to resolve any errors that occur, such as requesting that data be retransmitted)

TCP and UDP port numbers assigned to applications and services are defined at the Transport layer. Protocols that function at the Transport layer include:

- Transmission Control Protocol (TCP). A connection-oriented (a direct connection between network devices is established before data segments are transferred) protocol that provides reliable delivery (received segments are acknowledged and retransmission of missing or corrupted segments is requested) of data. TCP connections are established via a three-way handshake. The additional overhead associated with connection establishment, acknowledgment, and error correction means that TCP is generally slower than connectionless protocols such as User Datagram Protocol (UDP).

- User Datagram Protocol (UDP). A connectionless (a direct connection between network devices is not established before datagrams are transferred) protocol that provides best-effort delivery (received datagrams are not acknowledged and retransmission of missing or corrupted datagrams is not requested) of data. UDP has no overhead associated with connection establishment, acknowledgment, sequencing, or error-checking and recovery. UDP is ideal for data that requires fast delivery, as long as that data isn't sensitive to packet loss and doesn't need to be fragmented. Applications that use UDP include Domain Name System (DNS), Simple Network Management Protocol (SNMP), and streaming audio or video.

- Stream Control Transmission Protocol (SCTP). A message-oriented protocol (similar to UDP) that ensures reliable, in-sequence transport with congestion control (similar to TCP)

- Network (Layer 3 or L3). This layer provides routing and related functions that enable data to be transported between systems on the same network or on interconnected networks. Routing protocols (discussed in Section 2.1.3) are defined at this layer. Logical addressing of devices on the network is accomplished at this layer using routed protocols such as the Internet Protocol (IP). Routers operate at the Network layer of the OSI model.

- Data Link (Layer 2 or L2). This layer ensures that messages are delivered to the proper device across a physical network link. This layer also defines the networking protocol (for example, Ethernet) used to send and receive data between individual devices and formats messages from layers above into frames for transmission, handles point-to-point synchronization and error control, and can perform link encryption. Switches typically operate at Layer 2 of the OSI model (although multilayer switches that operate at different layers also exist). The Data Link layer is further divided into two sublayers:

- Logical Link Control (LLC). The LLC sublayer provides an interface for the MAC sublayer; manages the control, sequencing, and acknowledgment of frames being passed up to the Network layer or down to the Physical layer; and manages timing and flow control.

- Media access control (MAC). The MAC sublayer is responsible for framing and performs error control using a cyclic redundancy check (CRC), identifies MAC addresses (discussed in Section 2.2), and controls media access.

- Physical (Layer 1 or L1). This layer sends and receives bits across the network medium (cabling or wireless links) from one device to another. It specifies the electrical, mechanical, and functional requirements of the network, including network topology, cabling and connectors, and interface types, and the process for converting bits to electrical (or light) signals that can be transmitted across the physical medium.

Key Terms:

- A TCP segment is a protocol data unit (PDU) defined at the Transport layer of the OSI model.

- A protocol data unit (PDU) is a self-contained unit of data (consisting of user data or control information and network addressing).

- In TCP, a three-way handshake is used to establish a connection. For example, a PC initiates a connection with a server by sending a TCP SYN (Synchronize) packet. The server replies with a SYN-ACK (Synchronize-Acknowledgment) packet. Finally, the PC sends an ACK (sometimes written SYN-ACK-ACK) packet, acknowledging the server's acknowledgment, and data communication commences.

- A UDP datagram is a PDU defined at the Transport layer of the OSI model.

- Flow control monitors the flow of data between devices to ensure that a receiving device, which may not necessarily be operating at the same speed as the transmitting device, doesn't drop packets.

- A cyclic redundancy check (CRC) is a checksum used to create a message profile. The CRC is recalculated by the receiving device. If the recalculated CRC doesn't match the received CRC, the packet is dropped and a request to resend the packet is transmitted back to the device that sent the packet.
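The three-way handshake defined in the key terms above can be modeled as three messages. The sketch below adds one detail that the text does not cover: in TCP each side picks an initial sequence number, and each acknowledgment carries the other side's sequence number plus one. The function name and the sample numbers are hypothetical, and sequence-number wraparound is ignored.

```python
def three_way_handshake(client_isn, server_isn):
    """Return the SYN, SYN-ACK, and ACK messages as dictionaries."""
    # 1. The client sends SYN carrying its initial sequence number.
    syn = {"flags": "SYN", "seq": client_isn}
    # 2. The server sends its own sequence number and acknowledges the SYN.
    syn_ack = {"flags": "SYN-ACK", "seq": server_isn, "ack": syn["seq"] + 1}
    # 3. The client acknowledges the server's SYN; data transfer can begin.
    ack = {"flags": "ACK", "seq": syn["seq"] + 1, "ack": syn_ack["seq"] + 1}
    return [syn, syn_ack, ack]


for message in three_way_handshake(client_isn=1000, server_isn=5000):
    print(message)
```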

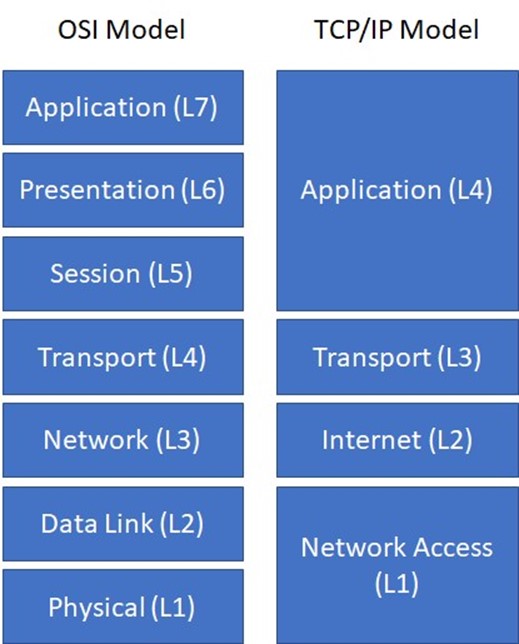

The TCP/IP model was originally developed by the U.S. Department of Defense (DoD) and actually preceded the OSI model. Whereas the OSI model is a theoretical model used to logically describe networking processes, the TCP/IP model defines actual networking requirements, for example, for frame construction. The TCP/IP model consists of four layers (see Figure 2-2):

- Application (Layer 4 or L4). This layer consists of network applications and processes, and it loosely corresponds to Layers 5 through 7 of the OSI model.

- Transport (Layer 3 or L3). This layer provides end-to-end delivery and it corresponds to Layer 4 of the OSI model.

- Internet (Layer 2 or L2). This layer defines the IP datagram and routing, and it corresponds to Layer 3 of the OSI model.

- Network Access (Layer 1 or L1). Also referred to as the Link layer, this layer contains routines for accessing physical networks and it corresponds to Layers 1 and 2 of the OSI model.

Figure 2-2: The OSI model and the TCP/IP model

Data encapsulation

In the OSI and TCP/IP models, data is passed from the highest layer (L7 in the OSI model, L4 in the TCP/IP model) downward through each layer to the lowest layer (L1 in the OSI model and TCP/IP model), and is then transmitted across the network medium to the destination node, where it is passed upward from the lowest layer to the highest layer. Each layer communicates only with the adjacent layer immediately above and below it. This communication is achieved through a process known as data encapsulation (or data hiding), which wraps protocol information from the layer immediately above in the data section of the layer immediately below.

Key Terms:

- Data encapsulation (or data hiding) wraps protocol information from the (OSI or TCP/IP) layer immediately above in the data section of the layer below.

A protocol data unit (PDU) describes a unit of data at a particular layer of a protocol. For example, in the OSI model, a Layer 1 PDU is known as a bit, a Layer 2 PDU is known as a frame, a Layer 3 PDU is known as a packet, and a Layer 4 PDU is known as a segment or datagram. When a client or server application sends data across a network, each layer adds a header (and, in the case of Layer 2 frames, a trailer) to the data it receives from the layer above as the data passes down through the protocol stack. On the receiving end, the headers (and trailers) are removed as the data passes up through the protocol stack to the receiving application.
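The wrapping and unwrapping of PDUs can be sketched with placeholder headers. The byte strings `TCP|`, `IP|`, `ETH|`, and `|FCS` below are hypothetical stand-ins chosen for readability; real headers and trailers are binary structures with many fields.

```python
TCP_HEADER = b"TCP|"   # placeholder Layer 4 header
IP_HEADER = b"IP|"     # placeholder Layer 3 header
ETH_HEADER = b"ETH|"   # placeholder Layer 2 header
ETH_TRAILER = b"|FCS"  # placeholder Layer 2 trailer


def encapsulate(app_data):
    """Wrap application data on its way down the stack."""
    segment = TCP_HEADER + app_data             # Layer 4 PDU: segment
    packet = IP_HEADER + segment                # Layer 3 PDU: packet
    frame = ETH_HEADER + packet + ETH_TRAILER   # Layer 2 PDU: frame
    return frame


def decapsulate(frame):
    """Strip each header (and the trailer) on the way back up the stack."""
    packet = frame[len(ETH_HEADER):-len(ETH_TRAILER)]
    segment = packet[len(IP_HEADER):]
    return segment[len(TCP_HEADER):]


frame = encapsulate(b"hello")
print(frame)
print(decapsulate(frame))
```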

Network Security Models

This section describes perimeter-based and Zero Trust network security models.

Perimeter-based network security strategy

Perimeter-based network security models date back to the early mainframe era (circa late 1950s), when large mainframe computers were located in physically secure "machine rooms" that could be accessed by only a relatively limited number of remote job entry (RJE) "dumb" terminals that were directly connected to the mainframe and also located in physically secure areas. Today's data centers are the modern equivalent of machine rooms, but perimeter-based physical security is no longer sufficient for several obvious, but important reasons:

- Mainframe computers predate the internet. In fact, mainframe computers predate ARPANET, which predates the internet. Today, an attacker uses the internet to remotely gain access, rather than to physically breach the data center perimeter.

- Data centers today are remotely accessed by millions of remote endpoint devices from anywhere and at any time. Unlike the RJEs of the mainframe era, modern endpoints (including mobile devices) are far more powerful than many of the early mainframe computers and are targets themselves.

- The primary value of the mainframe computer was its processing power. The relatively limited data that was produced was typically stored on near-line media, such as tape. Today, data is the target: it is stored online in data centers and in the cloud, and it is a high-value target for any attacker.

The primary issue with a perimeter-based network security strategy in which countermeasures are deployed at a handful of well-defined ingress and egress points to the network is that it relies on the assumption that everything on the internal network can be trusted. However, this assumption is no longer safe to make, given modern business conditions and computing environments where:

- Remote employees, mobile users, and cloud computing solutions blur the distinction between "internal" and "external."

- Wireless technologies, the proliferation of partner connections, and the need to support guest users introduce countless additional pathways into the network.

- Branch offices may be located in untrusted countries or regions.

- Insiders, whether intentionally malicious or just careless, may present a very real security threat.

Perimeter-based strategies fail to account for:

- The potential for sophisticated cyberthreats to penetrate perimeter defenses, in which case they would then have free passage on the internal network

- Scenarios where malicious users can gain access to the internal network and sensitive resources by using the stolen credentials of trusted users

- The reality that internal networks are rarely homogeneous, but instead include pockets of users and resources with inherently different levels of trust/sensitivity that should ideally be separated in any event (for example, research and development and financial systems versus print/file servers)

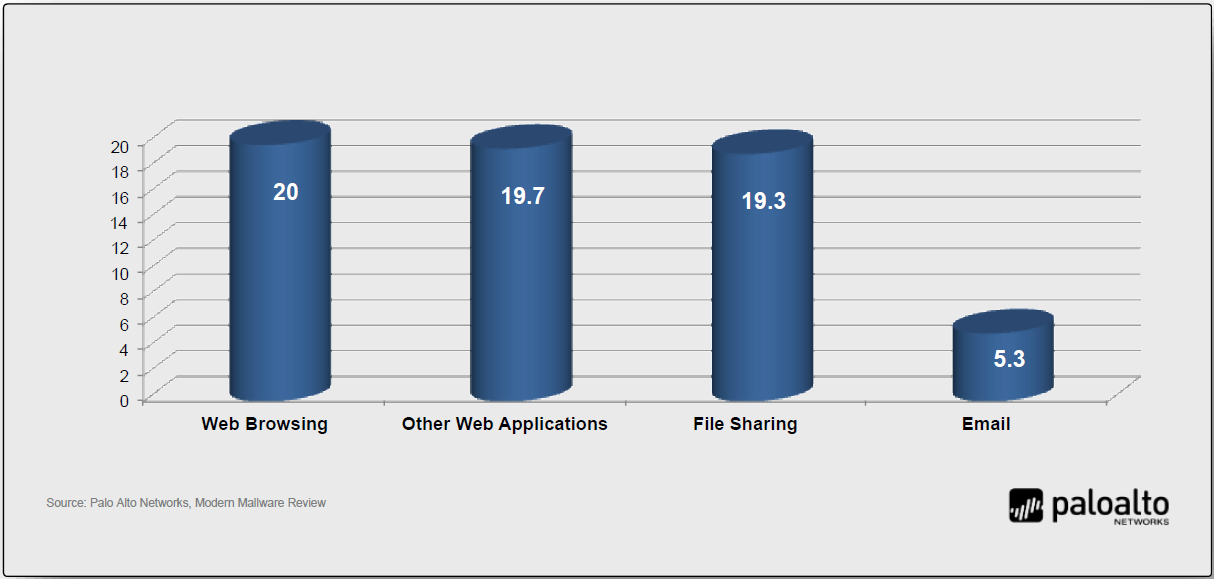

A broken trust model is not the only issue with perimeter-centric approaches to network security. Another contributing factor is that traditional security devices and technologies (such as port-based firewalls) commonly used to build network perimeters let too much unwanted traffic through. Typical shortcomings in this regard include the inability to:

- Definitively distinguish good applications from bad ones (which leads to overly permissive access control settings)

- Adequately account for encrypted application traffic

- Accurately identify and control users (regardless of where they're located or which devices they're using)

- Filter allowed traffic not only for known application-borne threats but also for unknown ones

The net result is that re-architecting defenses in a way that creates pervasive internal trust boundaries is, by itself, insufficient. You must also ensure that the devices and technologies used to implement these boundaries actually provide the visibility, control, and threat inspection capabilities needed to securely enable essential business applications while still thwarting modern malware, targeted attacks, and the unauthorized exfiltration of sensitive data.

Zero Trust security

Introduced by Forrester Research, the Zero Trust security model addresses some of the limitations of perimeter-based network security strategies by removing the assumption of trust from the equation. With Zero Trust, essential security capabilities are deployed in a way that provides policy enforcement and protection for all users, devices, applications, data resources, and the communications traffic between them, regardless of location.

In particular, with Zero Trust there is no default trust for any entity (including users, devices, applications, and packets), regardless of what it is and its location on or relative to the enterprise network. In addition, verifying that authorized entities are always doing only what they're allowed to do is no longer optional in a Zero Trust model; it is mandatory.

The implications for these two changes are, respectively:

- The need to establish trust boundaries that effectively compartmentalize different segments of the internal computing environment. The general idea is to move security functionality closer to the different pockets of resources that require protection. This way security can always be enforced regardless of the point of origin of associated communications traffic.

- The need for trust boundaries to do more than just initial authorization and access control enforcement. To "always verify" also requires ongoing monitoring and inspection of associated communications traffic for subversive activities (such as threats).

Benefits of implementing a Zero Trust network include:

- Clearly improved effectiveness in mitigating data loss through visibility into and safe enablement of applications, and through detection and prevention of cyberthreats

- Greater efficiency for achieving and maintaining compliance with security and privacy mandates, using trust boundaries to segment sensitive applications, systems, and data

- Improved ability to securely enable transformative IT initiatives, such as user mobility, BYOD/BYOA, infrastructure virtualization, and cloud computing

- Lower total cost of ownership (TCO) with a consolidated and fully integrated security operating platform, rather than a disparate array of siloed, purpose-built security point products

Core Zero Trust design principles

The core Zero Trust principles that define the operational objectives of a Zero Trust implementation include:

- Ensure that all resources are accessed securely, regardless of location. This principle suggests not only the need for multiple trust boundaries but also increased use of secure access for communication to or from resources, even when sessions are confined to the "internal" network. It also means ensuring that the only devices allowed access to the network have the correct status and settings, have an approved VPN client and proper passcodes, and are not running malware.

- Adopt a least privilege strategy and strictly enforce access control. The goal is to minimize allowed access to resources as a means to reduce the pathways available for malware and attackers to gain unauthorized access, and subsequently to spread laterally and/or exfiltrate sensitive data.

- Inspect and log all traffic. This principle reiterates the need to "always verify" while also reinforcing that adequate protection requires more than just strict enforcement of access control. Close and continuous attention must also be given to exactly what "allowed" applications are actually doing, and the only way to accomplish these goals is to inspect the content for threats.

Key Terms:

- The principle of least privilege in network security requires that only the permission or access rights necessary to perform an authorized task are granted.
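The least privilege and "inspect and log all traffic" principles can be illustrated with a minimal Python sketch. It assumes a hypothetical allow list keyed on user group, application, and resource; the rule entries, names, and the `authorize` helper are invented for illustration and do not represent any particular product's policy format. Anything without an explicit rule is denied, and every decision is logged whether it is allowed or not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One explicitly granted access right (all fields are illustrative)."""
    user_group: str
    application: str
    resource: str


# Hypothetical allow list: only what an authorized task needs is granted.
ALLOW_RULES = {
    Rule("finance", "sql", "cardholder-db"),
    Rule("engineering", "ssh", "build-servers"),
}

# Every decision is recorded, allowed or denied.
audit_log = []


def authorize(user_group, application, resource):
    """Return True only if an explicit rule grants the request (default deny)."""
    allowed = Rule(user_group, application, resource) in ALLOW_RULES
    decision = "allow" if allowed else "deny"
    audit_log.append((user_group, application, resource, decision))
    return allowed


if __name__ == "__main__":
    print(authorize("finance", "sql", "cardholder-db"))
    print(authorize("engineering", "sql", "cardholder-db"))
    print(audit_log)
```

In this sketch the engineering group is refused access to the cardholder database because no rule grants it, not because a rule forbids it. That is the default-deny posture the least privilege principle implies.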

Zero Trust conceptual architecture

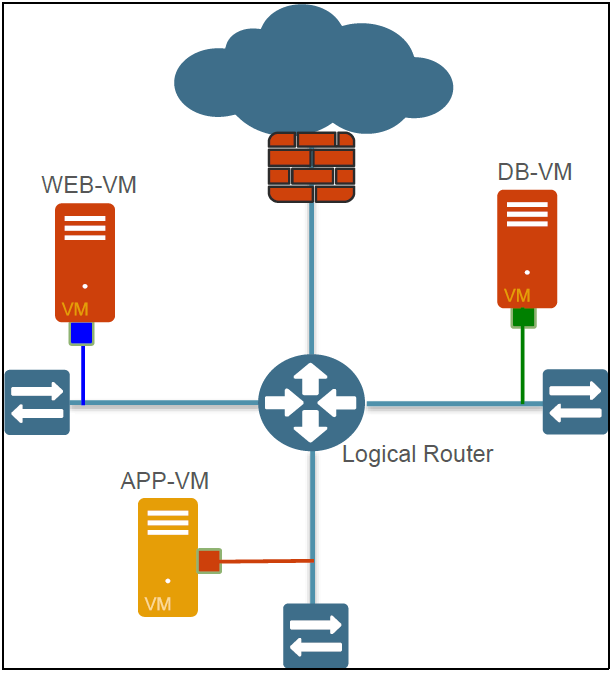

The main components of a Zero Trust conceptual architecture (shown in Figure 2-3) include:

- Zero Trust Segmentation Platform. The Zero Trust Segmentation Platform is referred to as a network segmentation gateway by Forrester Research. It is the component used to define internal trust boundaries. That is, it provides the majority of the security functionality needed to deliver on the Zero Trust operational objectives, including the ability to:

  - Enable secure network access

  - Granularly control traffic flow to and from resources

  - Continuously monitor allowed sessions for any threat activity

Although Figure 2-3 depicts the Zero Trust Segmentation Platform as a single component in a single physical location, in practice – because of performance, scalability, and physical limitations – an effective implementation is more likely to entail multiple instances distributed throughout an organization's network. The solution is also designated as a "platform" to reflect that it is an aggregation of multiple distinct (and potentially distributed) security technologies that operate as part of a holistic threat protection framework to reduce the attack surface and correlate information about threats that are found.

- Trust zones. Forrester Research refers to a trust zone as a micro core and perimeter (MCAP). A trust zone is a distinct pocket of infrastructure where the member resources not only operate at the same trust level but also share similar functionality.

Sharing functionality, such as protocols and types of transactions, is necessary because it minimizes the number of allowed pathways into and out of a given zone and, in turn, the potential for malicious insiders and other types of threats to gain unauthorized access to sensitive resources.

Examples of trust zones shown in Figure 2-3 include the user (or campus) zone, a wireless zone for guest access, a cardholder data zone, database and application zones for multi-tier services, and a zone for public-facing web applications.

Remember, too, that a trust zone is not intended to be a "pocket of trust" where systems (and therefore threats) within the zone can communicate freely and directly with each other. For a full Zero Trust implementation, the network would be configured to ensure that all communications traffic — including traffic between devices in the same zone — is intermediated by the corresponding Zero Trust Segmentation Platform.

- Management infrastructure. Centralized management capabilities are crucial to enabling efficient administration and ongoing monitoring, particularly for implementations involving multiple distributed Zero Trust Segmentation Platforms. A data acquisition network also provides a convenient way to supplement the native monitoring and analysis capabilities for a Zero Trust Segmentation Platform. Session logs that have been forwarded to a data acquisition network can then be processed by any number of out-of-band analysis tools and technologies intended, for example, to further enhance network visibility, detect unknown threats, or support compliance reporting.

Figure 2-3: Zero Trust conceptual architecture
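The point that a trust zone is not a "pocket of trust" can be sketched in Python. The example assumes a hypothetical mapping of hosts to zones and a hypothetical set of permitted zone-to-zone flows; the addresses, zone names, and `flow_permitted` helper are illustrative only. Traffic between two hosts in the same zone is evaluated against the same explicit flow list as any other traffic, so it is denied unless a rule allows it.

```python
# Hypothetical host-to-zone assignments (addresses are invented).
ZONE_OF = {
    "10.1.0.5": "user",
    "10.1.0.6": "user",
    "10.2.0.10": "app",
    "10.3.0.20": "database",
}

# Explicitly permitted flows: (source zone, destination zone, protocol).
# There is deliberately no ("user", "user", ...) entry, so hosts in the
# user zone cannot talk to each other directly.
PERMITTED_FLOWS = {
    ("user", "app", "https"),
    ("app", "database", "sql"),
}


def flow_permitted(src_ip, dst_ip, protocol):
    """Allow a flow only if both hosts are known and the zone pair is listed."""
    src_zone = ZONE_OF.get(src_ip)
    dst_zone = ZONE_OF.get(dst_ip)
    if src_zone is None or dst_zone is None:
        return False  # unknown hosts get no default trust
    return (src_zone, dst_zone, protocol) in PERMITTED_FLOWS


if __name__ == "__main__":
    print(flow_permitted("10.1.0.5", "10.2.0.10", "https"))  # user to app
    print(flow_permitted("10.1.0.5", "10.1.0.6", "smb"))     # same zone
    print(flow_permitted("10.1.0.5", "10.3.0.20", "sql"))    # skips the app tier
```

Because each zone shares a narrow set of protocols, the permitted-flow list stays short, which is the reason the text gives for grouping resources with similar functionality.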

Key Zero Trust criteria and capabilities

The core of any Zero Trust network security architecture is the Zero Trust Segmentation Platform, so you must choose the correct solution. Key criteria and capabilities to consider when selecting a Zero Trust Segmentation Platform include:

- Secure access. Consistent secure IPsec and SSL VPN connectivity is provided for all employees, partners, customers, and guests wherever they're located (for example, at remote or branch offices, on the local network, or over the internet). Policies to determine which users and devices can access sensitive applications and data can be defined based on application, user, content, device, and device state.

- Inspection of all traffic. Application identification accurately identifies and classifies all traffic, regardless of ports, protocols, and evasive tactics such as port hopping or encryption. Application identification eliminates methods that malware may use to hide from detection and provides complete context into applications, associated content, and threats.

- Least privilege access control. The combination of application, user, and content identification delivers a positive control model that allows organizations to control interactions with resources based on an extensive range of business-relevant attributes, including the specific application and individual functions being used, user and group identity, and the specific types or pieces of data being accessed (such as credit card or Social Security numbers). The result is truly granular access control that safely enables the correct applications for the correct sets of users while automatically preventing unwanted, unauthorized, and potentially harmful traffic from gaining access to the network.

- Cyberthreat protection. A combination of anti-malware, intrusion prevention, and cyberthreat prevention technologies provides comprehensive protection against both known and unknown threats, including threats on mobile devices. Support for a closed-loop, highly integrated defense also ensures that inline enforcement devices and other components in the threat protection framework are automatically updated.

- Coverage for all security domains. Virtual and hardware appliances establish consistent and cost-effective trust boundaries throughout an organization's entire network, including in remote or branch offices, for mobile users, at the internet perimeter, in the cloud, at ingress points throughout the data center, and for individual areas wherever they might exist.

Implementing a Zero Trust design

Implementation of a Zero Trust network security model doesn't require a major overhaul of an organization's network and security infrastructure. A Zero Trust design architecture can be implemented in a way that requires only incremental modifications to the existing network and is completely transparent to your users. Advantages of such a flexible, non-disruptive deployment approach include minimizing the potential impact on operations and being able to spread the required investment and work effort over time.

To get started, you can configure a Zero Trust Segmentation Platform in listen-only mode to obtain a detailed picture of traffic flows throughout the network, including where, when, and the extent to which specific users are using specific applications and data resources.
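As a rough illustration of what a listen-only pass might yield, the following Python sketch aggregates observed sessions by user, application, and destination. The record layout, host names, and `summarize_flows` helper are assumptions made for this example; a real platform would export its own log format, and would typically include timestamps to answer the "when" question as well.

```python
from collections import Counter

# Hypothetical session records from a listen-only deployment:
# (user, application, destination, bytes transferred). All values are invented.
SESSIONS = [
    ("alice", "web-browsing", "crm.example.internal", 1200),
    ("alice", "web-browsing", "crm.example.internal", 800),
    ("bob", "ssh", "build01.example.internal", 300),
    ("alice", "sql", "cardholder-db.example.internal", 5000),
]


def summarize_flows(sessions):
    """Count sessions and total bytes for each (user, application, destination)."""
    session_counts = Counter()
    byte_totals = Counter()
    for user, application, destination, nbytes in sessions:
        flow = (user, application, destination)
        session_counts[flow] += 1
        byte_totals[flow] += nbytes
    return session_counts, byte_totals


if __name__ == "__main__":
    counts, totals = summarize_flows(SESSIONS)
    # List the busiest flows first; these inform where trust boundaries go.
    for flow, n in counts.most_common():
        print(flow, n, totals[flow])
```

A summary like this shows which users actually reach which resources, which is the input needed to define trust zones and write allow rules without disrupting legitimate traffic.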

Once you have a detailed understanding of the network traffic flows in the environment, the next step is to define trust zones and incrementally establish corresponding trust boundaries based on relative risk and/or sensitivity of the data involved:

- Deploy devices in appropriate locations to establish internal trust boundaries for defined trust zones

- Configure the appropriate enforcement and inspection policies to effectively put each trust boundary "online"

Next, you can progressively establish trust zones and boundaries for other segments of the computing environment based on their relative degree of risk. Examples of where secure trust zones can be established are:

- IT management systems and networks (where a successful breach could lead to compromise of the entire network)

- Partner resources and connections (business-to-business, or B2B)

- High-profile, customer-facing resources and connections (business-to-consumer, or B2C)

- Branch offices in risky countries or regions, followed by all other branch offices

- Guest access networks (both wireless and wired)

- Campus networks

Zero Trust principles and concepts need to be implemented at major access points to the internet. You will have to replace or augment legacy network security devices with a Zero Trust Segmentation Platform at this deployment stage to gain all of the requisite capabilities and benefits of a Zero Trust security model.

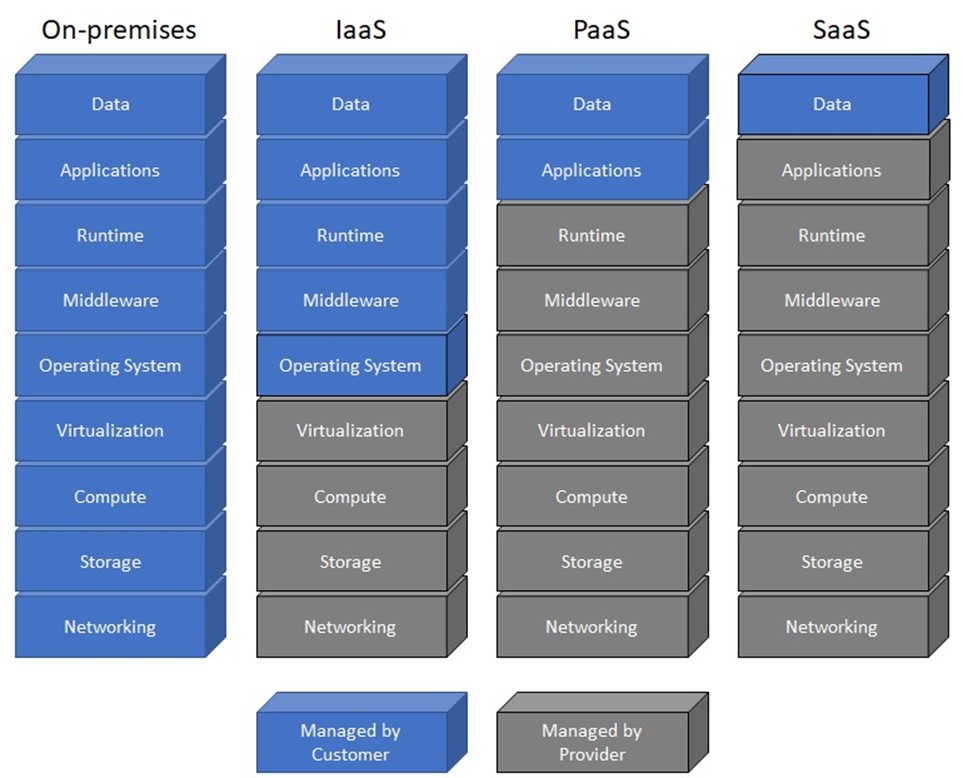

Cloud and Data Center Security

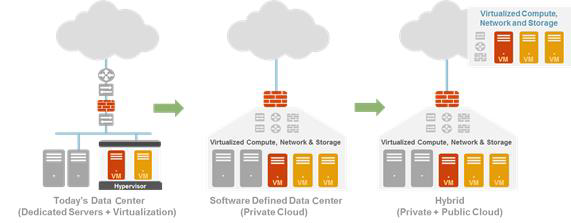

Data centers are rapidly evolving from a traditional, closed environment with static, hardware-based computing resources to one in which traditional and cloud computing technologies are mixed (see Figure 2-4).

Figure 2-4: Data centers are evolving to include a mix of hardware and cloud computing technologies.

The benefit of moving toward a cloud computing model – private, public, or hybrid – is that it improves operational efficiencies and lowers capital expenditures:

- Optimizes existing hardware resources. Instead of using a "one server, one application" model, you can run multiple virtual applications on a single physical server, which means that organizations can leverage their existing hardware infrastructure by running more applications within the same system, provided there are sufficient compute and memory resources on the system.

- Reduces data center costs. Reduction of the server hardware "box" count not only reduces the physical infrastructure real estate but also reduces data center costs for power, cooling, and rack space, among others.

- Increases operational flexibility. Through the dynamic nature of virtual machine (VM) provisioning, applications can be delivered more quickly than they can through the traditional method of purchasing hardware, "racking/stacking," cabling, and so on. This operational flexibility helps improve the agility of the IT organization.

- Maximizes efficiency of data center resources. Because applications can experience asynchronous or bursty demand loads, virtualization provides a more efficient way to address resource contention issues and maximize server use. It also provides a better way to address server maintenance and backup challenges. For example, IT staff can migrate VMs to other virtualized servers or hypervisors while performing hardware or software upgrades.
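The consolidation idea above, running several workloads on one physical server "provided there are sufficient compute and memory resources," can be sketched as a simple first-fit placement in Python. The host capacities, VM sizes, and `place_vms` helper are hypothetical, and the sketch assumes no CPU or memory overcommitment, which real hypervisors often permit.

```python
def place_vms(vms, host_capacity):
    """First-fit placement of VMs onto hosts.

    vms: list of (name, vcpus, mem_gb) tuples.
    host_capacity: dict of host name -> (vcpus, mem_gb).
    Returns a dict of VM name -> host name, or None if no host has room.
    """
    # Track remaining capacity per host as a mutable [vcpus, mem_gb] pair.
    free = {host: list(cap) for host, cap in host_capacity.items()}
    placement = {}
    for name, vcpus, mem_gb in vms:
        placement[name] = None
        for host, (free_cpu, free_mem) in free.items():
            # A VM fits only if both compute and memory are sufficient.
            if vcpus <= free_cpu and mem_gb <= free_mem:
                free[host][0] -= vcpus
                free[host][1] -= mem_gb
                placement[name] = host
                break
    return placement


if __name__ == "__main__":
    hosts = {"host-a": (16, 64), "host-b": (8, 32)}  # invented capacities
    vms = [("web", 4, 8), ("db", 8, 48), ("cache", 4, 16), ("batch", 8, 32)]
    print(place_vms(vms, hosts))
```

With these invented numbers, three of the four workloads share two physical servers, while the fourth is left unplaced because neither host has enough remaining capacity. The same check is what makes it possible to migrate VMs off a host before maintenance: the remaining hosts must have room for them.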

Cloud computing depends on virtualization