Implementing Virtualization in the Small Environment

What Is the Technology Behind Virtualization?

Virtualization. Even if you're not technology savvy, you've likely heard of its concepts and perhaps even a few of its products. Virtualization is a big and obvious play in enterprise environments. Its promise of shrinking your data center footprint, reducing power and cooling costs, and enhancing the workflow of your business makes it a perfect fit for large environments.

But small businesses and small environments needn't be left out in the cold. These oft‐overlooked places, where no‐ or low‐cost technologies are a necessity and administrators must take broad responsibility rather than specialize, stand to gain as well. Yet with so much of the information in the market today focused on the needs of large environments, small businesses have a hard time understanding virtualization's technology, let alone justifying a move to it.

That is the reason for this guide. The goal of this guide is to illuminate the technologies, the cost savings, and the workflow improvements that small businesses and environments will see with virtualization. By asking and answering a series of four important questions, this guide will walk you through the four most critical hurdles that you must clear to be successful in your virtualization implementation.

As the individual in charge of your company's technology decisions, you have probably asked yourself these same four questions:

- What is the technology behind virtualization? With the media's level of hype surrounding virtualization, an uninformed person could think it would solve every IT problem. Obviously this isn't true, but what exactly is virtualization and what can it do? Chapter 1 of this guide will discuss those technologies and what virtualization is really all about.

- What business benefits will I recognize from implementing virtualization? Understanding this technology is great, but the technology must provide a direct benefit to the small business to be of value. Chapter 2 of this guide will discuss the hard and soft benefits that small businesses and environments can immediately see by virtualizing servers.

- What do I need to get started with virtualization? Following an understanding of virtualization's benefits, small businesses next want to know how to get started. Virtualization comes in many no‐cost and for‐cost flavors, and can be implemented in innumerable configurations. Chapter 3 will discuss common first steps for small environments as well as the trigger points for investing in for‐cost solutions.

- What are the best practices in implementing small environment virtualization? Virtualization is no longer a nascent technology. Because it has been available for many years, a common set of best practices for its implementation has been developed. Following these best practices will help ensure that your implementation provides the greatest value to your business.

A Product‐Neutral Approach

There are many virtualization products available on the market today, each with its own set of benefits and drawbacks, features and gotchas. With this reality in mind, this guide will take a product‐neutral approach to presenting the technologies associated with virtualization. The goal of this guide is to empower you with the information you need to make smart decisions about how to improve your computing efficiency with virtualization technology.

What Is Virtualization?

In today's IT conversations, the question is really no longer "Should I virtualize my servers?" but "When can I do it?" Although systems virtualization has technically been around since 1967 with its start in IBM mainframes, its incorporation into Intel x86 systems started in the late 1990s. Since that time, virtualization has matured quickly to become a major component both in the server room and on individual desktops.

As such, virtualization is not new technology. Although some vendors have recently introduced new products that fit under virtualization's banner, this technology has been around for a long time. Because of this long history, virtualization and its products today are mature solutions that are trusted by enterprises worldwide. That trust extends to even the most critical of server workloads.

Layers of Abstraction

At its most basic, virtualization is the abstraction of computer resources. This abstraction is put into place because it enables a logical separation between a resource and how that resource is used. Consider the address book on your telephone, which provides an excellent example of how a layer of abstraction works. When you enter a name into that address book, you assign it a telephone number. You do so because it is operationally easier to work with a person's name than with their individual phone number. Abstracting that person's number beneath their name makes it easier and more efficient to work with the telephone system.

Another benefit of abstraction is the ability to relocate an underlying resource without affecting how you access it at the top level. With your telephone address book, it is possible to change a person's underlying phone number without changing their name. As a result, a change to that person's phone number doesn't have to change your process for calling them.
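The address book idea can be sketched in a few lines of Python. This is only an illustration of the indirection described above: the contact name, the phone numbers, and the `call` function are all made up for the example.

```python
# Hypothetical address book: the name is the stable handle you work with,
# and the phone number is the underlying resource that may change.
address_book = {"Alice": "555-0100"}


def call(name: str) -> str:
    """Resolve a name to its current number, then 'dial' it."""
    number = address_book[name]
    return f"Dialing {number} for {name}"


before = call("Alice")

# The underlying number is changed; the process for calling stays the same.
address_book["Alice"] = "555-0199"
after = call("Alice")

print(before)
print(after)
```

The caller never handles the number directly, so changing it requires no change to how the call is placed.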

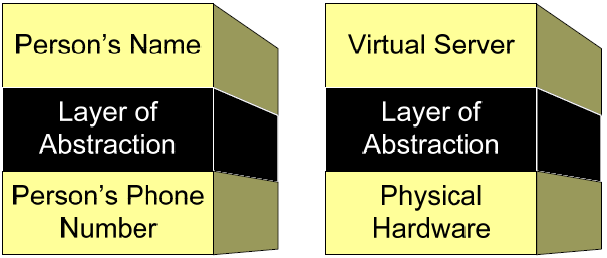

With virtualization, this abstraction exists between two "layers" of a computer system. There are many virtualization architectures available today, with the differences in each having to do with where that layer is positioned. This guide will discuss the kind of virtualization commonly called hardware virtualization or systems virtualization. In this type of virtualization, everything that makes up a computer's operating system (OS), installed applications, and data are abstracted away from its physical hardware to create a "virtual" server. Figure 1.1 shows an example of how this setup looks in comparison to the earlier phone number example.

Figure 1.1: Virtualization involves adding layers of abstraction to resources.

The Hypervisor

For virtualized computers, that layer of abstraction is handled through the use of a hypervisor. This hypervisor is a thin layer of code that is installed directly on top of a server's physical hardware. "Below" itself, the hypervisor's code interfaces with the hardware devices that make up the physical server. At the same time, the hypervisor presents a uniform interface "above" itself, upon which virtual machines can be installed. The job of the hypervisor is to intercept hardware resource requests from residing virtual machines and translate those requests into something understandable by the physical hardware. The reverse holds true as well: Requests from physical hardware that are destined for a virtual machine are translated by the hypervisor to an emulated device driver the virtual machine can understand.

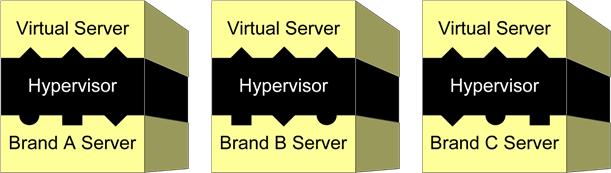

As you can see in Figure 1.2, no matter what hardware composition is present on the physical machine, each residing virtual server sees the same set of emulated hardware because that hardware is exposed "on top" of the hypervisor.

Figure 1.2: The hypervisor abstracts each server's unique hardware to create a uniform platform for virtual servers.

The benefit of this abstraction is that the computer's OS and applications no longer directly rely on a specific set of physical hardware. A virtual machine can be run atop essentially any physical hardware, effectively decoupling hardware from software. If a piece of physical hardware experiences a problem, the processing of that server instance can be manually or automatically relocated to different hardware. If Brand A of a server is no longer available for purchase or parts, it is now trivial to relocate the virtual machine to Brand B. In every case, this movement requires no configuration change to the virtual machine itself.
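As a rough illustration of that decoupling, the following Python sketch models a hypervisor that presents one uniform "virtual NIC" call while translating it for two different physical devices. Every class and method name here is hypothetical, and real hypervisors do this work at a far lower level; the sketch only shows the shape of the idea.

```python
class VendorANic:
    """Hypothetical physical NIC with its own vendor-specific call."""

    def tx_frame(self, payload: bytes) -> str:
        return f"vendor-a transmitted {len(payload)} bytes"


class VendorBNic:
    """A different hypothetical NIC with a differently named call."""

    def push_packet(self, data: bytes) -> str:
        return f"vendor-b transmitted {len(data)} bytes"


class Hypervisor:
    """Presents the same emulated device no matter what hardware is below."""

    def __init__(self, physical_nic):
        self._nic = physical_nic

    def virtual_nic_send(self, payload: bytes) -> str:
        # Translate the uniform request into what the physical device expects.
        if isinstance(self._nic, VendorANic):
            return self._nic.tx_frame(payload)
        if isinstance(self._nic, VendorBNic):
            return self._nic.push_packet(payload)
        raise TypeError("no driver for this physical device")


class VirtualMachine:
    def __init__(self, name: str):
        self.name = name

    def send(self, host: Hypervisor, payload: bytes) -> str:
        # The guest only ever talks to the emulated device.
        return host.virtual_nic_send(payload)


vm = VirtualMachine("file-server")
host_a = Hypervisor(VendorANic())
host_b = Hypervisor(VendorBNic())

result_a = vm.send(host_a, b"hello")
result_b = vm.send(host_b, b"hello")
print(result_a)
print(result_b)
```

The virtual machine's code is identical on both hosts; only the hypervisor knows which physical device it is driving.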

Hardware Independence with Virtual Servers

This introduction of hypervisors into the traditional data center enables levels of systems commonality never before seen by IT. Whereas physical servers are often quite different from each other in terms of device and hardware composition, virtual servers operate with functionally the same hardware. When every server in your environment runs with the same hardware, you have fewer drivers to deal with, fewer conflicts to plan for, and a much more predictable environment to administer.

A result of this commonality is greater flexibility with the positioning of server instances. Consider the situation in which a critical server experiences a problem. In the worst of cases, it can be necessary to relocate that server's OS and applications to a new piece of hardware. You might want to do so because the service that server is running is highly critical to your business, and its extended downtime costs you money.

If that server is a physical server, this task is exceptionally complex and is generally possible only when the two servers have the exact same hardware. This requirement presents a problem because many environments use servers from multiple vendors or product generations, and keeping exact spares on‐hand to support this need is expensive and wasteful. As a result, a server's hardware problem can directly impact the functionality of your critical business services.

With virtual servers, a hardware problem is far less of a problem. Because virtual servers are decoupled from their physical hosts, they tend to see a greater level of inherent resiliency. Should a virtual server's host experience a problem, moving the virtual server to a new host involves little more than a file copy. This process can be invoked by an administrator when the problem occurs, or in some cases, can occur automatically when virtual monitoring software recognizes the problem. In either case, the hypervisor ensures that a uniform platform exists for virtual machines no matter what kind of server it is installed on.

Virtualization Reduces Your Hardware Footprint

Virtual machines are also superior to physical machines because more than one virtual machine can run atop a single host. Because virtualization eliminates the direct connection between server instance and physical hardware, the hypervisor can support the running of multiple virtual machines atop a single host. This ability to consolidate multiple physical machines onto a single virtual host provides substantial cost savings while increasing the efficient use of existing hardware. In essence, with virtualization, you'll actually use more of your purchased server hardware.

Why is this true? On average, many Windows servers rarely use more than 5 to 7% of their available processor resources. They are often overloaded with RAM, sometimes containing 4×, 8×, or more memory than is actually needed to carry out their assigned mission. This massive over‐engineering of computer hardware has been common in IT because the rate of improvement in server hardware performance, along with the accompanying reductions in cost, has outpaced the needs of software. It also happens because of the standardized hardware configurations available for purchase through the major server vendors. As a result, many non‐virtualized servers today spend much of their time idly flipping ones and zeroes as they wait for their next instruction.

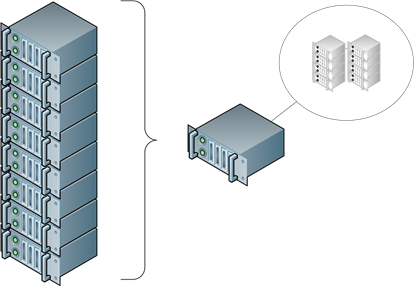

Yet with your small business, you likely want to get the most out of those costly server purchases. With virtualization, another major job of the hypervisor is to schedule hardware resource requests between virtual machines on the same host. Thus, a large number of underutilized physical computers can be easily consolidated onto a single virtual host and run concurrently.

The result is that the number of physical servers you need to run your business goes down significantly. This reduction in your total number of physical servers means less equipment to manage, to power, and to keep cool, all of which directly reduces your overall costs. Figure 1.3 shows a representative example of how this reduction might change the look of your server racks.

Figure 1.3: Virtualization consolidates many underutilized physical machines onto a single virtual host.
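To make the consolidation arithmetic concrete, here is a minimal back‐of‐the‐envelope sketch in Python. It assumes each virtual host has the same processor capacity as the servers being consolidated, and it looks at processor utilization only, ignoring RAM, disk, and network. The function name and the 60% target utilization are assumptions chosen for the example, not recommendations from any vendor.

```python
import math


def hosts_needed(cpu_utilizations, target_utilization=0.6):
    """Estimate how many virtual hosts are needed by total CPU demand.

    cpu_utilizations: fraction of one server's CPU each workload uses.
    target_utilization: assumed ceiling for host CPU use, leaving headroom.
    """
    total_demand = sum(cpu_utilizations)
    return max(1, math.ceil(total_demand / target_utilization))


# Eight physical servers, each using 5 to 7% of its processor.
small_office = [0.05, 0.07, 0.06, 0.05, 0.07, 0.06, 0.05, 0.07]
print(hosts_needed(small_office))

# Twenty servers at 7% each.
print(hosts_needed([0.07] * 20))
```

Under these assumptions the eight lightly used servers fit on one host, and the twenty servers fit on three. A real sizing exercise would also account for memory and peak load, which is why the performance monitoring discussed next matters.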

Virtual Consolidation Requires Performance Management

Obviously, with the consolidation of many server instances onto fewer physical servers, there is the potential to simply go too far. When too many virtual machines are collocated on the same host, each finds itself fighting for needed resources. The result is that the performance of every virtual machine goes down.

One critical skill that gains priority in virtualized environments is the monitoring and management of host and virtual server performance. Your virtual platform software should include the necessary tools to allow you to watch for and correct performance‐related problems. This guide will talk more about best practices for performance management in Chapter 4.

Virtual Disks Enjoy Special Benefits

One mechanism that enables this flexibility with virtual servers is in the way their hard disks are presented. Inside a traditional physical server's hard disk are stored the thousands of files and folders required for that server instance. Those thousands of files and folders are responsible for the OS, any installed applications, and even the data that the server works with in fulfilling its mission.

The OS that makes up a virtual server still requires each of those files to be available, and from the perspective of the virtual server itself, those files are individually accessible. However, from the perspective of the virtual host, all the files and folders on that server's disk drive are encapsulated into a single file. This single file—typically one per disk volume—bestows unique capabilities on the virtual server:

- File relocation. Since the entire composition of a virtual machine is encapsulated into a single disk file, relocating a server from one host to another requires little more than moving that file to a new host. Virtual platforms often have a small handful of additional files that store configuration information about the virtual machine itself. Yet every file and folder that makes up that server is effectively encapsulated into that single disk file per mounted volume.

- File copy replication. Making the previous feature even more useful is the ability to copy that single file to create a new one. Completing this process creates an exact replica of the original server that can be hosted elsewhere in the environment. This capability makes it easy for administrators to create additional servers as necessary. Entire server template libraries can be created for commonly needed servers, drastically reducing the amount of time required to build new servers when needed.

- File snapshots. Today's modern file systems and virtualization platforms include the ability to "snapshot" the server's disk file as well. This snapshotting process effectively creates a second disk file that is connected to the first. When this process is initiated, all changes to the original disk file are instead written to the second disk file. The virtualization platform combines both the original and "delta" disk files to create and process the virtual server. The benefit here is that a snapshot of that virtual server's state can be created at any point. Changes made to that virtual server after the snapshot are stored in the delta file. If those changes are later no longer desired, the administrator simply "rolls back" the server to its pre‐snapshot state, eliminating the deltas. This feature enables administrators to protect a server's state from the installation of a poorly developed patch or application. It allows administrators the flexibility to work with a server knowing that any changes can be immediately rolled back if mistakes are made or can be merged with the server's "main" disk file if successful.

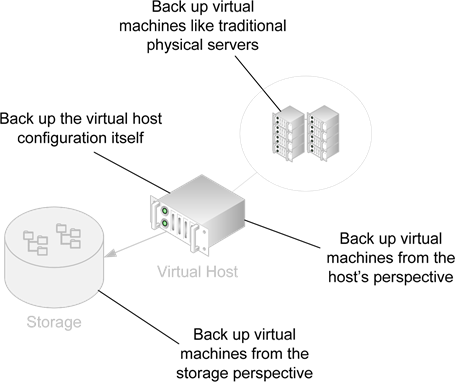

- Single‐file backup and guaranteed restore. Traditional backups with physical servers have historically been an area of concern with IT administrators. With thousands of files that make up a typical Windows server, the loss of only a single file in a regular backup can prevent the successful emergency restore of that server. Making this problem worse, backup software can sometimes miss files because they are locked or in use. The combination of these two problems makes the successful restore of a failed server a less‐than‐guaranteed situation. With virtual servers, however, backing up the entire server requires backing up only its single disk file. This encapsulation of a server's state onto a single disk file goes far towards ensuring its successful restore.

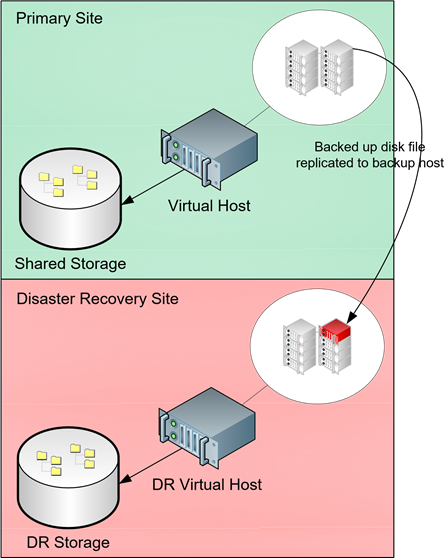

- Inexpensive disaster recovery. Lastly, disaster recovery has historically been out of reach for small environments due to the operational costs and complexities of managing a secondary off‐site data center containing duplicate servers and other hardware. Virtual servers and their disk files bring inexpensive and operationally feasible disaster recovery to even the smallest of businesses. Two things are needed: the offsite replication of backed‐up virtual server disk files, and a set of servers at the backup site that can power on those virtual servers after a disaster. Because of the hypervisor, those servers needn't be the exact same hardware as what is installed at your primary site.
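The snapshot mechanism described in the list above can be illustrated with a toy model. The Python sketch below treats a disk as a dictionary of blocks and a snapshot as a second "delta" dictionary that captures later writes. The class and method names are hypothetical, and real virtual disk formats are considerably more involved.

```python
class SnapshotDisk:
    """Toy virtual disk: a base 'disk file' plus an optional delta file."""

    def __init__(self, blocks):
        self.base = dict(blocks)
        self.delta = None  # None means no snapshot is active

    def snapshot(self):
        # From now on, writes go to the delta instead of the base.
        self.delta = {}

    def write(self, block_id, data):
        target = self.base if self.delta is None else self.delta
        target[block_id] = data

    def read(self, block_id):
        # The platform combines both files: the delta wins where it has data.
        if self.delta is not None and block_id in self.delta:
            return self.delta[block_id]
        return self.base[block_id]

    def roll_back(self):
        # Discard every change made since the snapshot.
        self.delta = None

    def merge(self):
        # Keep the changes by folding the delta into the main disk file.
        if self.delta is not None:
            self.base.update(self.delta)
            self.delta = None


disk = SnapshotDisk({0: "os", 1: "app-v1"})

disk.snapshot()
disk.write(1, "app-v2-bad-patch")
patched = disk.read(1)
disk.roll_back()
restored = disk.read(1)

disk.snapshot()
disk.write(1, "app-v2")
disk.merge()
merged = disk.read(1)

print(patched, restored, merged)
```

Rolling back is cheap because the base file was never modified; merging is the step that makes the changes permanent.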

Virtualization Enables Cost‐Effective Role Isolation

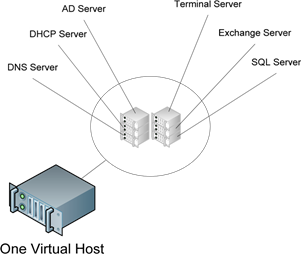

A common architectural decision in many of the smallest environments is to operate numerous server roles atop a single server. These services can be infrastructure services such as DNS, DHCP, or Active Directory (AD) domain services, or in some cases, can be user services such as SQL, application services, or email servers like Microsoft Exchange.

This assignment of multiple roles per server is actually in conflict with the best practices for Windows server architecture design. Best practices suggest that each server role or application should be installed to its own individual server. The reasons for this role isolation are many:

- Isolated roles are less likely to conflict with each other

- The loss of one server will not negatively impact the processing of other services

- Patching, update, and administrative requirements grow less complex when roles are isolated

- Lowered complexity means a reduction in the chance for an outage

The problem is that small businesses and environments are often forced into multiple roles per server because of limited funding. Many small businesses simply cannot afford the costs associated with purchasing individual servers for each needed service. This situation is especially problematic when considering the low resource‐utilization nature of many infrastructure services. Although role isolation is a good idea, most infrastructure services use very little of a server's processing power, making their consolidation appear to make more sense for the small environment.

Figure 1.4: On the left is a physical server with consolidated roles. The right shows the same server as a virtual host supporting role isolation.

Figure 1.4 shows how the addition of virtualization often changes the outcome of this decision. Incorporating virtualization into the small environment offers an opportunity to address this conundrum, due to two major factors:

- Low‐use infrastructure services tend to be excellent candidates for virtualization. Infrastructure services such as those discussed earlier tend to require very few resources in order to accomplish their stated missions. For example, in very small domains, AD domain services requires very little processor power and can be operated atop a server configured with as little as one gigabyte of RAM. This level of service consumes only a slight percentage of a server's total available resources. As such, virtualizing multiple critical but low‐needs services such as these provides a way to efficiently isolate each into its own virtual server.

- Special virtualization licensing from Microsoft extends the value of each physical license. With the release of Windows Server 2003 R2 in 2005, Microsoft updated the terms of its licensing agreements. These new terms added licensing benefits for certain editions of its server OS. Specifically, a company is entitled to install and license an additional four virtual instances for every purchased license of Windows Server 2003 R2 Enterprise Edition. For Windows Server 2003 R2 Datacenter Edition, an unlimited number of additional virtual instances can be installed and licensed. This count of additional licenses is related to concurrently running instances, giving a business the option to install—but not run—an unlimited number of non‐running instances. With the release of Windows Server 2008, Microsoft changed the terms again to allow one additional virtual instance when purchasing Windows Server 2008 Standard Edition.

The combination of virtualization's consolidation capabilities with Microsoft's licensing benefits now enables even small businesses to build the right level of role isolation into their networks. By building role isolation per best practices, small businesses and environments can further enhance the resiliency of their servers and services.

P2V Converts Physical Servers to Virtual Servers

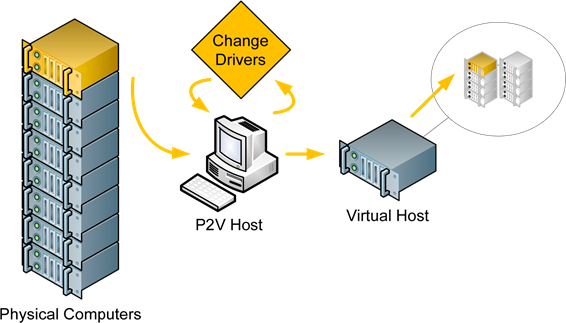

All of this discussion about moving to virtualization is incomplete without a nod to the tools that enable the direct conversion of physical servers to virtual ones. Moving your environment from physical to virtual needn't require the wholesale reinstallation and rebuild of every server in your environment. Rather, virtualization platform software typically includes a set of tools that convert physical servers to virtual servers. These tools are generically referred to as P2V tools.

This P2V process is very similar to the processes used to image and rapidly deploy desktops and laptops. Where it differs is in the end result. Instead of rapidly deploying an image to a new piece of physical hardware, the result of a P2V conversion is the creation of a virtual server's disk file. The P2V process is also different in that its processes automatically inject the proper device driver software into the imaged virtual server. This injection is required so that the server can correctly interoperate with the virtual platform and its hypervisor. These tools are particularly useful in that they can convert physical servers without requiring them to be powered down. Most P2V software available today can complete a full conversion while the source machine remains online and operational.

Early P2V software varied widely in capabilities and feature sets. However, today's P2V software has become a relative commodity between vendors. As such, the capabilities of one virtualization platform vendor's P2V software and another's are fairly similar. That said, there remain two areas of difference. The first has to do with the administrative activities that wrap around the P2V process. These relate to scheduling of the P2V process, P2V agent distribution, the automatic mounting of converted servers into the virtualization environment, and scripting exposure for custom actions.

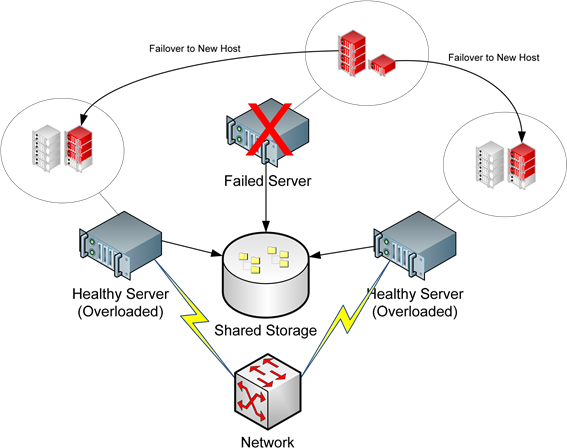

Motioning Automates Load Balancing & Failover

Earlier, this chapter discussed how small businesses and environments—just like large enterprises—cannot function if their services are not up and operational. Lacking those services, even the small business stands to lose money, as they cannot meet the needs of their customers. To that end, it was also discussed how virtualization enables a virtual server to be relocated from one host to another in the case of a host problem.

The generic industry term for the automated move of virtual machines from one host to another is motioning. There are four common ways in which motioning can occur with a virtual machine:

- This process can be invoked by an administrator to relocate virtual servers off a potentially failing host.

- It can be invoked to move running virtual machines off of a host prior to an upgrade that involves downtime on that host.

- It can automatically relocate and restart all virtual machines onto new hosts after a complete host failure.

- It can be used as a performance management tool to load‐balance virtual servers across available physical hosts to ensure the best possible performance.
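The failover case in the list above can be sketched as a simple placement decision. The Python below is a minimal illustration, not how any particular platform works: it takes the virtual machines from a failed host and restarts each on whichever surviving host currently carries the least load. The host names, virtual machine names, and load figures (percent of one host's CPU) are all hypothetical.

```python
def relocate(placement, vm_loads, failed_host):
    """Move VMs off failed_host onto the least-loaded surviving hosts.

    placement: dict mapping host name to a list of VM names.
    vm_loads: dict mapping VM name to its load (percent of one host's CPU).
    Returns a new placement dict that excludes the failed host.
    """
    survivors = {h: list(vms) for h, vms in placement.items() if h != failed_host}
    if not survivors:
        raise RuntimeError("no surviving host available")

    totals = {h: sum(vm_loads[v] for v in vms) for h, vms in survivors.items()}

    # Place the heaviest displaced VM first, each onto the lightest host.
    displaced = sorted(placement.get(failed_host, []),
                       key=lambda v: vm_loads[v], reverse=True)
    for vm in displaced:
        target = min(totals, key=totals.get)
        survivors[target].append(vm)
        totals[target] += vm_loads[vm]
    return survivors


placement = {"host1": ["dns", "dhcp"], "host2": ["mail"], "host3": ["sql"]}
vm_loads = {"dns": 20, "dhcp": 15, "mail": 40, "sql": 30}

new_placement = relocate(placement, vm_loads, "host1")
print(new_placement)
```

The same "lightest host first" rule could also serve as a crude load‐balancing policy; production platforms weigh many more factors, such as memory, storage access, and affinity rules.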

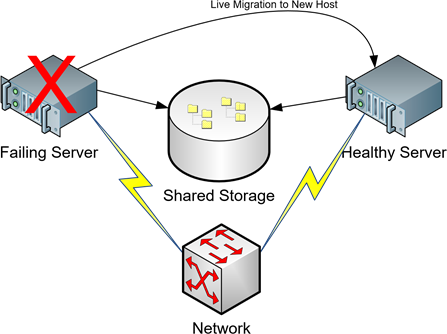

Different virtualization platforms enable motioning through different mechanisms, and each requires its own set of technologies for this automated relocation to take place. Essentially, all virtual platforms can relocate a virtual server through a traditional file copy when that server is powered off. However, some provide the ability to relocate that virtual server while it remains powered on. This process is often generically referred to as live migration, and it requires more supporting technology than a traditional file copy does. Let's take a look at the components that are commonly required in order to enable this added reliability for your virtual servers:

- Supporting virtual platform. First and foremost, your virtual platform must support the live migration capability. Important to note here is that the features necessary for accomplishing a live migration often require an add‐on cost to the base cost of the virtual platform.

- Flexible networking. Your networking infrastructure must support the correct configuration and features necessary to recognize a server has been moved from one network port to another and to quickly re‐converge. The networking protocols necessary to enable this automated reconfiguration work with the virtual platform to handle the change and are often a feature available only in business‐class networking devices.

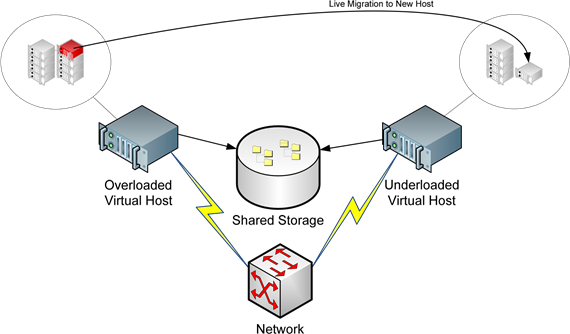

- Shared storage. Lastly, some form of shared storage is usually required for the storage of virtual machines. This requirement is commonly fulfilled through storage technologies such as iSCSI, Fibre Channel, NFS, and others, all of which have the necessary capabilities to support multiple, simultaneous host access. Although the relocation of a virtual server while it is powered off usually involves moving its disk files as well, most live migration technologies only relocate the processing of the virtual machine. The virtual machine's disk files do not actually move during the relocation. Figure 1.5 shows a graphical example of how this process works. You'll see in this figure how the processing of the virtual machine changes hosts but the virtual machine's disk files do not.

Figure 1.5: With live migration, when a host is failing or load needs rebalancing, virtual machine processing can be automatically relocated to a new host without server downtime.

Live Migration with Storage Relocation

Although most live migration technologies do not relocate server disk files during a migration, some virtualization platforms are beginning to include this as a feature. This dual migration of server processing and disk file relocation can be a boon for environments that want to maintain the uptime of their servers while moving their disk files to new locations.

High‐end motioning technologies all involve add‐on costs to virtualization environments, yet they enable the highest levels of resiliency and load balancing for your virtual servers. Thus, using them in your environment will involve a tradeoff of cost versus capabilities.

When considering a virtualization solution, factor in your business' tolerance for downtime. Due to the nature of their business, some small environments can tolerate the loss of even a critical server for a few hours up to a day or more. Others cannot tolerate downtime for even a few minutes.

What Will This Technology Cost?

It can cost as much as…nothing. Virtualization platforms exist today with prices that range from freeware to very expensive. The key differences between platforms are in the type and scalability of features that layer on top of the basic capabilities outlined in this chapter.

The basic ability to install a hypervisor to a virtual host and begin running virtual machines is today considered a commodity technology from the perspective of price. Essentially, every virtualization software vendor today provides the basic capability to run virtual servers atop a hypervisor for no cost. Other no‐cost features include:

- The ability to remotely access the virtual server's console over the network

- The ability to enact machine‐level changes to that machine, such as power on, power off, reboot, and others

- The ability to perform basic snapshotting

- The ability to perform basic virtual server backup and restore of entire virtual servers at once

These capabilities enable small businesses and environments with light needs to quickly move to virtualization with very little or no initial capital investment. For many small businesses and environments, the capabilities available with virtualization's free software options will suffice. What is missing from these free tools, however, is the additional management functionality and high‐end features that may become a necessity down the road.

It's More Than Just Software

It is important to note that with the more robust classes of virtualization software, there are more costs to the organization than just the software itself. When an organization decides that it needs more advanced capabilities, additional hardware such as servers, storage, networking, and cabling will likely be necessary as well. This additional hardware can substantially increase the cost associated with making the jump to high‐end virtualization. That being said, substantial additional benefits are gained with those additional costs.

Will My Existing Servers Be Supported?

In all likelihood, yes. It is true that many virtualization platforms require the use of recent hardware features on servers that support virtualization extensions directly on the hardware itself. However, not all virtualization platforms have these requirements. Many no‐cost virtualization solutions can be run atop essentially any server available today.

It is, however, important to temper the previous statement with a caution. Virtualization is all about performance. As such, the specified hardware requirements that are necessitated by many high‐end virtualization solutions are in place to ensure the highest level of performance. From a technical perspective, virtualization solutions of the type focused on in this guide can be broken down into two major classes:

- Type‐1. Type‐1 virtualization solutions as a whole are the most equivalent to what has been discussed in this chapter so far. These solutions leverage a hypervisor that itself is installed directly atop physical hardware. Type‐1 virtualization solutions can and often do require specialized hardware, yet they enjoy the highest levels of performance. This is due to the hypervisor's extremely "thin" code as well as its positioning directly between virtual servers and hardware.

- Type‐2. Type‐2 virtualization solutions actually install as applications on top of an existing OS. These solutions leverage the existing OS and its integrations into hardware resources. The hypervisor for these types of virtualization solutions is functionally equivalent to what has been discussed in this chapter thus far. However, because of the added OS "layer" beneath the hypervisor, these solutions suffer from lowered levels of performance.

Your existing servers—even those that are beyond their operational life cycle—are very likely to support Type‐2 virtualization and its products. Your more recently purchased servers are likely to support both classes. Obviously, with Type‐1 virtualization's dramatic performance enhancements, it is a likely goal for use in your small environment today.

Telling Them Apart

In every case, a Type‐1 virtualization solution will involve the direct installation of a hypervisor to a host system. That host system can include an OS for management purposes or can be devoid of a full OS entirely. In every case, a Type‐2 virtualization solution will arrive as an application that is installed on top of an existing OS instance.

Virtualization Makes Sense for Even the Smallest of Environments

Virtualization makes sense for large enterprises. In an all‐physical world, their massive environments are so wasteful of processor cycles as well as power and cooling energy that any consolidation of servers to virtual servers makes sense.

But virtualization makes sense for the small environment too. Small environments stand to gain reliability advantages from virtualization's enhanced systems commonality. The ability to relocate virtual servers when hardware failures occur is a boon to availability for small environments. The accident protections gained through snapshots reduce downtime that is related to administrator mistakes. Tying all of these together are the cost savings gained through consolidating many servers onto few.

It is the goal of this guide to assist you with understanding the nuances of how virtualization can work in your small business or environment. To continue this discussion, the next three chapters will expand the conversation to include the benefits, implementation suggestions, and best practices you can employ today to incorporate virtualization into your business and technology plans.

What Business Benefits Will I Recognize from Implementing Virtualization?

Small businesses and environments are indeed small due in many ways to their limited budgets. As such, the small business must leverage no‐cost and low‐cost tools wherever possible if it is to survive. It must be extremely careful about where it spends its money, making sure that every investment provides a direct and recognizable benefit back to the bottom line.

This focus is much different than what is typically seen in large enterprises. With large organizations, benefits are more easily classified in terms of operational efficiencies, with reductions in time and increases in agility directly equating to reductions in cost. Improving manageability is a key concern when you have 1000 servers in your data center because their management involves a lot of time and cost. The small business, however, must look to whole dollar or "hard" cost savings when considering any cost outlay.

To that end, answering the question posed by this chapter requires a primary focus on hard dollar savings to be of benefit to the small environment. You'll see just that focus in this chapter. Yet at the same time, this chapter will also discuss some of those other "soft" benefits that make sense. You might be surprised that even in the small environment, the addition of a "soft" benefit can in the end result in a recognizable "hard" return.

Why Should My Budget Care About Virtualization?

As with many IT technologies, virtualization's first play into the IT market was targeted directly towards the needs and demands of the enterprise organization. For enterprises, virtualization is an obvious and smart investment. The cost savings of reducing 1000 servers to 100 are easy to see. A 90% reduction in total server count, along with their power and cooling costs and all the associated administration improvements, makes a lot of sense for the very large environment.

Yet small environments can also benefit through the technologies first employed by the large corporations. For the small business environment, that same 90% reduction in physical servers may not reduce 1000 to 100, but reducing 20 to 2 results in just as striking a benefit to your cost basis. Those benefits obviously relate to your bottom line; at the same time, they go far in improving your business' computing agility.

With this idea in mind, why should your budget care about virtualization? What are the benefits your small business or environment stands to realize from a virtualization rollout? Let's first look at a few high‐level areas of return you can expect. With your virtualization investment:

- You will save money on hardware and software

- You will save money on power and cooling

- Your entry costs will start low

- You will leverage your existing infrastructure

- You will recognize improvements to your business services

- Your administrators are likely already familiar with this technology

All of these are pretty lofty statements. Yet each brings about a benefit to the business through direct cost avoidance, reduction in operational expense, or elimination/reduction of risk. Let's take a look at each in detail.

You Will Save Money on Hardware and Software

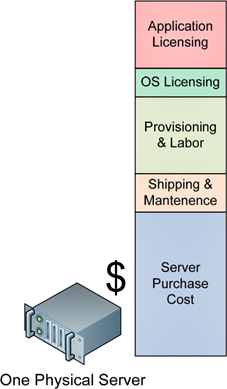

Virtualization's savings on physical hardware tends to arrive first with the need for future expansion. In the physical world, adding two additional servers to support a new business venture or management activity requires purchasing two new pieces of hardware, along with the necessary software and OS licenses, maintenance, and shipping costs associated with those servers. Additional manpower costs are required to provision those servers, install their OS, add applications, and generally prepare them for use.

In a virtualized environment, the infrastructure for servers is abstracted away from the traditional 1:1 relationship between server chassis and new business service. With a properly planned and managed virtual infrastructure, your environment will be configured to reserve sufficient processing power to support expansion. Thus, a need for two new servers does not require a requisition for two new physical servers. Fulfilling that need instead involves little more than provisioning them within the virtualization platform's management console. Although the manpower costs associated with application installations are generally the same, the other provisioning tasks—such as unboxing, mounting, cabling, and even the OS installation—are eliminated. Figure 2.1 shows a bar graph that illustrates these costs.

Figure 2.1: Adding a new virtual machine to a virtual host eliminates many costs traditionally associated with adding new services.

In Figure 2.1, you can easily see how the majority of the costs associated with bringing a new service online relate to its physical hardware. Buying new servers involves the cost for those servers as well as maintenance and shipping fees. That new server also incurs a marginal cost associated with getting it installed into your infrastructure. Racks, cabling, power and cooling, network connectivity and its associated infrastructure, and in some cases, shared storage are all potentially hidden costs that might not be accounted for in the plans of many small businesses and environments. In short, there are quite a few more costs associated with running a physical server than just the server itself.

This is the situation displayed on the left of Figure 2.1. Now, contrast this situation with the virtual host shown on the right. There, the costs associated with purchasing the server, adding maintenance and shipping fees, and provisioning are all grayed out. This is the case because the marginal costs of these components are effectively zero. Adding that new virtual server to an existing virtual infrastructure requires no physical reconfiguration and involves no additional hardware purchases.

The Laws of Physics Still Apply

There is an important caveat here: The costs identified are valid when your virtualization infrastructure has the residual capacity to support a new virtual server. As with everything else, virtual platforms must still obey the laws of physics. Any virtual platform will have a fixed amount of resources available to distribute to virtual machines based on the host's configured hardware resources. It then follows that at some point additional hosts, with all their associated costs, will be needed to support your future expansion.

Yet adding that new host is uniquely different. A new virtual host can support more than one virtual machine. Thus, depending on the resource needs of your virtual machines, adding a new virtual host is like getting many more than one new server for the price of one.
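To make this capacity caveat concrete, the following Python sketch estimates how many hosts a set of virtual machines needs when memory is the limiting resource. The function name `hosts_needed`, the 2GB reserved for the host itself, and the sample sizes are all illustrative assumptions rather than figures from any particular platform. The result is only a lower bound, because it ignores processor, disk, and network limits.

```python
import math
from typing import Sequence


def hosts_needed(vm_ram_gb: Sequence[float], host_ram_gb: float,
                 reserved_gb: float = 2.0) -> int:
    """Estimate the minimum number of identical hosts for the given VMs.

    Only memory is considered; reserved_gb is an assumed allowance for
    the hypervisor and the host itself.
    """
    usable_gb = host_ram_gb - reserved_gb
    if usable_gb <= 0:
        raise ValueError("host has no memory left for virtual machines")
    if any(ram > usable_gb for ram in vm_ram_gb):
        raise ValueError("at least one virtual machine is too large for a host")
    return math.ceil(sum(vm_ram_gb) / usable_gb)


# Hypothetical example: 4GB virtual machines on 32GB hosts.
print(hosts_needed([4] * 7, host_ram_gb=32))   # seven virtual machines
print(hosts_needed([4] * 10, host_ram_gb=32))  # ten virtual machines
```

With these assumed numbers, seven machines fit on one host while ten require a second, which is the point at which a new host's costs come back into play.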

You should notice in Figure 2.1 that the costs associated with OS licensing are specially highlighted for the virtual host. This is due to the special virtual machine licensing benefits that are offered by some OS manufacturers. For example, Microsoft provides the following license benefit when using the Enterprise Edition of Windows Server 2008:

A Windows Server 2008 Enterprise license grants the right to run Windows Server 2008 Enterprise on one server in one physical [instance] and up to four simultaneous virtual [instances]. If you run all five permitted instances at the same time, the instance of the server software running in the physical [instance] may only be used to run hardware virtualization software, provide hardware virtualization services or to run software to manage and service the [instances] on the server. You may run instances of the Standard or prior versions in place of Windows Server 2008 Enterprise in any of the [instances] on the licensed server

Microsoft's rules for the Datacenter Edition of Windows Server 2008 provide an even greater benefit:

When Windows Server 2008 Datacenter is licensed for every physical processor in a server, the server may run the server software in the physical [instance] and an unlimited number of virtual [instances] on the licensed server. You may run instances of Windows Server 2008 Standard or Windows Server 2008 Enterprise in place of any Windows Server 2008 Datacenter in any of the OSEs on the licensed server. Unlike with Standard and Enterprise, with Windows Server 2008 Datacenter, the instance of the server software running in the physical [instance] may be used to run any software or application you have licensed. Because Windows Server 2008 Datacenter permits an unlimited number of simultaneous running instances on a licensed server, you have the flexibility to run instances of Windows Server in virtual [instance] without having to track the number of instances running or worry about being under‐licensed.

In effect, the move to virtualization immediately increases your license count for certain OS versions by 4× or more. This benefit automatically expands your capacity for OS and virtual machine growth.

Application Licensing Is Not Necessarily Straightforward

Be warned that although licensing for Microsoft OSs gains benefits, licensing for your applications can grow complex based on the specific language of the licensing terms. When installing applications to virtual machines, pay special attention to license language that focuses on processor‐based licenses or server‐linked licenses. These can have unexpected impacts on installations to virtual environments.

Even if your goal is to consolidate just a few servers to a single host, your reduction in total server count frees those physical server chassis for new missions. As a result, the incremental cost of adding new services to your business drops dramatically. When the addition of a new service no longer automatically requires a new server, you can quickly and inexpensively expand your business as you see fit. In the case of a large expansion, the cost savings alone in new computer hardware can often pay for a virtualization implementation's initial costs right up front.

Figure 2.2: With virtualization's server consolidation capabilities, each new server purchase nets more than one virtual server instance, multiplying the benefits associated with its cost.

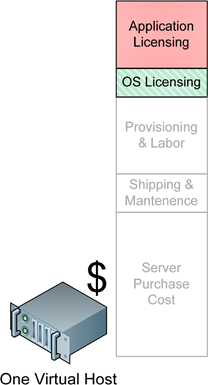

You Will Save Money on Power and Cooling

Hardware and software costs are not the only hard return gained by the organization that makes the move to virtualization. Costs associated with powering and cooling your servers can similarly be avoided at a rate that is directly related to your level of consolidation.

It is perhaps easiest to see this by looking at some real numbers. To give you an idea of the amount of money you are currently spending on merely powering your current servers, consider the following example. According to the power consumption calculator found at http://h30099.www3.hp.com/configurator/powercalcs.asp, the following server configuration consumes approximately 300 watts of power at steady state and when processing at a minimum 10% utilization:

- HP DL385 Generation 5 server‐class hardware

- 115V power

- 2.7GHz Processor

- 8GB RAM

- A single PCI‐E card

- Five 146GB SAS hard drives

As servers are generally operated continuously throughout their lifetime, this server draws its 300W for 24 hours a day, every day of the year, or roughly 2,628 kWh annually. If your average energy cost is 15¢ per kWh, each server of this type will cost you about $395/year to power. Twenty of these servers will cost you about $7900/year for power alone.
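The arithmetic behind these figures can be checked with a short Python sketch. The function name `annual_power_cost` is an illustrative assumption; the 300W draw and 15¢ per kWh rate come from the example above. The unrounded result is about $394 per server, which the text rounds up to $395.

```python
HOURS_PER_YEAR = 24 * 365  # continuous operation, non-leap year


def annual_power_cost(watts: float, dollars_per_kwh: float) -> float:
    """Return the yearly cost in dollars of running a constant electrical load."""
    kwh_per_year = watts * HOURS_PER_YEAR / 1000
    return kwh_per_year * dollars_per_kwh


per_server = annual_power_cost(300, 0.15)
print(f"One server:     ${per_server:.2f}/year")
print(f"Twenty servers: ${20 * per_server:.2f}/year")
```

Substituting your own measured wattage and utility rate gives a quick estimate for your environment.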

The costs don't stop there. As a rule of thumb, every watt consumed for a server's processing generates one watt of heat. Removing that heat requires air conditioning, a process that involves additional power. In general, every watt of heat requires another watt of energy to cool. Thus, for an environment that has perfect air conditioning—one that runs at 100% efficiency—you can assume that cooling your servers effectively doubles the costs.

Thus, as Figure 2.3 shows, to power and cool 20 servers of this type will require a recurring cost of $15,800/year.

Figure 2.3: 20 example physical servers can cost $15,800 per year to power and cool.

These costs can be even higher if you house your server infrastructure within an offsite hosting facility. To recognize profit, these facilities up‐charge the costs for power and cooling while also charging for the space consumed by the server chassis itself.

As a result, there are dramatic hard cost benefits that can be gained through the consolidation of server instances onto fewer server chassis. This chapter has already discussed how the goal of achieving a 90% consolidation of servers results in a reduction from 20 to a mere 2 servers. This directly reduces your overall power and cooling costs by $14,220 per year. Considering the average cost of server‐class equipment required to support virtualization, the savings in power and cooling costs alone could buy an additional server each and every year to expand your existing virtual machine capacity.
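The savings figure can be reproduced with a few lines of Python. This is a minimal sketch that uses the rounded $395 per-server power cost from the example and the rule of thumb that cooling doubles it; the function name and the `cooling_factor` parameter are illustrative assumptions.

```python
def power_and_cooling_cost(servers: int, power_cost_per_server: float,
                           cooling_factor: float = 2.0) -> float:
    """Yearly power cost for a group of servers, scaled up to include cooling."""
    return servers * power_cost_per_server * cooling_factor


before = power_and_cooling_cost(20, 395)  # all-physical environment
after = power_and_cooling_cost(2, 395)    # after a 90% consolidation
print(f"Before:  ${before:,.0f}/year")
print(f"After:   ${after:,.0f}/year")
print(f"Savings: ${before - after:,.0f}/year")
```

A real air conditioning system will not match the idealized factor of 2.0, so treat the parameter as something to adjust for your own facility.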

Your Entry Costs Will Start Low

You may at this point be asking, "For all these great cost‐savings benefits, what will I have to pay to start?" This is an honest question, because at first blush, some virtualization platform software can appear expensive. However, that expected initial expense needn't necessarily be high for small businesses and environments who consider the right approach to getting started.

For a small implementation, your initial cost outlay for a move to virtualization is likely to include some or all of the following components:

- Virtualization platform software. Most virtualization vendors provide an entry‐level software edition at no cost that includes the basic features. Although there tends to be a standard set of features available across all no‐cost solutions, there are notable differences between virtualization vendors. The next section will discuss some of the specific features you can expect to get from most solutions, but research your options thoroughly before deciding on a solution.

- Maintenance and support. Although the initial software acquisition for entry‐level virtualization software involves no cost, the maintenance and technical support for that software will incur a minor cost per year. This maintenance and technical support is critically helpful to ensure that specialists are available in the case of an emergency. Virtualization effectively encapsulates the whole of your business processing within its platform, so smart businesses should always consider the purchase of a minimum technical support contract as a risk‐reduction measure.

- One or more physical servers. You will require one or more physical servers on which to install the virtualization software. Host resources are a primary indicator of the resulting level of performance once servers are virtualized, so these servers should be equipped as powerfully as you can afford. Additionally, the function of these servers should be reserved exclusively for supporting the virtualization environment. Due to the high‐resource utilization seen on virtual hosts, it is not a recommended practice to leverage multiple roles for a virtual host.

- Additional RAM. Virtualization is heavily dependent on RAM, with more memory always preferred over less. More memory in a virtual host means that host can support more collocated virtual machines. With the cost of memory relatively inexpensive, this hardware upgrade quickly pays for itself by increasing your consolidation capabilities.

- Additional storage. Each collocated virtual machine requires virtual host disk storage that is equivalent to the real amount of disk storage that is configured for the virtual machine. Thus, if you want to collocate 10 virtual machines onto a single host and each virtual machine has a 40GB hard drive, you will need 400GB or more of storage to support this configuration. Be aware that some virtualization software technology requires real disk space for only the consumed portions of virtual disks rather than the entire assigned virtual disk. This technology can reduce your overall disk space requirements but must be closely monitored to prevent host disk space from being fully consumed.

- (Optional) External, shared storage. Direct‐attached storage within a physical host is limited by the count and size of disks that can be installed to the server chassis. Thus, the capabilities of a physical server will eventually be bound by its disk space. External storage that is shared between multiple hosts and connected via fibre channel, iSCSI, or NFS tends to incorporate a greater expansion capability and to enjoy more high‐availability features. This type of external storage is also a primary requirement for the advanced "motioning" capabilities discussed in the previous chapter.
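The storage sizing described in the list above can be sketched in Python. The example compares fully allocated virtual disks with the consumed‐only approach some platforms offer. The function names and the 15GB consumed per machine are hypothetical values chosen for illustration; only the 10 machines and 40GB disks come from the text.

```python
from typing import Sequence


def full_allocation_gb(vm_count: int, disk_gb_per_vm: float) -> float:
    """Host storage needed when every virtual disk is fully allocated."""
    return vm_count * disk_gb_per_vm


def consumed_only_gb(consumed_gb_per_vm: Sequence[float]) -> float:
    """Host storage needed when only consumed portions occupy real disk."""
    return sum(consumed_gb_per_vm)


assigned = full_allocation_gb(10, 40)
# Hypothetical usage: each virtual machine has written 15GB so far.
consumed = consumed_only_gb([15] * 10)
print(f"Fully allocated: {assigned}GB")
print(f"Consumed only:   {consumed}GB (can grow up to {assigned}GB)")
```

The gap between the two numbers is the space that must be monitored, since the consumed total can grow toward the assigned total as the virtual machines fill their disks.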

Chapter 3 will go into more detail about the physical and logical elements you will need to get started with virtualization. However, the previous list is useful for helping you understand the cost impacts of those components. Discounting the last bullet, which can be an expensive addition, each of these components can be incorporated at very little cost.

With this information in mind, there are a number of architectural decisions that you will need to consider when planning your virtualization implementation. Those decisions relate to the features you anticipate needing, as well as understanding where additional costs can be applied to improve your performance, reliability, and overall management experience. Consider the following decisions and cost impacts when planning your virtualization implementation.

Conscious of the Functions You Need and Don't Need

Entry‐level and no‐cost virtualization platforms focus primarily on basic server partitioning. This basic server partitioning embodies the technologies necessary to consolidate multiple virtual servers onto one physical host. With this basic server partitioning comes the core abilities to work with those machines as if they were physical computers. Your entry‐level software platform will typically include the ability to

- Create virtual machines and run them concurrently atop a single virtual host

- Power on, power off, and suspend running virtual machines as well as adjust their physical configuration

- Interact with the console of a running virtual machine through some form of client interface

- Migrate virtual machines from one host to another while that virtual machine is powered off

- Support basic snapshotting functionality, enabling limited snapshotting and snapshot management of virtual machines

- Manage virtual machines and their hosts on a per‐host basis

- Monitor the current and immediate‐past performance of that virtual machine with a limited set of counters

- Support limited capabilities to adjust the power‐on and power‐off sequence for virtual machines after a host failure; this prevents the race condition where every virtual machine attempts to power on at once after a virtual host reboot

- Set and enforce limited roles and permissions for users who interact with virtual machines
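The power‐on sequencing mentioned in the list above can be pictured as a simple staggered schedule. The Python sketch below is not any vendor's configuration format; the function name, the machine names, and the two‐minute delay are all hypothetical, chosen only to show how staggering avoids every virtual machine starting at once.

```python
from typing import List, Sequence, Tuple


def startup_schedule(vms_in_priority_order: Sequence[str],
                     delay_seconds: int) -> List[Tuple[str, int]]:
    """Return (vm_name, seconds_after_host_boot) pairs with staggered starts."""
    return [(name, index * delay_seconds)
            for index, name in enumerate(vms_in_priority_order)]


# Hypothetical machines, most critical first, started two minutes apart.
for name, offset in startup_schedule(
        ["domain-controller", "database", "file-server"], 120):
    print(f"{name}: power on {offset}s after host boot")
```

Listing the most critical services first means that dependent services come up only after the ones they rely on have had time to start.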

For most small businesses and environments, these capabilities are enough to get started. The features in this list enable your environment to create, manage, and nominally interact with hosted virtual machines. However, there are additional capabilities that are gained with the movement from no‐cost to for‐cost virtualization tools. Although a discussion of specifics is best left for the next section, these capabilities focus on enhancing the management flexibility for running virtual machines as well as enabling them with high‐availability features.

Aware of the Paths for Future Augmentation

The basic features highlighted earlier are effective for small businesses, especially when considered alongside their entry cost. Yet it is important to recognize that the move to virtualization very quickly consolidates a lot of resources onto a small number of physical devices. As such, the loss of a single virtual host can be catastrophic if preparations are not made.

This need for business continuity in the case of a virtual host failure is a primary motivator for many small businesses to eventually jump to for‐cost tools. Among other features, the add‐on capabilities enabled by for‐cost tools are focused on preventing this catastrophic loss of business processing. Consider the following as features that you can expect to see upon the upgrade to a for‐cost virtualization platform:

- Live migration capabilities that allow running virtual machines to be moved to an alternate host before a host failure

- Automated relocation to new hardware and restart of virtual machines immediately upon a host failure

- Load‐balancing calculations that manually or automatically re‐balance running virtual machines across hosts to prevent resource contention

- Disk storage migration that enables the zero‐impact relocation of virtual machine disk files to alternate storage

- Integrated block‐level backup capability for virtual machines, enabling the single‐file backup of an entire virtual machine as well as guaranteed restore; some for‐cost virtual platforms additionally offer the ability to perform individual file restores from an image‐level backup, which is useful in speeding the recovery of individual files

- Automated replication features that copy backed up virtual machines to alternate locations for disaster recovery purposes

- Centralized management, enabling the administration of all components of the virtual environment as a single unit

All businesses grow, as do their computing requirements. The smart business will look for virtualization platforms that provide an easy migration path from early no‐cost to later for‐cost software. As your business grows more reliant on your virtual infrastructure, it is likely that you will find these additional features necessary. Ensuring that your virtual platform can scale to meet your needs is critical for its long‐term viability.

Attentive to Hardware Requirements and Upgrades

In the end, keeping those virtual machines up and running is the most critical goal of any virtualization implementation. Yet the software that embodies its platform is only part of the expansion goals for a virtual infrastructure. The architecture of your computing environment itself must also scale to support your growing availability requirements.

These architecture enhancements arrive with the measured upgrade of your entire computing infrastructure. Although these capabilities may not be necessary as your business or environment starts small, growth over time will eventually lead the smart organization to make careful purchases. Your goal with hardware upgrades will be to reduce or eliminate single points of failure within your infrastructure, which ultimately increases the reliability of your business services themselves.

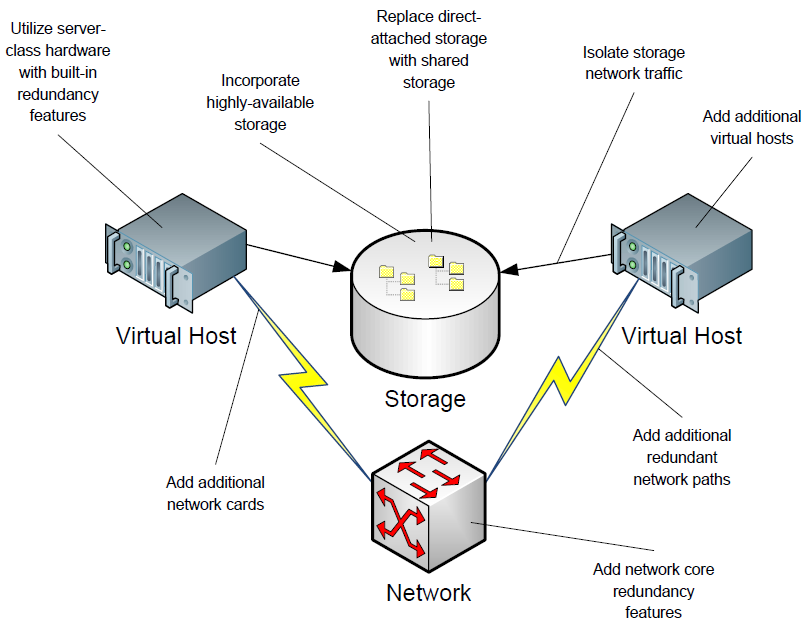

Figure 2.4: Additional hardware capabilities can be added to virtually every component of your virtual infrastructure to add resiliency.

To fully support high availability, the computing environment architecture can be expanded in many ways, some of which are called out in Figure 2.4:

- Adding network cards or entire paths ensures that the network is not a single point of failure

- Moving from local storage to shared storage decouples the data storage layer from the processing layer, adding flexibility and greater resiliency

- Storage appliances such as NAS and iSCSI tend to include internal designs that reduce or eliminate points of failure

- Even the apparently simplistic act of isolating storage network traffic from production network traffic prevents network conditions from impacting a virtual machine's access to its disks

These hardware augmentations needn't necessarily happen at once. Your small business or environment can incrementally add layers of redundancy as requirements and budget allow. Decisions regarding implementing these added layers will need to be made alongside your needs to expand your capacity for more virtual machines through the addition of new virtual hosts.

You Will Leverage Your Existing Infrastructure

Irrespective of what you want your environment to look like down the road, making the move to virtualization today requires technologies that you likely already have in place. Most no‐cost virtualization solutions today can run atop your existing infrastructure. Some virtual solutions require newer features such as 64‐bit hardware, hardware‐based data execution prevention, and/or hardware‐based virtualization extensions. Others provide similar experiences atop lower‐end hardware. To get the most benefit from your virtual platform selection, look for one that supports the technology you have already purchased but that includes the support of future needed capabilities.

Your Administrators Are Already Familiar with this Technology

The cost to educate IT staff on the use of virtualization technology can be a further expense. However, virtualization technology has been available for Microsoft servers for nearly a decade. For many, virtualization's first inroads into the IT data center actually began at the desktop level. Desktop virtualization applications enable the creation of virtual machines at an administrator's desktop, and are commonly used for testing and evaluating new technologies. They are also very popular with software developers for mocking up development and test environments.

As such, your systems administrators are likely already very familiar with virtualization's technologies and best practices. Although the specifics of your chosen virtual platform may require some skills training for your administrators, the overall cost to your company for moving to virtualization is likely to be much lower than with many technology insertions.

You Will Recognize Improvements to Your Business Services

Once implemented, virtualization enables levels of flexibility that were not possible using traditional physical servers alone. This flexibility comes about through the commonality of server instances, enabling a uniform server configuration across every virtual machine. The proper use of snapshots during typical administrative activities ensures that changes can be rolled back should they result in a server failure or problem. Also, the ability to create and rapidly deploy server "templates" enables your business to quickly prototype the development and deployment of new services. Each of these benefits is discussed in more detail in the following sections.

Uniform Server Configuration

When servers fail, the most significant contributing factor is often the differences in their physical composition. The installation of a patch might succeed on one server, only to crash another. One server's device configuration might work well with an application while another may experience problems. This problem is exacerbated over time as business growth and the need for additional hardware mandate new server purchases. With time passing between each purchase, the composition of earlier purchased servers is not likely to match that of servers purchased later on. This dissimilarity between hardware makes the job of keeping servers running more difficult, more complex, and more prone to error. With virtualization, every virtual server is functionally the same as every other, making hardware‐specific problems significantly less likely.

Configuration Rollback

Virtualization's snapshotting technologies mean that any change to a virtual server can be rolled back should that change not complete as expected. Administrators need only choose to "snapshot" the configuration of the virtual server—a typically manual step—prior to completing a change. If the change is completed successfully, the administrator can eliminate the snapshot and return the server to regular operations. If a problem occurs, "reverting to the snapshot" involves a few clicks in the server's interface. This capability prevents unexpected problems from impacting the server's operations. It further increases your servers' reliability while enabling administrators to solve problems without impacting your business.

Be Careful with Snapshots

One word of warning with snapshots: Although server snapshots are a useful tool for rolling back a configuration after a problem, their long‐term use can impact the overall performance of a virtual server. Administrators who invoke a snapshot prior to a risky configuration change or patch installation should remove the snapshot once the change is proven to be successful. Doing so ensures that the virtual server's disk processing does not incur the added overhead associated with running changes through the snapshot. This overhead grows particularly problematic when multiple, linked snapshots are created for a single server.
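The snapshot workflow described above (snapshot, change, then either revert or remove the snapshot) can be sketched in Python. The `ToyVM` class below is a hypothetical in‐memory stand‐in, not the API of any real virtualization platform, and `guarded_change` is an illustrative name. The sketch shows only the order of operations, including removing the snapshot once the change succeeds.

```python
import copy
from contextlib import contextmanager


class ToyVM:
    """Hypothetical stand-in for a virtual machine; not a real platform API."""

    def __init__(self, config: dict):
        self.config = dict(config)
        self.snapshots = []

    def take_snapshot(self) -> None:
        self.snapshots.append(copy.deepcopy(self.config))

    def revert_to_snapshot(self) -> None:
        self.config = self.snapshots.pop()

    def delete_snapshot(self) -> None:
        self.snapshots.pop()


@contextmanager
def guarded_change(vm: ToyVM):
    """Snapshot before a change; revert on failure, remove snapshot on success."""
    vm.take_snapshot()
    try:
        yield vm
    except Exception:
        vm.revert_to_snapshot()
        raise
    else:
        vm.delete_snapshot()  # avoid the long-term snapshot overhead


vm = ToyVM({"patch_level": 1})
try:
    with guarded_change(vm):
        vm.config["patch_level"] = 2
        raise RuntimeError("simulated patch failure")
except RuntimeError:
    pass
print(vm.config, len(vm.snapshots))
```

After the simulated failure the configuration is back at its original patch level and no snapshot is left behind, which mirrors the advice to keep snapshots short‐lived.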

Rapid Service Prototyping

Many small businesses and environments shy from new IT projects due to limitations on available hardware. Most small environment budgets simply don't have the flexibility to purchase server hardware for mere service evaluation. Because of this problem, many times, new services are not properly evaluated prior to implementation. Others are not implemented at all.

Virtual machines bring flexibility to the small environment because they enable administrators to create entire mock‐up environments atop existing hardware. When virtual servers can be created through a simple copy and paste, the process to create test and evaluation environments is extremely simple. Automation components natively available within some virtualization platforms can make this process even faster through the automated personalization of OSs. By giving your administrators the ability to evaluate new software and services prior to their implementation, you gain a higher likelihood of a successful implementation down the road.

Virtualization's Spectrum of Technology, Cost, and Business Requirements

The intent of this chapter is to equip you with information about virtualization's potential benefit to the small business and small environment. These benefits start with the "hard" cost savings associated with virtualization's classic case studies: server hardware consolidation, power and cooling benefits, and software license efficiencies. However, although small businesses are likely to look first to these dollar‐for‐dollar budgetary impacts, virtualization's benefits improve operational efficiencies as well.

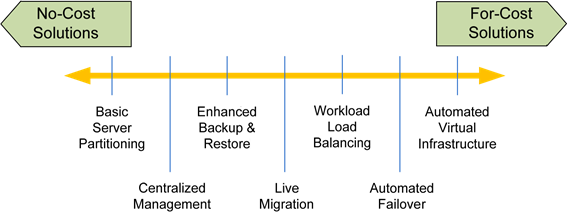

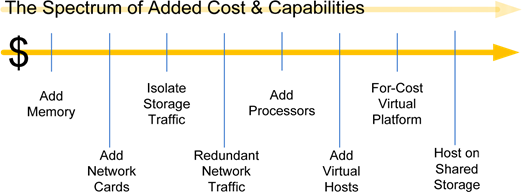

Some of these efficiencies can be recognized with the incorporation of virtualization's free toolsets. Others require the addition of for‐cost features. Ultimately, a spectrum exists between "no‐cost" and "for‐cost" solutions and the capabilities supported by each. That spectrum, shown in Figure 2.5, starts with basic server partitioning and ranges through the desire for centralized management and the enhancement of backups and server restores. Businesses ultimately come to the conclusion that Live Migration capabilities are necessary to ensure virtual server uptime. With Live Migration come the added benefits of workload load balancing and automated failover. Mating virtualization's administrative capabilities with the right monitoring and automated actions eventually gets the small business to the point where large portions of its virtual infrastructure become automated.

Figure 2.5: Virtualization's spectrum spans from no‐cost to for‐cost solutions and the capabilities that each provides.

The hard part is finding your business' sweet spot on that spectrum for the capabilities you need in your environment and the ones you can afford. To that end, Chapters 3 and 4 of this guide will go into further detail on the steps necessary to make the move towards virtualizing your computing infrastructure. Chapter 3 continues the conversation by asking and answering the question, "What do I need to get started with virtualization?" There, you'll learn about the technologies that virtualization relies upon, and which ones make sense for your business. Chapter 4 will continue by illuminating best practices associated with getting virtualization in the door. Its guidance will quick‐start your IT teams with the right information they need to avoid many of the common pitfalls in any virtual implementation.

What Do I Need to Get Started with Virtualization?

"All these benefits are great. But if the technology I already own doesn't support virtualization, it won't work for my business!"

Nowhere is this statement more important than with small businesses and environments. The goal of your business is to support your customers and ultimately make a profit. As such, implementing technology for technology's sake is always a losing bet, especially when your business isn't necessarily a technology company. For a technology such as virtualization to make sense for your small business or environment, it must be capable of arriving with a minimal or zero cost outlay. It must run atop your existing infrastructure, and it must provide a direct benefit to your goals of supporting your customers and making profit. If it doesn't accomplish these three things, it doesn't make sense for your business.

This chapter's goal is to provide you the technical information you need to ultimately make this decision. Whereas the first two chapters in this guide focused specifically on the cost and operational benefits associated with virtualization, this chapter will focus on a technical discussion of its hardware and software requirements. The intent here and in the next chapter is to provide a comprehensive guide to getting started. Although the content may be of a fairly high technical level, rest assured that the steps necessary to get started are actually pretty easy. Consider the information in these next chapters as your guide for ensuring that start is as successful as possible.

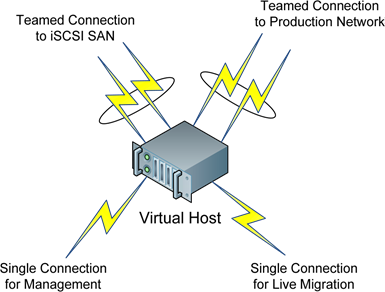

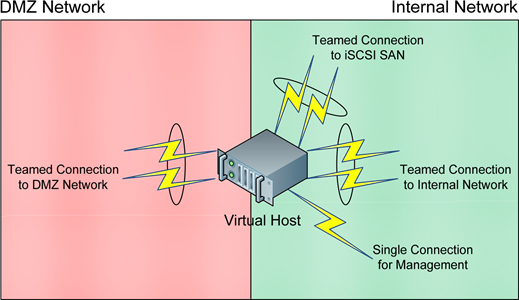

To begin, Figure 3.1 details what will be your grocery list of hardware and software components. These are minimally required to build a lightweight and no‐added‐cost virtualization infrastructure. You'll see in this figure that specific server components are required as well as elements at the network and storage layers. In addition to the right hardware, certain software components are required or optionally suggested. Much of the rest of this chapter will discuss each of these requirements in detail.

Your environment is likely to have most of these components already in place. Thus, repurposing them for use with your virtualization environment will be the first step in your implementation.

Figure 3.1: Your grocery list for a basic virtualization implementation with optional additional hardware and software.

Physical Servers

Obviously, to create a virtual host, you first need a physical host. Unlike many other IT services, virtualization requires large quantities of physical resources to fulfill its mission. Such is the case because a virtualization platform can scale the number of concurrently running virtual machines to essentially any level. The limiting factors are the number of hosts you have as well as the resources on each of those hosts. Greater numbers of physical processors at higher speeds can support the processing needs of more virtual machines. Higher levels of onboard RAM mean more virtual machines can be supported by a single physical host. With this "more is better" concept in mind, let's analyze each of the classes of server resources that you should consider.

Processors

Today's server‐class equipment typically arrives with a minimum of two processors. Many servers now include the support for four, eight, or more processors, leveraging multiple processor cores per socket. From the perspective of overall virtualization performance, the number of processor cores versus the number of sockets is irrelevant; however, the overall count of processors is important.

Licensing Processors and Cores: Although a processor versus a core may be insignificant from a raw performance perspective, be aware that virtual platform licensing may make one configuration's price different than another. For example, some virtual platform software may be priced by the number of sockets rather than the total number of processors.

A major function of the hypervisor layer is to schedule the processing of virtual machine workloads across available processors. Although a processor can process only a single instruction at a time, the rapid swapping of instructions in and out of a server's processors enables the appearance of concurrent functionality across every virtual machine. Because of this scheduling process, the more processors the hypervisor's scheduler can target, the more virtual machines can be serviced at the same time.
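To make the scheduling idea concrete, here is a deliberately simplified sketch. It assumes a plain round‐robin policy with equal time slices, which is an illustration rather than how any specific hypervisor schedules work; the function name `schedule_round_robin` and the virtual machine names are hypothetical.

```python
from collections import deque


def schedule_round_robin(vm_names, processor_count, time_slices):
    """Return, for each time slice, the list of VMs given a processor."""
    ready = deque(vm_names)
    timeline = []
    for _ in range(time_slices):
        running = []
        # Hand each available processor to the next waiting VM.
        for _ in range(min(processor_count, len(ready))):
            running.append(ready.popleft())
        # VMs that just ran go to the back of the queue.
        ready.extend(running)
        timeline.append(running)
    return timeline


vms = ["vm1", "vm2", "vm3", "vm4"]
for count in (2, 4):
    print(count, "processors:", schedule_round_robin(vms, count, time_slices=4))
```

With two processors, each virtual machine in this toy model runs in every other time slice; with four, every virtual machine runs in every slice.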

There is no established rule for the number of virtual machines that each onboard physical processor can support. Instead, effective performance monitoring is key to determining the best fit. Virtual machines with light resource requirements will use fewer processor resources than those with higher resource requirements. Understanding the anticipated resource needs of your virtual machines is important to knowing how many processors to bring to bear in a virtualization environment.

With this in mind, consider using or purchasing physical servers that have a minimum of two onboard processors for your initial implementation. Servers with four or more processors will support more virtual machines at a higher purchase cost. Going even further, servers with eight or more processors are available today for even greater expansion potential; however, these servers tend to be of much greater cost.

Memory

The second major factor in optimizing the maximum number of virtual machines per physical host relates to the amount of physical RAM in each server. Each running virtual machine requires physical RAM that is roughly equivalent to its assigned virtual memory. Thus, if you create a virtual machine that has been assigned 2GB of virtual memory, that virtual machine when booted will consume a roughly equal amount of physical memory.

It is for this reason that Chapter 1 discussed the fallacy of assigning too much memory to server instances. Consider the situation in which a physical server is running a very light workload, such as a file server or DNS server. In this case, if the physical server is outfitted with 4GB of memory but uses only 1GB for its daily processing, its extra memory goes unused. Although a waste in and of itself, this situation does not create a problem for other physical servers because their memory is contained within their server chassis and not shared.

Assigning too much memory in the virtualization context has substantially more effect. Consider a virtual host that contains 8GB of memory. If 4GB of memory are assigned to a virtual machine that in fact needs only 1GB, the extra memory is both wasted and unavailable for use by other virtual machines on the same host. It is for this reason that virtual machine memory consumption must be carefully monitored and adjusted based on the resource needs of the virtual machine.
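The effect of memory assignment on host capacity can be worked through with simple arithmetic. The sketch below uses the 8GB host from the example above; the 1GB reserved for the virtual platform itself is an assumed figure for illustration, and the function name is hypothetical.

```python
def max_vms_by_memory(host_ram_gb, reserved_for_host_gb, vm_ram_gb):
    """Estimate how many equally sized VMs fit in a host's physical RAM."""
    usable_gb = host_ram_gb - reserved_for_host_gb
    if usable_gb <= 0:
        return 0
    return usable_gb // vm_ram_gb


HOST_RAM_GB = 8
RESERVED_GB = 1  # assumption: memory set aside for the virtual platform

# Each VM assigned 4GB although it needs only 1GB.
print(max_vms_by_memory(HOST_RAM_GB, RESERVED_GB, vm_ram_gb=4))

# Each VM assigned the 1GB it actually needs.
print(max_vms_by_memory(HOST_RAM_GB, RESERVED_GB, vm_ram_gb=1))
```

Under these assumptions, right‐sizing each virtual machine from 4GB to 1GB raises the host's capacity from one virtual machine to seven.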

Physical Memory Sharing

Although this consumption of physical memory is the case with virtual machines, some virtualization platforms leverage the use of memory page table sharing. This feature allows physical memory to be shared between multiple virtual machines when the contents of each page table are equivalent. The use of memory page table sharing reduces the net effect of virtual machine memory requirements and results in an overall reduction of consumed memory when multiple virtual machines are simultaneously running.

There are, however, downsides to the use of this feature. The resource overhead associated with managing the sharing of memory page tables takes away from available resources on the host, which can result in a reduction in overall performance. Additionally, when virtual machines power on, they initially do not have the right information necessary to begin memory sharing. Thus, recently powered‐on virtual machines cannot participate. As such, even with this feature, make sure that ample physical memory is available on your virtual hosts.
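The idea behind memory sharing can be sketched as counting identical memory pages across virtual machines. This is a conceptual illustration only: the 4096‐byte page size, the use of SHA‐256 to detect identical content, and the function name `count_physical_pages` are all assumptions, and real platforms differ in how they detect and verify matching pages.

```python
import hashlib

PAGE_SIZE = 4096  # bytes; an assumed page size for illustration


def count_physical_pages(vm_memories):
    """Return (pages without sharing, pages with identical pages shared)."""
    total_pages = 0
    unique_pages = set()
    for memory in vm_memories:
        for offset in range(0, len(memory), PAGE_SIZE):
            page = memory[offset:offset + PAGE_SIZE]
            total_pages += 1
            # Identical content hashes to the same digest, so it is stored once.
            unique_pages.add(hashlib.sha256(page).digest())
    return total_pages, len(unique_pages)


# Two VMs running the same OS share two identical pages and differ in one.
os_pages = b"\x01" * PAGE_SIZE + b"\x02" * PAGE_SIZE
vm_a = os_pages + b"\xaa" * PAGE_SIZE
vm_b = os_pages + b"\xbb" * PAGE_SIZE

print(count_physical_pages([vm_a, vm_b]))
```

The hashing work in this sketch also hints at the management overhead described above: finding shareable pages costs processing time on the host.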

It is also worth mentioning that the amount of physical memory installed in a virtual host should be maximized. If your business can afford to augment an existing virtual host with additional memory, doing so is usually the least expensive change that will net the greatest benefit to the virtual environment.

Onboard Storage

Virtual hosts also require onboard disk storage for the hosting of the virtual platform itself. It is to this onboard disk storage that you will install the virtual platform's operating system (OS) and runtime files. It is also on this onboard disk storage that you may store virtual machines and their disk files as well as any supporting data such as software media.

The onboard storage requirements for most virtual platforms are relatively low in comparison with the quantity of disk storage available in today's servers. Figure 3.1 shows that a minimum of 2GB of disk storage is suggested for the virtual platform itself. This quantity is for the installation of its files and supporting software.

Due to its low cost and high levels of performance, direct‐attached storage (DAS) is where many small environments also store their virtual machine disk files. If you are repurposing existing equipment and do not have a storage‐area network (SAN) in your environment, DAS will be your only option for virtual machine storage. The correct amount of DAS required for your virtual environment will be based on a number of factors:

- Disk size for running virtual machines. You will need enough storage to support the full disk size of every concurrently‐running virtual machine. Thus, if you plan to host 10 virtual machines and each virtual machine is configured with a 40GB disk, you will need a minimum of 400GB of DAS spread across your virtual hosts.

- Disk size for non‐running virtual machines. You will find that virtual machines that are running are only one part of the total number of virtual machines in your environment. Over time, you will begin to accumulate non‐running virtual machine instances as well. These instances may be related to test and evaluation systems created for one‐time or irregular use. They may be virtual machines that are powered on only once in a while for specific uses. These virtual machines and their disks also require storage and will need to be factored into your calculations for disk storage.

- Disk size for future expansion. Because of the ease in deploying new virtual machines, many environments see a resulting explosion in virtual server instances once they have made the jump to virtualization. Your plans should include the additional disk space necessary to support your future expansion.

- Disk size for virtual machine templates. As discussed in the previous chapters, an important value‐add of virtualization is the ability to quickly create new server instances that are based on virtual machine templates. These templates are effectively virtual machines themselves that are not actively run as servers. Instead, they are used as the source for copying and pasting new server instances.

- Disk size for software media. Virtualization environments tend to make heavy use of ISO files that are created from original CD and DVD media. These files are convenient for use, as administrators do not need to locate the media and load it into the correct server's CD/DVD tray. Your additional disk space requirements for this media will depend on how much media you want to make available for use by your virtual machines.

As you can see, the disk space requirements for virtual machines can grow to become extremely large. In fact, many environments quickly find that the limitations of DAS soon become a barrier to virtual environment expansion. With the concurrent running of even 10 virtual machines requiring a half‐terabyte or more of DAS, you will quickly find that many servers are limited in the number of virtual machine disks they can host. In this case, it is likely that you will eventually decide to move to network‐based storage through an iSCSI, Fibre Channel, NAS, or NFS connection.
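The sizing factors listed above can be combined into a rough estimate. The sketch below is a starting point under stated assumptions: every virtual machine and template is assumed to use the same disk size, growth is modeled as a simple percentage of virtual machine storage, and the function name and sample figures (other than the 10 virtual machines with 40GB disks) are hypothetical.

```python
def estimate_das_gb(running_vms, disk_gb_per_vm, stopped_vms=0,
                    templates=0, iso_library_gb=0, growth_factor=0.0):
    """Estimate the DAS needed, in GB, for a small virtual environment."""
    # Running VMs, non-running VMs, and templates all hold full disk files.
    vm_storage_gb = (running_vms + stopped_vms + templates) * disk_gb_per_vm
    # Reserve headroom for future virtual machines.
    expansion_gb = vm_storage_gb * growth_factor
    return vm_storage_gb + expansion_gb + iso_library_gb


# Running VMs only: 10 VMs with 40GB disks.
print(estimate_das_gb(running_vms=10, disk_gb_per_vm=40))

# Adding 3 non-running VMs, 2 templates, 20GB of ISO files, 25% growth.
print(estimate_das_gb(running_vms=10, disk_gb_per_vm=40, stopped_vms=3,
                      templates=2, iso_library_gb=20, growth_factor=0.25))
```

The first call reproduces the 400GB figure for running virtual machines alone; the second shows how quickly the other factors push the total higher.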

Clones Reduce Disk Consumption

Many virtualization platforms use a feature called virtual machine cloning to reduce the overall impact of virtual machine storage. With clones, the disk files of virtual machines are linked. Here, a source virtual machine is used as the starting point for creating additional virtual machines. Linked virtual machine disk files are much smaller in size because they contain only the differences between the source and their unique configuration.
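The savings from linked disk files can be estimated with a short comparison. In this sketch, the 4GB of unique changes per clone is an assumed figure chosen for illustration, and both function names are hypothetical; actual difference sizes depend on how far each clone diverges from its source.

```python
def full_copy_storage_gb(source_disk_gb, vm_count):
    """Storage needed when every VM holds a complete copy of the disk."""
    return source_disk_gb * vm_count


def linked_clone_storage_gb(source_disk_gb, clone_count, delta_gb_per_clone):
    """Storage needed for one source disk plus a difference file per clone."""
    return source_disk_gb + clone_count * delta_gb_per_clone


SOURCE_DISK_GB = 40
VM_COUNT = 10
DELTA_GB = 4  # assumption: unique changes stored per clone

print(full_copy_storage_gb(SOURCE_DISK_GB, VM_COUNT))
print(linked_clone_storage_gb(SOURCE_DISK_GB, VM_COUNT, DELTA_GB))
```

Under these assumptions, ten linked clones need 80GB rather than the 400GB required for ten full copies.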